Here we look at: tweak the humanoid model so that it has much longer arms, the code I used for training an agent with long arms to walk. Note that I made the arms a bit longer than they were in the code above, trying to encourage the agent to use them for walking, and training the agent with different arm proportions.

Creating a Tweaked Model: Longer Arms

PyBullet loads the environments we have been using from XML files. This gives us an opportunity to tweak the XML.

We can see how the humanoid is built up from simpler components by looking at the XML file that defines it.

Specifically, let’s tweak the humanoid model so that it has much longer arms!

The following code doesn’t do any training. It just makes the change we’re interested in and displays the results. If you’re just following along in an SSH shell then you can skip this bit, otherwise you should see the humanoid rendered with its longer arms.

import xml.etree.ElementTree as ET

from tempfile import NamedTemporaryFile

from time import sleep

from pathlib import Path

import pybullet_data

from pybullet_envs.gym_locomotion_envs import HumanoidBulletEnv

from pybullet_envs.robot_locomotors import Humanoid, WalkerBase

assets_path = Path(pybullet_data.getDataPath()) / "mjcf"

assert assets_path.exists()

humanoid_model_path = assets_path / "humanoid_symmetric.xml"

assert humanoid_model_path.exists()

tree = ET.parse(humanoid_model_path)

def find_elem(node_type, name):

nodes = tree.iter(node_type)

for node in nodes:

if node.get("name") == name:

return node

assert False

for side in ("left", "right"):

lower_arm = find_elem("geom", f"{side}_larm")

lower_arm.set("fromto", "0.01 0.01 0.01 0.34 0.34 0.34")

hand = find_elem("geom", f"{side}_hand")

hand.set("pos", ".35 .35 .35")

with NamedTemporaryFile() as temp_file:

tree.write(temp_file)

class CustomHumanoid(Humanoid):

def __init__(self):

WalkerBase.__init__(self,

temp_file.name,

'torso',

action_dim=17,

obs_dim=44,

power=0.41)

class CustomHumanoidBulletEnv(HumanoidBulletEnv):

def __init__(self, render=False):

super().__init__(robot=CustomHumanoid(), render=render)

env = CustomHumanoidBulletEnv()

env.render(mode="human")

env.reset()

while True:

sleep(1)

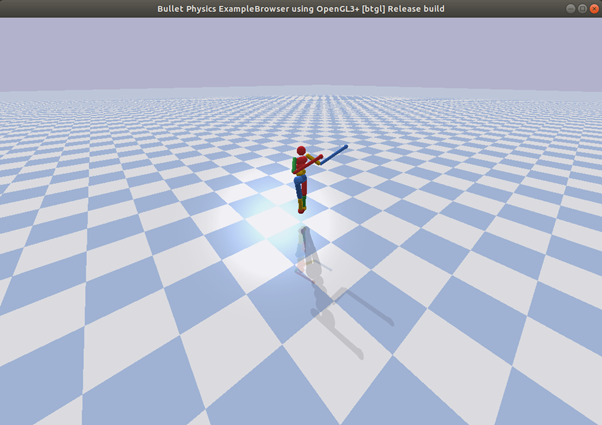

The result should look something like this:

Training With Long Lower Arms

Code

Here is the code I used for training an agent with long arms to walk. Note that I made the arms a bit longer than they were in the code above, trying to encourage the agent to use them for walking.

import xml.etree.ElementTree as ET

from tempfile import NamedTemporaryFile

from pathlib import Path

import pybullet

import pybullet_data

from pybullet_envs.gym_locomotion_envs import HumanoidBulletEnv

from pybullet_envs.robot_locomotors import Humanoid, WalkerBase

from ray.rllib.agents.sac import SACTrainer

from ray.tune import register_env, tune

assets_path = Path(pybullet_data.getDataPath()) / "mjcf"

assert assets_path.exists()

humanoid_model_path = assets_path / "humanoid_symmetric.xml"

assert humanoid_model_path.exists()

tree = ET.parse(humanoid_model_path)

def find_elem(node_type, name):

nodes = tree.iter(node_type)

for node in nodes:

if node.get("name") == name:

return node

assert False

for side in ("left", "right"):

lower_arm = find_elem("geom", f"{side}_larm")

lower_arm.set("fromto", "0.01 0.01 0.01 0.42 0.42 0.42")

hand = find_elem("geom", f"{side}_hand")

hand.set("pos", ".43 .43 .43")

with NamedTemporaryFile() as temp_file:

tree.write(temp_file)

temp_file_name = temp_file.name

class CustomHumanoid(Humanoid):

foot_list = ["right_foot", "left_foot", "left_lower_arm", "right_lower_arm"]

def __init__(self):

WalkerBase.__init__(self,

temp_file_name,

'torso',

action_dim=17,

obs_dim=44 + 2,

power=0.41)

def reset(self, bullet_client):

self._p = bullet_client

if self.doneLoading == 0:

self.ordered_joints = []

self.doneLoading = 1

self.objects = self._p.loadMJCF(

temp_file_name,

flags=pybullet.URDF_USE_SELF_COLLISION |

pybullet.URDF_USE_SELF_COLLISION_EXCLUDE_ALL_PARENTS |

pybullet.URDF_GOOGLEY_UNDEFINED_COLORS)

self.parts, self.jdict, self.ordered_joints, self.robot_body = self.addToScene(

self._p, self.objects)

self.robot_specific_reset(self._p)

s = self.calc_state()

return s

class CustomHumanoidBulletEnv(HumanoidBulletEnv):

def __init__(self, render=False):

super().__init__(robot=CustomHumanoid(), render=render)

ENV = 'HumanoidBulletEnvLongArms-v0'

def make_env(*args, **kwargs):

return CustomHumanoidBulletEnv()

register_env(ENV, make_env)

TARGET_REWARD = 6000

TRAINER = SACTrainer

tune.run(

TRAINER,

stop={"episode_reward_mean": TARGET_REWARD},

config={

"env": ENV,

"num_workers": 7,

"num_gpus": 1,

"monitor": True,

"evaluation_num_episodes": 50,

}

)

Graph

Video

Here is the best video of progress. As you can see, the agent has learnt to walk along. Instead of trying to balance on its arms, they are proving to be an annoyance that needs to be balanced.

Training With Gorilla Arms

Changes

Because it was simpler to implement, the previous code only changed the lengths of the lower arms. This doesn’t seem to be enough to encourage the agent to use its arms to help it walk.

Instead, I tried changing both the upper and lower arms to be roughly in the same proportions as gorillas have, in the hope that we would get more of an apelike motion. Gorillas are also very heavy and I didn’t change the weight distribution, so that is something you might like to experiment with.

I also found that I had to reduce the electricity cost that gets subtracted from the agent’s reward (see "Cancelled first attempt" below). Further, I added the SAC learning parameters that we have used before for the Humanoid, instead of relying on the defaults.

Code

Here is the code for training the agent with different arm proportions.

import xml.etree.ElementTree as ET

from tempfile import NamedTemporaryFile

from pathlib import Path

import pybullet

import pybullet_data

from pybullet_envs.gym_locomotion_envs import HumanoidBulletEnv

from pybullet_envs.robot_locomotors import Humanoid, WalkerBase

from ray.rllib.agents.sac import SACTrainer

from ray.tune import register_env, tune

assets_path = Path(pybullet_data.getDataPath()) / "mjcf"

assert assets_path.exists()

humanoid_model_path = assets_path / "humanoid_symmetric.xml"

assert humanoid_model_path.exists()

tree = ET.parse(humanoid_model_path)

def find_elem(node_type, name):

nodes = tree.iter(node_type)

for node in nodes:

if node.get("name") == name:

return node

assert False

for side in ("left", "right"):

upper_arm = find_elem("geom", f"{side}_uarm1")

upper_arm.set("fromto", "0 0 0 .36 .36 .36")

lower_arm_body = find_elem("body", f"{side}_lower_arm")

lower_arm_body.set("pos", ".37 .37 .37")

lower_arm = find_elem("geom", f"{side}_larm")

lower_arm.set("fromto", ".01 .01 .01 .3 .3 -.3")

hand = find_elem("geom", f"{side}_hand")

hand.set("pos", ".31 .31 -.31")

hand_body = ET.Element("body", {"name": f"{side}_hand"})

lower_arm_body.remove(hand)

hand_body.append(hand)

lower_arm_body.append(hand_body)

with NamedTemporaryFile() as temp_file:

tree.write(temp_file)

temp_file_name = temp_file.name

class CustomHumanoid(Humanoid):

foot_list = ["right_foot", "left_foot", "left_hand", "right_hand"]

def __init__(self):

WalkerBase.__init__(self,

temp_file_name,

'torso',

action_dim=17,

obs_dim=44 + 2,

power=0.41)

def reset(self, bullet_client):

self._p = bullet_client

if self.doneLoading == 0:

self.ordered_joints = []

self.doneLoading = 1

self.objects = self._p.loadMJCF(

temp_file_name,

flags=pybullet.URDF_USE_SELF_COLLISION |

pybullet.URDF_USE_SELF_COLLISION_EXCLUDE_ALL_PARENTS |

pybullet.URDF_GOOGLEY_UNDEFINED_COLORS)

self.parts, self.jdict, self.ordered_joints, self.robot_body = self.addToScene(

self._p, self.objects)

self.robot_specific_reset(self._p)

s = self.calc_state()

return s

class CustomHumanoidBulletEnv(HumanoidBulletEnv):

def __init__(self, render=False):

super().__init__(robot=CustomHumanoid(), render=render)

self.electricity_cost /= 4

ENV = 'HumanoidBulletEnvLongArms-v1'

def make_env(*args, **kwargs):

return CustomHumanoidBulletEnv()

register_env(ENV, make_env)

TARGET_REWARD = 100_000

TRAINER = SACTrainer

tune.run(

TRAINER,

stop={"episode_reward_mean": TARGET_REWARD},

config={

"env": ENV,

"num_workers": 7,

"num_gpus": 1,

"monitor": True,

"evaluation_num_episodes": 50,

"optimization": {

"actor_learning_rate": 1e-3,

"critic_learning_rate": 1e-3,

"entropy_learning_rate": 3e-4,

},

"train_batch_size": 128,

"target_network_update_freq": 1,

"learning_starts": 1000,

"buffer_size": 1_000_000,

"observation_filter": "MeanStdFilter",

},

)

Graph

I killed the process after 45 hours of training. The graph of learning progress looked like this.

Because the reward structure has changed (the smaller electricity cost), this graph is not comparable to the earlier one.

Videos

Cancelled First Attempt

Just for interest, here is the result I obtained when originally trying this configuration but only dividing the electricity cost by two instead of four. The agent just learned to accrue reward by balancing on all fours and not trying to move forwards.

This is after 18 hours of training.

Reduced Electricity Cost

Here are the three largest progress videos that were generated, which are included because they each show markedly different learned behaviours, and these were really the only three progress snapshots that could be considered to be examples of successful learning.

Series Conclusion

I hope you have enjoyed working through this series of articles about reinforcement learning in continuous control environments.

You have learned the basics of two algorithms capable of learning in continuous action spaces: Proximal Policy Optimisation (PPO) and Soft Actor-Critic (SAC). You have seen some of the environments that PyBullet makes available: CartPole (discrete and continuous), Hopper, Ant and Humanoid. Finally, you have seen some of the possibilities that exist for pushing the boundaries and changing the nature of what the agent is learning by adapting the environment so that the agent learns to run backwards and by altering the physics model so the agent has to learn to move with a different body shape.

I had fun and I hope you did too. Thank you for joining me on this trip through some weird and wonderful computer-generated environments.

This member has not yet provided a Biography. Assume it's interesting and varied, and probably something to do with programming.