In this short article, I would like to solve the XOR problem by combining declarative and imperative techniques through a dynamic weights approach.

Introduction

The XOR (“exclusive or”) problem is a classic problem in artificial neural network (ANN) research.

It is the problem of using a neural network to predict the output of an XOR logic gate, given two binary inputs.

An XOR function should return a true value if the two inputs are not equal and a false value if they are equal.
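As a quick reference, the truth table can be printed with C#'s built-in `^` operator on `bool` values. The class and method names here are illustrative only and are not part of the article's source code.

```csharp
using System;

public static class XorTruthTable
{
    // XOR is true exactly when the two inputs differ.
    public static bool Xor(bool a, bool b) => a ^ b;

    public static void Main()
    {
        // Enumerate all four input combinations.
        foreach (var a in new[] { false, true })
        {
            foreach (var b in new[] { false, true })
            {
                Console.WriteLine($"{(a ? 1 : 0)} XOR {(b ? 1 : 0)} = {Xor(a, b)}");
            }
        }
    }
}
```

Only the two mixed inputs (0, 1) and (1, 0) produce a true result.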

There are many different approaches to solving the XOR problem. Some are based on declarative methods, others on imperative approaches such as neural networks.

In this article, we will try to use a combined approach to solve the XOR problem.

This approach can also be used to solve other search problems.

Background

In order to use this approach, we will need some prerequisites.

First of all, we need to define the required output for a particular input, so let's define a data structure for that.

    var thought = new Thought
    {
        Decisions = new List<Decision>
        {
            // Inputs 1, 1 are equal, so XOR is false.
            new Decision
            {
                Answer = "False",
                Id = 1,
                Symbols = new List<Symbol> { new Symbol { Word = "1" }, new Symbol { Word = "1" } }
            },
            // Inputs 1, 0 differ, so XOR is true.
            new Decision
            {
                Answer = "True",
                Id = 2,
                Symbols = new List<Symbol> { new Symbol { Word = "1" }, new Symbol { Word = "0" } }
            },
            // Inputs 0, 0 are equal, so XOR is false.
            new Decision
            {
                Answer = "False",
                Id = 3,
                Symbols = new List<Symbol> { new Symbol { Word = "0" }, new Symbol { Word = "0" } }
            },
            // Inputs 0, 1 differ, so XOR is true.
            new Decision
            {
                Answer = "True",
                Id = 4,
                Symbols = new List<Symbol> { new Symbol { Word = "0" }, new Symbol { Word = "1" } }
            }
        }
    };

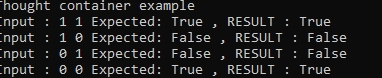

Here, we defined an instance of the Thought class. This class represents a logical container with a list of decisions.

Each decision represents an answer. Depending on the input, the thought should produce a valid decision.
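The article does not list the class definitions themselves. A minimal sketch of what they might look like, inferred only from the members used in this article (`Decisions`, `Answer`, `Id`, `Symbols`, `Word`, `Weight`, `Bias`, `Delta`), is shown below. The property types and defaults are assumptions, and the real classes in the full source may differ.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shapes, inferred from how the article uses these types.
public class Symbol
{
    public string Word { get; set; } = "";
    public double Weight { get; set; }   // adjusted during training
}

public class Decision
{
    public int Id { get; set; }
    public string Answer { get; set; } = "";
    public List<Symbol> Symbols { get; set; } = new List<Symbol>();
    public double Bias { get; set; }     // assumed to be a plain double
    public double Delta { get; set; }    // last computed weight correction
}

public class Thought
{
    public List<Decision> Decisions { get; set; } = new List<Decision>();
}

public static class ModelSketchDemo
{
    public static void Main()
    {
        var thought = new Thought();
        thought.Decisions.Add(new Decision
        {
            Id = 2,
            Answer = "True",
            Symbols = new List<Symbol> { new Symbol { Word = "1" }, new Symbol { Word = "0" } }
        });
        Console.WriteLine(thought.Decisions.Count);
    }
}
```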

Using the Code

Before we can use our thought, we need to train its decisions.

When a thought is created, all weights inside its decisions are randomly distributed.

- Randomize the weights using a normal distribution:

    thought.Decisions.ForEach(x => x.ResetWeights());
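The body of `ResetWeights` is not shown in the article. One common way to draw normally distributed values in C# is the Box-Muller transform, sketched below. The `GaussianSampler` class, its parameters, and the fixed seed are assumptions for illustration, not the author's implementation.

```csharp
using System;

public static class GaussianSampler
{
    // Box-Muller transform: turns two uniform samples into one normal sample.
    public static double NextGaussian(Random rng, double mean = 0.0, double stdDev = 1.0)
    {
        double u1 = 1.0 - rng.NextDouble(); // in (0, 1], avoids Math.Log(0)
        double u2 = rng.NextDouble();
        double standardNormal = Math.Sqrt(-2.0 * Math.Log(u1)) * Math.Cos(2.0 * Math.PI * u2);
        return mean + stdDev * standardNormal;
    }

    public static void Main()
    {
        var rng = new Random(42); // fixed seed only to make the demo repeatable
        var weights = new double[2];
        for (int i = 0; i < weights.Length; i++)
        {
            weights[i] = NextGaussian(rng);
        }
        Console.WriteLine(string.Join(", ", weights));
    }
}
```

A `ResetWeights` method could assign one such sample to the bias and to each symbol weight.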

- Now we need to train our thought:

    thought.Reinforce(new[] { "0", "0" }, 3);
    thought.Reinforce(new[] { "1", "0" }, 2);
    thought.Reinforce(new[] { "0", "1" }, 4);
    thought.Reinforce(new[] { "1", "1" }, 1);

During the training process, we provide the input and the id of the desired decision as the target output.

The training itself is a loop with a predefined number of iterations.

The number of iterations is defined as Decisions.Count * 1300, where 1300 is a heuristically chosen constant. For the four decisions above, that gives 4 * 1300 = 5200 iterations.

Inside the loop, we try to calculate the difference in output score and propagate it to the target decision.

The update delta for a target decision is calculated using the following formula:

    var updateDelta = MathUtils.Derivative(answer.Score) *
                      targetDecision.Delta * targetAnswer.Score;

This is very similar to what we would do if we built a two-layer neural network.
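The `MathUtils` helpers are not listed in the article. A minimal sketch is given below, assuming that `Sigmoid` is the standard logistic function and that `Derivative` takes a value that is already a sigmoid output, as the call `MathUtils.Derivative(answer.Score)` suggests. The class name `MathSketch` and the sample numbers are hypothetical.

```csharp
using System;

public static class MathSketch
{
    // Standard logistic function, mapping any real number into (0, 1).
    public static double Sigmoid(double x) => 1.0 / (1.0 + Math.Exp(-x));

    // Assumes s is already a sigmoid output, so the slope is s * (1 - s).
    public static double Derivative(double s) => s * (1.0 - s);

    // Same product as in the update formula, with plain doubles as inputs.
    public static double UpdateDelta(double answerScore, double targetDelta, double targetScore) =>
        Derivative(answerScore) * targetDelta * targetScore;

    public static void Main()
    {
        double score = Sigmoid(0.0);        // 0.5
        double slope = Derivative(score);   // 0.25
        // Hypothetical values for the target decision's delta and score.
        double updateDelta = UpdateDelta(score, 0.4, 0.5);
        Console.WriteLine($"{score} {slope} {updateDelta}");
    }
}
```

The slope is largest when the score is 0.5 and shrinks as the score approaches 0 or 1, so confident decisions receive smaller corrections.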

After the delta is calculated, we apply it to the decision's bias and symbol weights inside the following method:

    public void UpdateWeights() {
        // Shift the bias and every symbol weight by the same delta.
        Bias += Delta;
        foreach (var symbol in Symbols) {
            symbol.Weight += Delta;
        }
    }

After training is completed, we can ask the trained thought for an answer.

To get an answer from the thought, first we need to find a match between input and decision symbols.

Then we sum the weights of the matched symbols, add the bias, and pass the result to an activation function (in our case, a sigmoid function).

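    public double Stimulate(string[] words) {
        // Keep only the symbols whose word appears in the input.
        var matchedSymbols = Symbols.Where(s => words.Contains(s.Word));
        // Sum the matched weights, add the bias, and squash into (0, 1).
        var score = matchedSymbols.Sum(x => x.Weight) + Bias;
        return MathUtils.Sigmoid(score);
    }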
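The article does not show how the final answer is picked from the individual scores. One plausible reading, sketched below, is to stimulate every decision and return the one with the highest score. The `AnswerSelection` class, the `Best` method, and the hand-picked weights are assumptions for illustration. They are not trained values and not the author's code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Symbol
{
    public string Word { get; set; } = "";
    public double Weight { get; set; }
}

public class Decision
{
    public string Answer { get; set; } = "";
    public double Bias { get; set; }
    public List<Symbol> Symbols { get; set; } = new List<Symbol>();

    // Same scoring as the Stimulate method above, with the sigmoid inlined.
    public double Stimulate(string[] words)
    {
        var matchedSymbols = Symbols.Where(s => words.Contains(s.Word));
        var score = matchedSymbols.Sum(x => x.Weight) + Bias;
        return 1.0 / (1.0 + Math.Exp(-score));
    }
}

public static class AnswerSelection
{
    // Assumption: the winning decision is the one with the highest score.
    public static Decision Best(IEnumerable<Decision> decisions, string[] words) =>
        decisions.OrderByDescending(d => d.Stimulate(words)).First();

    // Hand-picked (not trained) weights, chosen so the demo is easy to follow.
    public static List<Decision> SampleDecisions() => new List<Decision>
    {
        new Decision
        {
            Answer = "False", Bias = 0.0,
            Symbols = new List<Symbol> { new Symbol { Word = "1", Weight = 0.5 }, new Symbol { Word = "1", Weight = 0.5 } }
        },
        new Decision
        {
            Answer = "True", Bias = -0.5,
            Symbols = new List<Symbol> { new Symbol { Word = "1", Weight = 1.0 }, new Symbol { Word = "0", Weight = 1.0 } }
        }
    };

    public static void Main()
    {
        var decisions = SampleDecisions();
        Console.WriteLine(Best(decisions, new[] { "1", "0" }).Answer); // True
        Console.WriteLine(Best(decisions, new[] { "1", "1" }).Answer); // False
    }
}
```

Note that `Stimulate` matches symbols by word only, not by position, so the inputs { "1", "0" } and { "0", "1" } produce the same score for every decision.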

Points of Interest

The described approach can be easily adapted to solve various search problems.

You can find the full source code of the example on GitHub:

History

- 13th January, 2022: Initial version
