Kazushige Goto, Murat Efe Guney, and Sarah Knepper, Software Development Engineers, Intel Corporation

General matrix-matrix multiplication (GEMM) is a fundamental operation in many scientific, engineering, and machine learning applications and is one of the key routines in the BLAS (basic linear algebra subprograms) domain. Four precisions (real single, real double, complex single, and complex double) of GEMM exist. In this article, we focus on SGEMM (real single precision).

The Fortran API for SGEMM is:

SGEMM(transa, transb, m, n, k, alpha, A, lda, B, ldb, beta, C, ldc)

It performs the computation:

C := alpha * op(A) * op(B) + beta * C, where op(X) = X or X<sup>T</sup>, depending on the value of the corresponding transa or transb parameter.

The arrays A and B are inputs, while C is both input and output. After any transposition, op(A) is an m-by-k matrix and op(B) is a k-by-n matrix; C is an m-by-n matrix. The leading dimensions (lda, ldb, and ldc) give the stride from one column to the next, which allows GEMM to work on portions of a larger matrix. Leading dimensions can also affect performance, for example by causing successive columns to be cache line-aligned or to map to the same set of the 8-way level-1 cache.
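To make the roles of the transposition flags and leading dimensions concrete, here is a minimal, unoptimized sketch of the computation in C. It assumes column-major storage (as in the Fortran API); the names `ref_sgemm` and `op_elem` are hypothetical and are not part of Intel MKL. It is meant only to pin down the semantics, not to show how an optimized library computes the result.

```c
#include <assert.h>
#include <stddef.h>

/* Element (i, j) of op(X). X is stored column-major with leading dimension
   ldx; trans is 'N' (use X as stored) or 'T' (use the transpose of X). */
static float op_elem(char trans, const float *x, int ldx, int i, int j)
{
    return (trans == 'N') ? x[i + (size_t)j * ldx]
                          : x[j + (size_t)i * ldx];
}

/* Unoptimized reference: C := alpha * op(A) * op(B) + beta * C. */
void ref_sgemm(char transa, char transb, int m, int n, int k,
               float alpha, const float *a, int lda,
               const float *b, int ldb,
               float beta, float *c, int ldc)
{
    assert(m >= 0 && n >= 0 && k >= 0 && ldc >= m);
    for (int j = 0; j < n; ++j) {
        for (int i = 0; i < m; ++i) {
            float acc = 0.0f;
            for (int p = 0; p < k; ++p)
                acc += op_elem(transa, a, lda, i, p) *
                       op_elem(transb, b, ldb, p, j);
            /* Column j of C starts ldc elements after column j - 1. */
            float *cij = &c[i + (size_t)j * ldc];
            /* When beta is zero, C is overwritten without being read. */
            *cij = (beta == 0.0f) ? alpha * acc
                                  : alpha * acc + beta * (*cij);
        }
    }
}
```

Passing a leading dimension larger than the number of rows (for example, lda = 3 for a 2-row block) makes the routine skip the extra rows, which is how a submatrix of a larger array can be multiplied in place.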

Intel® Math Kernel Library (Intel® MKL) offers high-performing GEMM implementations. The typical approach for optimizing matrix-matrix multiplication is to transform blocks of the original input matrices into an internal data format (such as a packed format), multiply transformed blocks via a handwritten assembly kernel, and then update the output matrix.<sup>1</sup> Block sizes are chosen to maximize cache and register usage. The reasons to pack are numerous:

- The ability to fit more data from A and B into the caches, which allows for bigger blocking and more data reuse

- Contiguous, aligned, and predictable accesses, which minimize cache and data translation lookaside buffer (DTLB) misses

- A reduction in loop overhead
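As an illustration of what packing means, the sketch below copies a block of a column-major matrix into a contiguous buffer arranged in narrow row panels, so that a kernel can read it with unit stride. The panel height `MR`, the function name `pack_a_block`, and the layout itself are assumptions chosen for illustration; the internal format used by Intel MKL is not described in this article.

```c
#include <assert.h>
#include <stddef.h>

enum { MR = 4 };  /* hypothetical panel height, e.g. rows handled per kernel step */

/* Copy an mb-by-kb block of column-major A (leading dimension lda) into
   `packed`, which must hold ((mb + MR - 1) / MR) * MR * kb floats.
   Each panel of MR rows is stored column by column; a short final panel
   is padded with zeros so every panel has the same shape. */
void pack_a_block(int mb, int kb, const float *a, int lda, float *packed)
{
    assert(mb >= 0 && kb >= 0 && lda >= mb);
    size_t out = 0;
    for (int i0 = 0; i0 < mb; i0 += MR) {      /* one panel per MR rows */
        for (int p = 0; p < kb; ++p) {         /* walk the panel's columns */
            for (int r = 0; r < MR; ++r) {
                int i = i0 + r;
                packed[out++] = (i < mb) ? a[i + (size_t)p * lda] : 0.0f;
            }
        }
    }
}
```

After packing, the source stride lda no longer matters: the kernel touches memory strictly sequentially, which is the property behind the cache and DTLB benefits listed above. The copy itself is the overhead that the rest of this article is concerned with.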

For conventional sizes in high-performance computing, this packing-based approach works well. In these cases, m and n are typically relatively large, while k may be moderate (outer product) or also relatively large (square), so the time spent packing the input matrices is small relative to the time spent in the computational kernel. However, for sizes where one of m or n is relatively small, as is common in some machine learning applications, the packing overhead can become significant. As a result, a GEMM implementation that does not rely on explicit packing can outperform a conventional, packing-based GEMM implementation. Intel MKL 11.3 Update 3 includes {S,D}GEMM kernels that are optimized for some of these skewed sizes for Intel® Advanced Vector Extensions 2 (Intel® AVX2) and Intel® Advanced Vector Extensions 512 (Intel® AVX-512) and on the 2nd generation of the Intel® Xeon Phi™ processor. Later versions of Intel MKL continue to improve these kernels.
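A rough cost model shows why skewed sizes are affected. It assumes, as a simplification, that packing touches each element of A and B exactly once and that the multiplication performs 2mnk floating-point operations; real implementations block the matrices and may behave differently. Under those assumptions, the number of packed elements per floating-point operation is:

$$
\frac{mk + kn}{2mnk} = \frac{1}{2n} + \frac{1}{2m}
$$

With the illustrative values m = n = 1000, this is 0.001 packed elements per operation. With m = 1000 and n = 8, it is about 0.063, roughly 63 times higher, and the 1/(2n) term (the cost of packing A) accounts for almost all of it. Note that k cancels out: in this model, a larger k does not make packing relatively cheaper.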

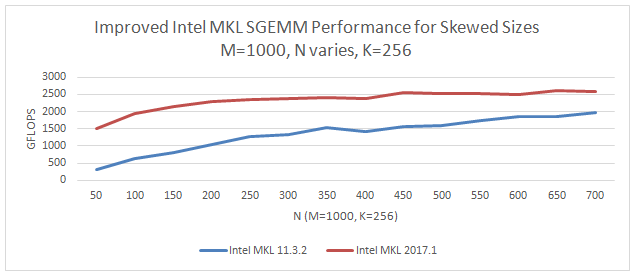

To see an example of the benefit these new kernels can provide, Figure 1 compares the performance of SGEMM in Intel MKL 2017 Update 1 with that of Intel MKL 11.3 Update 2 for sizes that may arise in machine learning. Here, m and k are fixed to 1000 and 256, respectively, while n varies. The performance is given in gigaflops (billions of floating-point operations per second), so higher is better.

Figure 1. Improved Intel® MKL SGEMM performance for skewed sizes

Based on the particulars of the SGEMM call (including processor type and thread count, problem size and leading dimensions, and transposition parameters), Intel MKL chooses to use either the conventional kernels or the new packing-free kernels. Deep learning frameworks that rely on Intel MKL for SGEMM performance benefit from these optimizations without requiring modification to the frameworks.

Another way to minimize the packing overhead arises when one or both of the input matrices (A or B) are reused in multiple matrix multiplications; for example, this can happen in recurrent neural networks. Here, we can pay the cost to pack the reused matrix once and then use the packed version in multiple SGEMM computations. Intel MKL 2017 introduced packed APIs for {S,D}GEMM that allow the packing overhead to be amortized over multiple matrix multiplications. For single precision, the four new packed APIs are:

dest = sgemm_alloc (identifier, m, n, k)

sgemm_pack (identifier, trans, m, n, k, alpha, src, ld, dest)

sgemm_compute (transa, transb, m, n, k, A, lda, B, ldb, beta, C, ldc)

sgemm_free (dest)

The parameters are similar to those of SGEMM, with the addition of the identifier character, which specifies which matrix (A or B) is to be packed. The transa and transb parameters of sgemm_compute can be set to "T" (transpose) or "N" (no transpose) as usual; additionally, a value of "P" indicates that the corresponding matrix is in the internal packed format. The packed APIs provide a benefit if an input matrix is used multiple times: sgemm_alloc and sgemm_pack are each called once, to allocate memory and to pack the desired matrix into the internal packed format, respectively, followed by multiple calls to sgemm_compute in which the packed matrix is passed as one of the input matrices. Finally, sgemm_free is called to release the memory. (For further details, please see the Intel® Math Kernel Library Developer Reference.)
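The call pattern can be sketched in self-contained C as follows. The functions here (`toy_pack_a`, `toy_compute_packed_a`, `reuse_packed_a`) are hypothetical stand-ins written for this illustration; they are not the Intel MKL routines and do not reproduce their signatures or packed format. The sketch mirrors one detail visible in the signatures above: alpha is supplied at pack time and is absent from the compute step, so the scaling is paid for once.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical stand-in for the allocate + pack steps: returns a contiguous
   m-by-k copy of alpha * op(A), or NULL on allocation failure. The layout is
   a plain column-major copy, not a real library's internal packed format. */
float *toy_pack_a(char trans, int m, int k, float alpha,
                  const float *a, int lda)
{
    float *dest = malloc((size_t)m * (size_t)k * sizeof *dest);
    if (dest == NULL)
        return NULL;
    for (int p = 0; p < k; ++p) {
        for (int i = 0; i < m; ++i) {
            float v = (trans == 'N') ? a[i + (size_t)p * lda]
                                     : a[p + (size_t)i * lda];
            dest[i + (size_t)p * m] = alpha * v;  /* scale once, at pack time */
        }
    }
    return dest;
}

/* Hypothetical stand-in for the compute step with A already packed:
   C := packed_A * B + beta * C. There is no alpha argument because the
   scaling was applied when A was packed. */
void toy_compute_packed_a(int m, int n, int k, const float *packed_a,
                          const float *b, int ldb,
                          float beta, float *c, int ldc)
{
    assert(ldb >= k && ldc >= m);
    for (int j = 0; j < n; ++j) {
        for (int i = 0; i < m; ++i) {
            float acc = 0.0f;
            for (int p = 0; p < k; ++p)
                acc += packed_a[i + (size_t)p * m] * b[p + (size_t)j * ldb];
            float *cij = &c[i + (size_t)j * ldc];
            *cij = (beta == 0.0f) ? acc : acc + beta * (*cij);
        }
    }
}

/* Pack A once, reuse it for `count` different B matrices, then release it.
   Returns 0 on success, -1 if the packed buffer could not be allocated. */
int reuse_packed_a(int m, int n, int k, float alpha,
                   const float *a, int lda,
                   const float *const *bs, int ldb,
                   float *const *cs, int ldc, int count)
{
    float *packed = toy_pack_a('N', m, k, alpha, a, lda);
    if (packed == NULL)
        return -1;
    for (int t = 0; t < count; ++t)
        toy_compute_packed_a(m, n, k, packed, bs[t], ldb, 0.0f, cs[t], ldc);
    free(packed);
    return 0;
}
```

In terms of the four calls described above, `toy_pack_a` plays the role of sgemm_alloc followed by sgemm_pack, `toy_compute_packed_a` corresponds to sgemm_compute with transa set to "P", and `free` corresponds to sgemm_free. The loop in `reuse_packed_a` is where the amortization happens: the packing cost is paid once, however many B matrices follow.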

Figure 2 shows a comparison of the performance of sgemm and sgemm_compute (where the A matrix is packed and the packing time is ignored) in gigaflops.

Configuration Info - Versions: Intel® Math Kernel Library (Intel® MKL) 2017.1; Hardware: Intel® Xeon® Processor E5-2699v4, 2 22-core CPUs (55MB LLC, 2.2GHz), 64GB of RAM; Operating System: RHEL 7.2 GA x86_64.

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. *Other names and brands may be claimed as the property of others. Benchmark Source: Intel Corporation

Figure 2. Faster matrix multiplication using Intel® MKL packed APIs

Applications that reuse the larger of the A and B input matrices between GEMM calls have the greatest potential to benefit from packed APIs. If both m and n parameters are large, or if the smaller of the two input matrices is reused, the performance improvements from switching to packed APIs may not be significant enough to justify the programming effort. Therefore, we recommend measuring the performance of the particular use case before modifying the application code to employ packed APIs.

As we have seen, the internal packing operation as found in a conventional GEMM implementation can have a noticeable overhead, particularly for sizes with one or more small dimensions. This overhead can be reduced by using kernels that avoid explicitly packing the input matrices or by amortizing the cost of packing over multiple GEMM computations. Starting from Intel MKL 11.3 Update 3, {S,D}GEMM can choose to use kernels that operate directly on the input matrices without first packing to internal buffers; which kernels are used is determined at runtime based on problem characteristics and processor information. Alternatively, Intel MKL 2017 introduced packed APIs that allow one or both of the input matrices to be explicitly packed and then reused in multiple matrix-matrix computations. These two approaches help to achieve high GEMM performance on multicore and many-core Intel® architectures, particularly for sizes arising from deep neural networks.

1. Kazushige Goto and Robert A. van de Geijn. 2008. "Anatomy of High-Performance Matrix Multiplication." ACM Transactions on Mathematical Software, 34, 3, Article 12, May 2008.
