Here we'll create the Python code for detecting humans in images using SSD DNN models.

In the previous article of this series, we launched our person detection software on a Raspberry Pi device. In this one, we’ll compare two SSD models, MobileNet and SqueezeNet, on the Raspberry Pi 3B for precision and performance. We’ll then select the better one to use on a video clip and in real-time mode.

First, let’s create a utility class for measuring the execution time and FPS of a function:

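    import time

    class FPS:
        """Accumulates call durations and reports the average frames per second."""

        def __init__(self):
            self.frame_count = 0
            self.elapsed_time = 0

        def start(self):
            # Remember when the measured call began
            self.start_time = time.time()

        def stop(self):
            # Add the duration of the call that just finished to the running total
            self.stop_time = time.time()
            self.frame_count += 1
            self.elapsed_time += (self.stop_time - self.start_time)

        def count(self):
            return self.frame_count

        def elapsed(self):
            return self.elapsed_time

        def fps(self):
            # Average FPS over all measured calls; 0 if nothing has been measured yet
            if self.elapsed_time == 0:
                return 0
            else:
                return self.frame_count / self.elapsed_time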

The class saves the start time when the start method is called. When the stop method is called, it adds the time elapsed since then to a running total and increments the call counter. Knowing the total elapsed time and the number of function executions, we can calculate the average FPS over a long computation.
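To make the averaging concrete, here is a minimal sketch of the same accumulate-and-divide idea. `FpsMeter` and its `clock` parameter are hypothetical names introduced only for this illustration; they are not part of the article's code. The injectable clock lets us replay known timestamps instead of waiting on real time.

```python
import time


class FpsMeter:
    """Illustrative variant of the FPS class with an injectable clock (an assumption)."""

    def __init__(self, clock=time.perf_counter):
        self.clock = clock          # function returning the current time in seconds
        self.frame_count = 0
        self.elapsed_time = 0.0
        self.start_time = None

    def start(self):
        self.start_time = self.clock()

    def stop(self):
        # Add the duration of this call to the running total.
        self.elapsed_time += self.clock() - self.start_time
        self.frame_count += 1

    def fps(self):
        if self.elapsed_time == 0:
            return 0
        return self.frame_count / self.elapsed_time


# Replay fixed timestamps: three calls lasting 0.5 s, 1.0 s and 1.5 s.
timestamps = iter([0.0, 0.5, 10.0, 11.0, 20.0, 21.5])
meter = FpsMeter(clock=lambda: next(timestamps))

for _ in range(3):
    meter.start()
    # ... the function being measured would run here ...
    meter.stop()

print(meter.frame_count)    # 3
print(meter.elapsed_time)   # 3.0
print(meter.fps())          # 1.0
```

Note that the idle gaps between calls (from 0.5 to 10.0, for instance) are not counted. Only the time between each start and stop contributes to the average.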

Now let’s modify our Python code to measure the detection time (only the modified part is shown here):

    fps = FPS()

    # Time only the detection itself
    fps.start()
    obj_data = ssd.detect(image)
    persons = ssd.get_objects(image, obj_data, person_class, 0.5)
    fps.stop()

    person_count = len(persons)
    print("Person count on the image: " + str(person_count))
    print("FPS: " + str(fps.fps()))

By running the code on several images, we can estimate the average time the device’s processor needs to detect persons in a frame. We did this for the MobileNet DNN model and got an average of 1.25 FPS, which means the device processes one frame in about 0.8 seconds.

To test the SqueezeNet SSD model, let’s modify our code again. One obvious change is to the paths used for loading the model:

    proto_file = r"/home/pi/Desktop/PI_RPD/squeezenet.prototxt"
    model_file = r"/home/pi/Desktop/PI_RPD/squeezenet.caffemodel"

Another important modification is switching to a frame processor with different scaling parameters. The SqueezeNet model was trained with its own scaling coefficients, so we must specify the appropriate values for this model:

    ssd_proc = FrameProcessor(proc_frame_size, 1.0, 127.5)
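To see what the two numbers do, the sketch below assumes that `FrameProcessor` passes them to OpenCV's `cv2.dnn.blobFromImage` as the scale factor and the mean, in which case each pixel value is transformed as `(pixel - mean) * scale`. That mapping of arguments is an assumption, `normalize_pixel` is a hypothetical helper written only for this illustration, and the `1/127.5` scale is included purely for contrast.

```python
def normalize_pixel(pixel, scale, mean):
    """Mimic the per-pixel arithmetic of mean subtraction followed by scaling."""
    return (pixel - mean) * scale


# Scale 1.0 and mean 127.5 (the values used above): 0..255 maps to -127.5..127.5.
squeezenet_range = (normalize_pixel(0, 1.0, 127.5), normalize_pixel(255, 1.0, 127.5))
print(squeezenet_range)   # (-127.5, 127.5)

# For contrast only: a scale of 1/127.5 with the same mean maps 0..255 to roughly -1..1.
unit_range = (normalize_pixel(0, 1 / 127.5, 127.5), normalize_pixel(255, 1 / 127.5, 127.5))
print(unit_range)
```

Feeding a model inputs in a range it was not trained on typically degrades its detections, which is why these values have to match the model.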

Testing the SqueezeNet model on the same images we used for MobileNet, we got a slightly higher processing speed: about 1.5 FPS (about 0.67 seconds per frame). It looks like the SqueezeNet model is faster than MobileNet on the Raspberry Pi. With speed being the main issue, we should probably use the faster model.
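The conversion between FPS and time per frame, and the relative speed of the two models, is simple arithmetic. Here is a short sketch using the two averages measured in this article (the helper name `seconds_per_frame` is ours, introduced for illustration):

```python
def seconds_per_frame(fps):
    """Convert an average FPS value to the average processing time of one frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


mobilenet_fps = 1.25   # average measured for the MobileNet SSD
squeezenet_fps = 1.5   # average measured for the SqueezeNet SSD

print(round(seconds_per_frame(mobilenet_fps), 2))    # 0.8
print(round(seconds_per_frame(squeezenet_fps), 2))   # 0.67
print(round(squeezenet_fps / mobilenet_fps, 2))      # 1.2
```

In other words, SqueezeNet processed frames about 20 percent faster than MobileNet in this test.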

But let’s not jump to conclusions before we test the models in terms of precision. We need to compare their rates of positive detections. Why is this important here? It will become completely clear when we test person detection in real-time mode, but the obvious reason is that the higher the detection rate, the higher the probability of finding persons in a video stream.

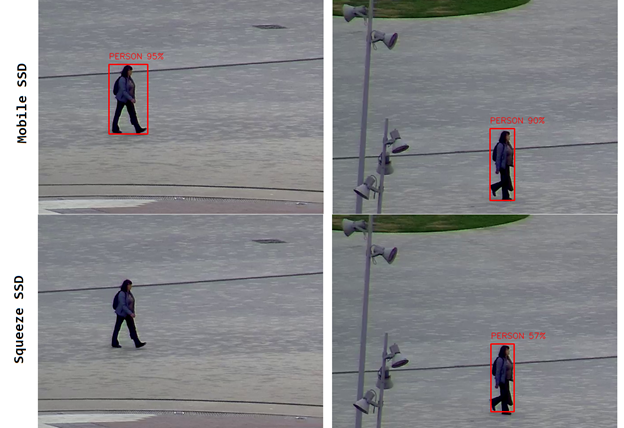

To estimate the detection rate of our models, we should run the code against many images and evaluate the number of correct detections. Here is the experiment results for two images:

As you can see, the MobileNet SSD found the person in both images with good confidence, more than 90 percent. The SqueezeNet model found the person in only one of the images, with a rather low confidence of 57 percent. So in this test, the probability of detecting a person with the SqueezeNet SSD was about half that of the MobileNet model.
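The detection rate here is the fraction of test images in which the person was found. Below is a minimal sketch that uses only the two-image outcome reported above. `detection_rate` is a hypothetical helper, and a meaningful estimate would need far more images.

```python
def detection_rate(found_flags):
    """Return the fraction of images in which a person was detected."""
    if not found_flags:
        raise ValueError("at least one result is required")
    return sum(found_flags) / len(found_flags)


# True means the model found the person in that test image (order is arbitrary).
mobilenet_results = [True, True]     # person found in both images
squeezenet_results = [True, False]   # person found in one image only

print(detection_rate(mobilenet_results))    # 1.0
print(detection_rate(squeezenet_results))   # 0.5
```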

Taking into account both experiments, the speed estimate and the accuracy evaluation, we declare the MobileNet DNN the final winner: it is slightly slower but detected the person far more reliably. We’ll do all further experiments with this model. Note that, should we change our mind, all the code could easily be adapted for the SqueezeNet model.

Next Steps

In the next article of the series, we’ll develop Python code for person detection in a video stream. It will read frames from a video file using the OpenCV library. We’ll then see how the detection algorithm works on streaming data and how to adapt it for live video.