Here we adapt the code for real-time processing and test it on a video, showing that it can detect persons in a live video stream.

This is the last article in a series of seven. By now, we have a DNN model for person detection and the Python code for launching this model on a Raspberry Pi device. The AI algorithm we’ve built using the MobileNet DNN averages about 1.25 FPS on the Pi 3B board. As we’ve shown in the previous article, this speed is sufficient for detecting a person’s appearance in the video stream from a live camera. But our algorithm is not real-time in the conventional sense.

Live video cameras in a video surveillance system typically operate at the speed of 15-30 FPS. Our algorithm, which processes frames at the rate of 1.25 FPS, is simply way too slow. What makes it slow? Let’s look at our code for a clue.

Here is a piece of the code we developed in one of the previous articles:

    fps.start()
    obj_data = self.ssd.detect(frame)
    persons = self.ssd.get_objects(frame, obj_data, class_num, min_confidence)
    fps.stop()

As you can see, we measure the speed only for the person detection operation (running the SSD model on a frame). So this is the operation which requires 0.8 seconds per frame to execute. The rest of the algorithm is fast enough to run at the speed of the real-time video systems.
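The two figures quoted so far (1.25 FPS and 0.8 seconds per frame) are the same measurement seen from two sides, and the same arithmetic shows how far the detector falls behind a live camera. The snippet below is only an illustrative sketch: the function names are hypothetical and not part of the code from the previous articles, and 25 FPS is an assumed example rate within the 15-30 FPS range mentioned above.

```python
def seconds_per_frame(fps):
    """Time spent on one frame at the given processing rate."""
    return 1.0 / fps


def frames_elapsed_per_detection(camera_fps, detector_fps):
    """Number of camera frames that arrive while one detection is running."""
    return camera_fps / detector_fps


detector_fps = 1.25  # SSD speed on the Pi 3B, as measured in this series
camera_fps = 25.0    # assumed example camera rate (15-30 FPS is typical)

print("Seconds per detection:", seconds_per_frame(detector_fps))
print("Camera frames per detection:",
      frames_elapsed_per_detection(camera_fps, detector_fps))
```

Under these assumptions, about twenty camera frames pass for every frame the SSD model manages to process, which is why running everything sequentially cannot keep up.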

Taking this into account, could we redesign our algorithm to reach the higher speed required to process real-time video streams? Let’s try.

In the current version of the algorithm, the SSD model processing, which takes about 0.8 seconds per frame, and the rest of the code, which can operate in real time, run sequentially. So the slower part defines the overall speed. The simplest way to adapt the algorithm to real-time mode is to divide it into parts and run these parts in parallel. The "fast" part will run at its real-time speed, and the "slow" part will run at 1.25 FPS. Because the parts run asynchronously, the "slow" part (the SSD model) no longer has to process every frame: it processes only the frames handed to it when it is free, and all other frames are simply displayed.

Take a look at the code below. It is a new class that implements the idea of asynchronous processing:

    import time
    from multiprocessing import Process
    from multiprocessing import Queue

    import cv2

    # CaffeModelLoader, SSD, and Utils are assumed to be the classes
    # developed in the previous articles of this series.

    def detect_in_process(proto, model, ssd_proc, frame_queue, person_queue, class_num, min_confidence):
        # Load the model inside the worker process, so that only
        # the file paths cross the process boundary
        ssd_net = CaffeModelLoader.load(proto, model)
        ssd = SSD(ssd_proc, ssd_net)

        while True:
            # Poll for a new frame and run the slow SSD detection on it
            if not frame_queue.empty():
                frame = frame_queue.get()
                obj_data = ssd.detect(frame)
                persons = ssd.get_objects(frame, obj_data, class_num, min_confidence)
                person_queue.put(persons)

    class RealtimeVideoSSD:
        def __init__(self, proto, model, ssd_proc):
            self.ssd_proc = ssd_proc
            self.proto = proto
            self.model = model

        def detect(self, video, class_num, min_confidence):
            detection_num = 0
            capture = cv2.VideoCapture(video)

            # Single-slot queues: at most one frame and one result are pending
            frame_queue = Queue(maxsize=1)
            person_queue = Queue(maxsize=1)

            # Run the detector in a background (daemon) process
            detect_proc = Process(target=detect_in_process, args=(self.proto, self.model, self.ssd_proc, frame_queue, person_queue, class_num, min_confidence))
            detect_proc.daemon = True
            detect_proc.start()

            frame_num = 0
            persons = None

            while True:
                t1 = time.time()
                (ret, frame) = capture.read()
                if frame is None:
                    break

                # Hand the frame to the detector only if its queue is free
                if frame_queue.empty():
                    print("Put into frame queue ..." + str(frame_num))
                    frame_queue.put(frame)

                # Sleep for the rest of the 40 ms interval to hold 25 FPS
                t2 = time.time()
                dt = t2 - t1
                if dt < 0.040:
                    st = 0.040 - dt
                    print("Sleep..." + str(st))
                    time.sleep(st)

                # Pick up the latest detection result, if one is ready
                if not person_queue.empty():
                    persons = person_queue.get()
                    print("Get from person queue ..." + str(len(persons)))

                # Draw the most recent detections on the current frame
                if (persons is not None) and (len(persons) > 0):
                    detection_num += len(persons)
                    Utils.draw_objects(persons, "PERSON", (0, 0, 255), frame)

                cv2.imshow('Person detection', frame)
                if cv2.waitKey(1) & 0xFF == ord('q'):
                    break
                frame_num += 1

            capture.release()
            cv2.destroyAllWindows()
            return (frame_num, detection_num)

As you can see, the detection algorithm is extracted into a separate function, detect_in_process. This function has two special arguments: frame_queue and person_queue. These queues are intended to support interaction between the process of frame capturing and the process of person detection.

Let’s take a look at the main class for real-time person detection, RealtimeVideoSSD. Its constructor receives three parameters: the prototype file describing the SSD structure, the SSD model file, and the frame processor. Note that the first two parameters are paths to the model files, not the loaded model itself. The model is loaded inside the process where it executes. This avoids transferring heavy data between the processes; we pass only lightweight strings.

The detect method of the class receives the path to a video file, the number of the person class, and the confidence threshold. First, it creates the capture for the video stream and the queues for frames and person detections. Then, it initializes an instance of the Process class with the specified parameters. One of these is the detect_in_process function, which the new process will run. The detection process runs in the background (daemon = True).

Further on, we loop over all the received frames. When we get a new frame, we look at the frame queue and, if it is empty, we put the frame into the queue. The background process looks at the queue and starts working on the frame; when done, the process puts the result into the person queue.
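The "put the frame only if the queue is free" hand-off described above can also be written without a separate emptiness check. The sketch below is a variation on the article's code rather than a copy of it: `try_submit` is a hypothetical helper, and it uses the standard `queue.Queue` so that it runs in a single process. `multiprocessing.Queue` offers the same `put_nowait` method and raises the same `queue.Full` exception, and the Python documentation notes that its `empty()` method is not reliable.

```python
import queue


def try_submit(q, item):
    """Put item into q without blocking; return False if q is already full."""
    try:
        q.put_nowait(item)
        return True
    except queue.Full:
        return False


# Single-slot queue, like frame_queue in RealtimeVideoSSD.detect
frame_queue = queue.Queue(maxsize=1)

# Offer five frame numbers in a row while nothing drains the queue:
# only the first one fits, the others are skipped.
accepted = [n for n in range(5) if try_submit(frame_queue, n)]

# The detector takes the waiting frame, which frees the slot again.
taken = frame_queue.get_nowait()
accepted_after = try_submit(frame_queue, 5)

print(accepted, taken, accepted_after)
```

Skipped frames are not lost to the viewer: the capture loop still displays every frame, and only the detector sees a subset of them.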

The frame processing loop looks at the person queue and, if there is a detection, draws it on the current frame. So a detection is drawn on whichever frame is current when the result arrives, not on the earlier frame in which the person was actually detected.

One more trick here is in the frame processing loop. Note that we measure the time taken by the capture.read method and by queueing the frame. Then we put the loop to sleep for the remainder of the frame interval to force it to slow down. In this case, we reduce its speed to about 25 FPS (0.040 seconds per frame = 1/25), which is the actual frame rate of our test video.
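The pacing step can be isolated into a small helper. This is a minimal sketch, assuming a fixed target rate: `pacing_delay` is a hypothetical name, not a function from the article's code, and the 25 FPS default is simply the frame rate of the test video.

```python
import time


def pacing_delay(elapsed, target_fps=25.0):
    """Seconds to sleep so that one loop iteration lasts 1/target_fps in total.

    Returns 0.0 when the iteration already took longer than the frame interval.
    """
    frame_interval = 1.0 / target_fps  # 0.040 s at 25 FPS
    return max(0.0, frame_interval - elapsed)


# Usage inside a capture loop (the work itself is omitted here):
t1 = time.time()
# ... read the frame and hand it to the detector ...
elapsed = time.time() - t1
time.sleep(pacing_delay(elapsed))
```

Clamping at zero means the loop never tries to sleep for a negative time when reading a frame takes longer than 40 ms.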

Now we can test the person detection in the real-time mode with the following Python code:

    if __name__ == '__main__':
        proto_file = r"/home/pi/Desktop/PI_RPD/mobilenet.prototxt"
        model_file = r"/home/pi/Desktop/PI_RPD/mobilenet.caffemodel"
        proc_frame_size = 300
        person_class = 15

        frame_proc = FrameProcessor(proc_frame_size, 1.0/127.5, 127.5)
        video_file = r"/home/pi/Desktop/PI_RPD/video/persons_4.mp4"
        video_ssd = RealtimeVideoSSD(proto_file, model_file, frame_proc)
        (frames, detections) = video_ssd.detect(video_file, person_class, 0.5)

        print("Frames count: " + str(frames))
        print("Detection count: " + str(detections))

Here is the screen video of the test run on the Pi 3B device:

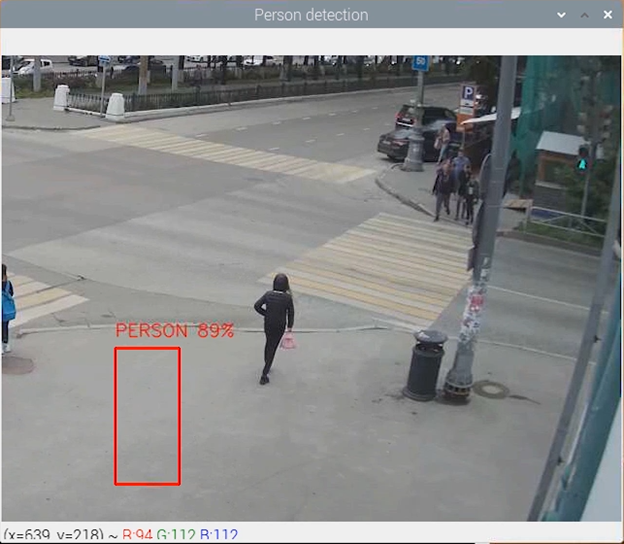

You can see that our video runs in real-time mode at 25 FPS. Person detections lag, however: the detection rectangles don’t match the people’s positions, as the picture shows.

This lag is an obvious drawback of our detection algorithm: a detection is shown at least 0.8 seconds after the frame it was computed from. As the person moves, the detection shows the person’s position in the past. Nevertheless, the algorithm is real-time: it does process the video at 25 FPS, the frame rate of the video itself. The algorithm performs the task it is intended for: it detects persons in a video stream. Since a person typically takes several seconds to walk across the camera view, the probability of detecting that person’s appearance is very high.
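The claim about detection probability can be made concrete with a rough count of how many frames the detector gets to examine while a person is in view. This is a back-of-the-envelope sketch, not a measurement: the function name is hypothetical, the 2-second and 4-second crossing times are assumed examples of "several seconds", and the detector is assumed to run continuously at 1.25 FPS.

```python
def detection_attempts(seconds_in_view, detector_fps=1.25):
    """Number of complete detection runs that fit into the time a person is visible."""
    return int(seconds_in_view * detector_fps)


for seconds in (2.0, 4.0):  # assumed example crossing times
    print(seconds, "s in view:", detection_attempts(seconds), "detection attempts")
```

Even a short crossing gives the model more than one chance to find the person, which is why a slow detector is acceptable for noticing that someone has appeared.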

In this series of articles, we used modern AI algorithms for person detection on edge devices with limited computing resources. We considered three DNN methods for object detection and selected the best model – suitable for image processing on the Raspberry Pi device. We developed the Python code for person detection using a pre-trained DNN model, and showed how to launch the code on the Raspberry Pi device. Finally, we adapted the code for real-time processing and tested it on a video, proving that it could detect persons in a live video stream.