Here we’re going to extend the pre-trained model using transfer learning to detect grumpiness in real time using data from a webcam.

Modern browsers that support HTML5 give us easy access to device APIs such as the webcam, which is especially useful when we need real-time data. In this article, we’re going to capture frames from the webcam and use transfer learning to detect grumpiness.

If you’ve been following along, the code being used in this article should seem familiar. We’re going to use the same transfer learning technique to combine the pre-trained MobileNet model with our custom real-time training data.

Setting Up

Create an HTML document and start off by importing the TensorFlow.js library along with the MobileNet and KNN classifier models.

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs/dist/tf.min.js"> </script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/mobilenet"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/knn-classifier"></script>

Instead of an image or canvas tag, we will use a video tag so that we can grab frames from the live camera feed.

<video autoplay muted id="webcam" width="224" height="224"></video>

We’ll also need "Grumpy" and "Neutral" buttons to add image stills from the live video to our training data:

<button id="grumpy">Grumpy</button>

<button id="neutral">Neutral</button>

Let’s add another tag to output our prediction on the page instead of the console.

<div id="prediction"></div>

Our final HTML file looks like this:

<html lang="en">

<head>

<meta charset="UTF-8">

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs/dist/tf.min.js"> </script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/mobilenet"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/knn-classifier"></script>

</head>

<body>

<div>

<h1>Real-Time Grumpiness Detection Using TensorFlow.js</h1>

<div style="width:100%">

<video autoplay muted id="webcam" width="224" height="224" style=" margin: auto;"></video>

</div>

<h3>How are you feeling?</h3>

<button id="grumpy">Grumpy</button>

<button id="neutral">Neutral</button>

<div id="prediction"></div>

<script src="grumpinessClassifier.js"></script>

</div>

</body>

</html>

Getting a Live Video Feed

It’s time to move to the JavaScript file where we will start off by setting up a few important variables:

let knn;

let model;

const classes = ['Grumpy', 'Neutral'];

const video = document.getElementById('webcam');

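We also need to create an instance of the KNN classifier and load the MobileNet model. Loading the model is asynchronous, so we do it inside an async function:

knn = knnClassifier.create();

async function loadKnnClassifier() {

console.log('Model is Loading..');

model = await mobilenet.load();

console.log('Model loaded successfully!');

}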

We’re now in a position to set up the webcam to get the video feed:

const setupWebcam = async () => {

return new Promise((resolve, reject) => {

const _navigator = navigator;

navigator.getUserMedia = navigator.getUserMedia ||

_navigator.webkitGetUserMedia || _navigator.mozGetUserMedia ||

_navigator.msGetUserMedia;

if (navigator.getUserMedia) {

navigator.getUserMedia({video: true},

stream => {

video.srcObject = stream;

video.addEventListener('loadeddata', () => resolve(), false);

},

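error => reject(error));

} else {

// Without this branch the promise would never settle in unsupported browsers.

reject(new Error('getUserMedia is not supported in this browser.'));

}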

});

}
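The callback-style navigator.getUserMedia used above is deprecated in favor of the promise-based navigator.mediaDevices.getUserMedia. The following is a minimal sketch of the same setup written against that newer API. The function name setupWebcamModern and its optional second parameter are assumptions made for illustration (the parameter only exists so the function can be exercised with a stand-in object outside the browser); they are not part of the original code.

```javascript
// Sketch: promise-based webcam setup using navigator.mediaDevices.getUserMedia.
// `setupWebcamModern` and the injectable `mediaDevices` argument are hypothetical
// names introduced for this example only.
async function setupWebcamModern(videoEl, mediaDevices) {
  // Fall back to the browser's own mediaDevices object when none is passed in.
  const devices = mediaDevices ||
    (typeof navigator !== 'undefined' ? navigator.mediaDevices : undefined);

  if (!devices || typeof devices.getUserMedia !== 'function') {
    throw new Error('getUserMedia is not supported in this browser.');
  }

  // Ask for a video-only stream; this rejects if the user denies permission.
  const stream = await devices.getUserMedia({ video: true });
  videoEl.srcObject = stream;

  // Wait until the first frame has loaded so the model has pixels to read.
  await new Promise(resolve => {
    videoEl.addEventListener('loadeddata', () => resolve(), { once: true });
  });

  return stream;
}

// In the browser this could be called as:
//   await setupWebcamModern(document.getElementById('webcam'));
```

Because the function is async, a denied permission or a missing API surfaces as a rejected promise that the caller can handle with try/catch.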

Using the KNN Classifier

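Now that we’re getting data from the webcam, we can use the addExample method of knn to add training examples. Each button click passes the current video frame through MobileNet to get a feature vector, then stores that vector under label 0 (Grumpy) or label 1 (Neutral).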

const addExample = label => {

const feature = model.infer(video, 'conv_preds');

knn.addExample(feature, label);

};

document.getElementById('grumpy').addEventListener('click', () => addExample(0));

document.getElementById('neutral').addEventListener('click', () => addExample(1));
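To make the idea behind the classifier more concrete, here is a toy k-nearest-neighbors sketch over plain arrays. It is only an illustration of the technique and not the knn-classifier library’s implementation: createToyKnn, normalize, the use of cosine similarity, and the choice of k = 3 are all assumptions made for this example.

```javascript
// Toy k-nearest-neighbors classifier over plain number arrays (illustration only).
// All names here are hypothetical and unrelated to the real knn-classifier package.

// Scale a vector to unit length so a dot product equals cosine similarity.
function normalize(v) {
  const norm = Math.hypot(...v) || 1;
  return v.map(x => x / norm);
}

function createToyKnn(k = 3) {
  const examples = []; // each entry: { vector, label }

  return {
    // Store a labeled feature vector, like clicking "Grumpy" or "Neutral".
    addExample(vector, label) {
      examples.push({ vector: normalize(vector), label });
    },

    // Vote among the k stored examples most similar to the query vector.
    predictClass(vector) {
      if (examples.length === 0) {
        throw new Error('No examples added yet.');
      }
      const query = normalize(vector);
      const nearest = examples
        .map(e => ({
          label: e.label,
          similarity: e.vector.reduce((sum, x, i) => sum + x * query[i], 0),
        }))
        .sort((a, b) => b.similarity - a.similarity)
        .slice(0, k);

      // Confidence of a label = share of the nearest neighbors carrying it.
      const confidences = {};
      for (const n of nearest) {
        confidences[n.label] = (confidences[n.label] || 0) + 1 / nearest.length;
      }
      const best = Object.keys(confidences)
        .reduce((a, b) => (confidences[a] >= confidences[b] ? a : b));
      return { classIndex: Number(best), confidences };
    },
  };
}

// Usage: two "grumpy" vectors (label 0) and two "neutral" vectors (label 1).
const toy = createToyKnn();
toy.addExample([1, 0], 0);
toy.addExample([0.9, 0.1], 0);
toy.addExample([0, 1], 1);
toy.addExample([0.1, 0.9], 1);
const toyResult = toy.predictClass([0.8, 0.2]);
console.log(toyResult.classIndex); // 0, because two of the three nearest neighbors are label 0
```

In the real app the vectors are MobileNet activations rather than hand-written pairs, but the voting idea is the same: the more examples you add per class, the more reliable the vote becomes.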

Putting the Code Together

Here’s the final look of our grumpinessClassifier.js file:

let knn;

let model;

knn = knnClassifier.create();

const classes = ['Grumpy', 'Neutral'];

const video = document.getElementById('webcam');

async function loadKnnClassifier() {

console.log('Model is Loading..');

model = await mobilenet.load();

console.log('Model loaded successfully!');

await setupWebcam();

const addExample = label => {

const feature = model.infer(video, 'conv_preds');

knn.addExample(feature, label);

};

document.getElementById('grumpy').addEventListener('click', () => addExample(0));

document.getElementById('neutral').addEventListener('click', () => addExample(1));

while(true) {

if (knn.getNumClasses() > 0) {

const feature = model.infer(video, 'conv_preds');

const prediction = await knn.predictClass(feature);

feature.dispose(); // free the activation tensor so the loop does not leak memory

document.getElementById('prediction').innerText = `

Predicted emotion: ${classes[prediction.classIndex]}\n

Probability of prediction: ${prediction.confidences[prediction.classIndex].toFixed(2)}

`;

}

await tf.nextFrame();

}

}

const setupWebcam = async () => {

return new Promise((resolve, reject) => {

const _navigator = navigator;

navigator.getUserMedia = navigator.getUserMedia ||

_navigator.webkitGetUserMedia || _navigator.mozGetUserMedia ||

_navigator.msGetUserMedia;

if (navigator.getUserMedia) {

navigator.getUserMedia({video: true},

stream => {

video.srcObject = stream;

video.addEventListener('loadeddata', () => resolve(), false);

},

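error => reject(error));

} else {

// Without this branch the promise would never settle in unsupported browsers.

reject(new Error('getUserMedia is not supported in this browser.'));

}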

});

}

loadKnnClassifier();
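The status text built inside the prediction loop can also be pulled out into a small helper. The sketch below assumes that the object returned by predictClass exposes a label field and a confidences map keyed by label, as recent knn-classifier releases document; if label is missing, it falls back to classIndex as the code above does. The name formatPrediction is hypothetical.

```javascript
// Sketch: build the on-page status text from a KNN prediction result.
// `formatPrediction` is a hypothetical helper; the `label` field is an
// assumption based on recent knn-classifier documentation.
function formatPrediction(prediction, classes) {
  // Prefer the label we passed to addExample (0 or 1); fall back to classIndex.
  const key = prediction.label !== undefined
    ? prediction.label
    : prediction.classIndex;
  const name = classes[Number(key)];
  const confidence = prediction.confidences[key];
  return `Predicted emotion: ${name}\n` +
    `Probability of prediction: ${confidence.toFixed(2)}`;
}

// In the prediction loop this could replace the inline template string:
//   document.getElementById('prediction').innerText =
//     formatPrediction(prediction, classes);
```

Looking the class name up by label rather than by position is a defensive choice: it keeps the output correct even if the first example added happens to be a "Neutral" one.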

Testing it Out

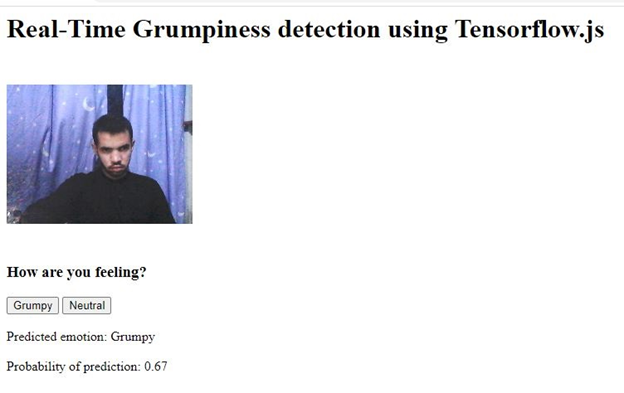

Open the file in the browser and start training the custom classifier: make a grumpy or neutral expression and click the matching button to capture it. You will start seeing results after capturing only a few images.

To get better predictions, you’ll have to feed more data to the custom classifier. Because we trained our model on a small dataset, the classifier’s accuracy will be low when other people try your app. For better results, you can feed photos of many different people into the classifier.
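One simple way to keep an eye on how much data each class has received is to show the number of stored examples per class. The sketch below assumes the counts come from the classifier’s getClassExampleCount method, which returns an object keyed by label; the helper name summarizeExampleCounts is hypothetical.

```javascript
// Sketch: turn per-label example counts into a readable summary line.
// `summarizeExampleCounts` is a hypothetical helper. `counts` is assumed to be
// an object keyed by label, e.g. the result of knn.getClassExampleCount().
function summarizeExampleCounts(counts, classes) {
  return classes
    // Labels in this app are the array indexes 0 (Grumpy) and 1 (Neutral).
    .map((name, label) => `${name}: ${counts[label] || 0}`)
    .join(', ');
}

// In the browser, after each button click:
//   console.log(summarizeExampleCounts(knn.getClassExampleCount(), classes));
```

If one class has far fewer examples than the other, capturing more frames for it is a reasonable first step before judging the classifier’s accuracy.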

What’s Next?

In this article, we learned how to extend the pre-trained MobileNet model using transfer learning to detect grumpiness on live webcam data. Our app can now recognize the user’s expressions from real-time video frames. But we still have to feed expressions to the app before we can start using it. Wouldn’t it be more convenient if we could get our app up and running without explicitly training it first?

In the next article, we will use another pre-trained model, face-api.js, to detect the expressions without doing any training ourselves.
