Here we’ll discuss pre-processing of the time series data for forecasting and anomaly detection tasks based on Bitcoin’s historical price.

Introduction

This series of articles will guide you through the steps necessary to develop a fully functional time series forecaster and anomaly detector application with AI. Our forecaster/detector will work with cryptocurrency data, specifically Bitcoin prices. However, after following along with this series, you’ll be able to apply the concepts and approaches you’ve learned to any data of a similar nature.

To fully benefit from this series, you should have some Python, Machine Learning, and Keras skills. The entire project is available in my GitHub repository. You can also check out the fully interactive notebooks here and here.

In the previous article of this series, we discussed the nature and importance of time series data. In this one, you’re going to learn how to transform the data into the form that can be used to train neural network forecasting models, as well as anomaly detectors.

We are going to work with Bitcoin prices most of the time. I’ve found a complete dataset at Kaggle. To clarify some concepts and ideas, we’ll use a weather dataset that I’ve obtained from the NOAA website. Go ahead and download these datasets to follow along. In this project, I’ll be using Kaggle Notebooks – but you can use any other Cloud notebook, or even your local machine.

Inspecting, Reformatting, and Cleaning the Bitcoin Dataset

Before diving into inspection, let’s import all the libraries that we’ll use in this project. If your notebook fails to import any of them, remember that you can install them with the pip command from the terminal.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import os

import plotly.graph_objects as go

from sklearn.preprocessing import MinMaxScaler

import gc

import joblib

from tensorflow.keras.models import Sequential

from tensorflow.keras import layers

from tensorflow.keras.callbacks import ModelCheckpoint

from tensorflow.keras.models import load_model

from sklearn.ensemble import IsolationForest

from sklearn.cluster import KMeans

import json

import urllib

from datetime import datetime, timedelta,timezone

import requests

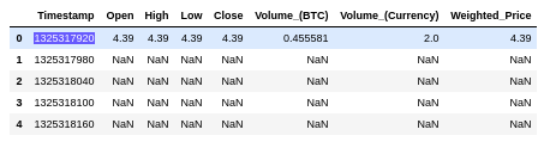

Now let’s load the .csv file that contains the Bitcoin price history from December 2011 to December 2020, sampled at one-minute intervals (around 4 million samples), and show the first rows. The file path is /kaggle/input/bitcoin-historical-data/bitstampUSD_1-min_data_2012-01-01_to_2020-1.

btc = pd.read_csv('/kaggle/input/bitcoin-historical-data/bitstampUSD_1-min_data_2012-01-01_to_2020-12-31.csv')

btc.head()

You’ll now be able to take the first look:

Notice that the "Timestamp" column, which should hold dates, is currently expressed as Unix time (seconds elapsed since January 1, 1970, UTC). To convert it, let’s do this:

btc['Timestamp'] = pd.to_datetime(btc.Timestamp, unit='s')

btc.head()

This will change the column format to the right one:
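As a small, self-contained illustration of the same conversion, here is a sketch on a toy frame. The three timestamp values are made up for the example and are not taken from the dataset; only the `pd.to_datetime(..., unit='s')` call mirrors the step above.

```python
import pandas as pd

# Toy frame with Unix-epoch seconds, mimicking the "Timestamp" column.
# The values are illustrative, one minute apart.
toy = pd.DataFrame({"Timestamp": [1325376000, 1325376060, 1325376120]})

# unit="s" tells pandas the integers are seconds since 1970-01-01 UTC.
toy["Timestamp"] = pd.to_datetime(toy["Timestamp"], unit="s")

print(toy["Timestamp"].iloc[0])  # 2012-01-01 00:00:00
print(toy["Timestamp"].diff().iloc[1])  # 0 days 00:01:00
```

If the timestamps were stored in milliseconds instead, the same call would take `unit="ms"`.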

You may see a lot of NaN values (null fields) in most columns. Let’s select the "Weighted_Price" column and take a univariate approach to keep things simple. We’re also going to resample the observations so that the points are separated by 1 hour rather than 1 minute: you wouldn’t expect to notice big variations over such short periods, so minute-level data would be largely redundant.

btc = btc[['Timestamp','Weighted_Price']]

btc = btc.resample('H', on='Timestamp')[['Weighted_Price']].mean()
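To make the effect of the resampling concrete, here is a minimal sketch on synthetic data. The frame `toy` and its values are invented for illustration. The `"60min"` frequency string is used as an equivalent of the hourly alias because it is accepted across pandas versions.

```python
import numpy as np
import pandas as pd

# Three hours of synthetic one-minute "prices": 0.0, 1.0, ..., 179.0
idx = pd.date_range("2020-01-01", periods=180, freq="min")
toy = pd.DataFrame({"Timestamp": idx,
                    "Weighted_Price": np.arange(180, dtype=float)})

# Pretend the whole second hour has no trades (all NaN).
toy.loc[60:119, "Weighted_Price"] = np.nan

# Average the minute-level values inside each one-hour bucket.
hourly = toy.resample("60min", on="Timestamp")[["Weighted_Price"]].mean()
print(hourly)
# First hour -> 29.5, second hour -> NaN, third hour -> 149.5
```

Note that `mean()` skips NaN values inside a bucket, so an hourly value is NaN only when every minute in that hour is missing. Those remaining gaps are what the fill step later in this article takes care of.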

Let’s now take a look at the entire picture to spot discontinuities in the data and to decide visually what portion of the set should be removed as meaningless:

pano = btc.copy()

fig = go.Figure()

fig.add_trace(go.Scatter(x=pano.index, y=pano['Weighted_Price'],name='Full history BTC price'))

fig.update_layout(showlegend=True,title="BTC price history",xaxis_title="Time",yaxis_title="Prices",font=dict(family="Courier New, monospace"))

fig.show()

In the above chart, you’ll notice some discontinuities from 2012 to mid-2013. Also, the data trend until mid-2017 is of little use because it’s very unlikely that the Bitcoin price will get back to values close to zero. You don’t want to feed your future models with irrelevant data, so let’s truncate our dataset to include only the meaningful values, and replace the null ones with their next non-null neighbors:

btc = btc.iloc[51000:]

btc.bfill(inplace=True)  # backward fill; fillna(method='bfill') is deprecated in recent pandas versions

print('NaN values: ',btc.isna().sum())
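As a quick illustration of what backward filling does, consider this sketch on a made-up series (the values are not from the dataset). Each gap takes the value of the next valid observation.

```python
import numpy as np
import pandas as pd

# Toy series with gaps at the start, in the middle, and at the end.
s = pd.Series([np.nan, 10.0, np.nan, np.nan, 13.0, np.nan])

# Backward fill: every NaN is replaced by the next non-null value.
filled = s.bfill()
print(filled.tolist())  # [10.0, 10.0, 13.0, 13.0, 13.0, nan]
```

A trailing NaN has no later neighbor to copy from, so it stays NaN. That is why checking the NaN count after the fill is a useful sanity check.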

Now you have a dataset that doesn’t contain any null values. The data points start from 2017-10-25 07:00:00, and your new set has 27906 values. If you plot your dataset again, you should get something like this:

Splitting the Bitcoin Dataset

As you probably know, it’s common practice to take one portion of the dataset to train a model and another one to test it. In this particular case, we need each portion to be a continuous block of time, which is why I’m going to take the first 20% of values as the test set and the last 80% as the training set. To do so, issue the following:

data_for_us = btc.copy()

training_start = int(len(btc) * 0.2)

train = btc.iloc[training_start:]

test = btc.iloc[:training_start]

Now, your training set and test set shapes are (22325, 1) and (5581, 1), respectively.
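The split can be checked on a stand-in frame of the same length. In the sketch below, `toy` is synthetic: a straight line instead of real prices, on an hourly index that starts at the date mentioned above. Only the slicing logic is taken from the article.

```python
import numpy as np
import pandas as pd

# Synthetic hourly frame with the same number of rows as the cleaned dataset.
idx = pd.date_range("2017-10-25 07:00", periods=27906, freq="60min")
toy = pd.DataFrame({"Weighted_Price": np.linspace(0.0, 1.0, len(idx))},
                   index=idx)

# Same slicing as above: earliest 20% for testing, the rest for training.
training_start = int(len(toy) * 0.2)
test = toy.iloc[:training_start]
train = toy.iloc[training_start:]

print(train.shape, test.shape)  # (22325, 1) (5581, 1)

# Both blocks are contiguous in time and do not overlap.
assert test.index.max() < train.index.min()
```

Because the slices are taken by position on a time-ordered index, no sample from one block can end up inside the other, which is the property a time series split needs.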

Scaling the Bitcoin Dataset

This step is crucial before training any machine learning/deep learning (ML/DL) model. Without it, the model will converge slowly and may never reach a good result. Typically, this happens because fitting parameters to values of very different magnitudes is resource-consuming. We can define this step as rescaling the data into a small range that makes convergence easier for any ML/DL model.

Let’s first fit the scaler by issuing:

scaler = MinMaxScaler().fit(train[['Weighted_Price']])

Note that we’re using the MinMaxScaler class from Scikit-Learn. I’ll take its description from the official documentation website: "This estimator scales and translates each feature individually such that it is in the given range on the training set, e.g. between zero and one." A mistake people often make when working with scalers is fitting them on the entire available data. That’s not an appropriate approach because information about the test set would leak into training; you must fit the scaler only on the training set. To save the scaler for future use and scale the training and test sets, issue these commands:

joblib.dump(scaler, 'scaler.gz')

scaler = joblib.load('scaler.gz')

def scale_samples(data,column_name,scaler):
    # Apply the already fitted scaler to one column and return the frame
    data[column_name] = scaler.transform(data[[column_name]])
    return data

train = scale_samples(train.copy(),train.columns[0],scaler)

test = scale_samples(test.copy(),test.columns[0],scaler)

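Now if you inspect the tables, you’ll notice that the training values lie between 0 and 1. The test values fall in the same range as long as they stay within the minimum and maximum seen during training, because the scaler was fitted on the training set only. That’s what we wanted, right?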
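To see what the scaler computes, here is a minimal sketch with made-up prices. The arrays `train_prices` and `test_prices` are hypothetical. The manual formula, (x - min) / (max - min) with the minimum and maximum taken from the training data, is the default behavior of MinMaxScaler with its (0, 1) range.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical prices; min and max are learned from the training part only.
train_prices = np.array([[100.0], [150.0], [200.0]])
test_prices = np.array([[90.0], [175.0], [220.0]])

scaler = MinMaxScaler().fit(train_prices)
scaled_test = scaler.transform(test_prices)

# Same computation by hand, using the training minimum and maximum.
t_min, t_max = train_prices.min(), train_prices.max()
manual = (test_prices - t_min) / (t_max - t_min)

print(scaled_test.ravel())  # approximately [-0.1, 0.75, 1.2]

# inverse_transform maps scaled values back to the original price scale.
restored = scaler.inverse_transform(scaled_test)
```

Values below the training minimum or above the training maximum land outside the 0 to 1 range. That is expected when the scaler is fitted only on the training set, and `inverse_transform` is what brings model predictions back to prices later on.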

Generating Sequence and Creating the Dataset

As I’ve mentioned in the previous article, we want to detect anomalies in the current and future Bitcoin prices. Let’s clarify. First of all, we need to use the past and current data to predict the future price value, and then look for anomalies in the entire data picture. Let me further clarify this graphically.

This is what you’d typically have in a sequence dataset:

Suppose that t = n is the current date and time. What we want is to predict the price value for, say, t = n+1 given the data from t = 0 to t = n, and detect anomalies in the entire window (t = 0 to t = n+1). Like this:

To achieve this, you need to segment the entire dataset into small chunks of sequences that can be passed to your model. In this case, we want to pass to our model the last 24 hours of Bitcoin prices and predict the one for the next hour. To do so, let’s define a lookback as the steps of the past window and lookforward as the steps of the future window:

In code, the function would be:

def shift_samples(data,column_name,lookback=24):
    data_x = []
    data_y = []
    for i in range(len(data) - int(lookback)):
        # Input window: `lookback` consecutive values starting at position i
        x_floats = np.array(data.iloc[i:i+lookback])
        # Target: the single value that follows the window
        y_floats = np.array(data.iloc[i+lookback])
        data_x.append(x_floats)
        data_y.append(y_floats)
    return np.array(data_x), np.array(data_y)

This will return two NumPy arrays, the first one for the lookback chunk and the second one for the lookforward chunk. Now call this function and pass the test and training sets to create the dataset that we’re going to use:

X_train, y_train = shift_samples(train[['Weighted_Price']],train.columns[0])

X_test, y_test = shift_samples(test[['Weighted_Price']], test.columns[0])

Lastly, to understand the final shape of the sets, issue these commands:

print("Final datasets' shapes:")

print('X_train: '+str(X_train.shape)+', y_train: '+str(y_train.shape))

print('X_test: '+str(X_test.shape)+', y_test: '+str(y_test.shape))

This will return:

Final datasets' shapes:

X_train: (22301, 24, 1), y_train: (22301, 1)

X_test: (5557, 24, 1), y_test: (5557, 1)
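To check how the windows line up with their targets, it helps to run the single-step function on a tiny series where every value equals its position. In the sketch below, the function is a compact copy of the one-step version, and `toy` is a made-up six-row frame.

```python
import numpy as np
import pandas as pd

def shift_samples(data, column_name, lookback=24):
    # Compact copy of the one-step function: window in, next value out.
    data_x, data_y = [], []
    for i in range(len(data) - int(lookback)):
        data_x.append(np.array(data.iloc[i:i + lookback]))
        data_y.append(np.array(data.iloc[i + lookback]))
    return np.array(data_x), np.array(data_y)

# Toy series 0.0 .. 5.0, so each value equals its row position.
toy = pd.DataFrame({"Weighted_Price": np.arange(6, dtype=float)})
X, y = shift_samples(toy, "Weighted_Price", lookback=3)

print(X.shape, y.shape)     # (3, 3, 1) (3, 1)
print(X[0].ravel(), y[0])   # [0. 1. 2.] [3.]
print(X[-1].ravel(), y[-1]) # [2. 3. 4.] [5.]
```

Six samples with a lookback of 3 yield 6 - 3 = 3 windows, which is the same arithmetic behind the shapes printed above: 22325 - 24 = 22301 and 5581 - 24 = 5557.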

If you want to increase the lookforward steps, you’ll need to slightly modify the function:

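def shift_samples(data,column_name,lookback=30,lookforward=2):
    data_x = []
    data_y = []
    # The +1 keeps the last complete lookback/lookforward window
    for i in range(len(data) - int(lookback) - int(lookforward) + 1):
        # Input window: `lookback` consecutive values starting at position i
        x_floats = np.array(data.iloc[i:i+lookback])
        # Target: the `lookforward` values that follow the window
        y_floats = np.array(data.iloc[i+lookback:i+lookback+lookforward])
        data_x.append(x_floats)
        data_y.append(y_floats)
    return np.array(data_x), np.array(data_y)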

Keep in mind that if you modify the shift_samples function and increase the lookforward steps, you’ll also need to modify the upcoming models accordingly. I’ve created a notebook where I develop such an approach. To get the full notebook interactivity, please check the one available at Kaggle.
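For long series, the Python loop can become slow. One possible alternative, which is not the approach used in this series, is NumPy's `sliding_window_view` (available from NumPy 1.20). The helper name `make_windows` below is hypothetical. The sketch takes a 1-D array and returns inputs and targets with a trailing feature axis, similar in layout to what the loop-based function returns for a one-column DataFrame.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def make_windows(values, lookback, lookforward):
    """Hypothetical helper: split a 1-D series into (past, future) windows."""
    values = np.asarray(values, dtype=float)
    # Each row holds lookback + lookforward consecutive values.
    windows = sliding_window_view(values, lookback + lookforward)
    X = windows[:, :lookback, np.newaxis]   # (n, lookback, 1)
    y = windows[:, lookback:, np.newaxis]   # (n, lookforward, 1)
    return X, y

X, y = make_windows(np.arange(10), lookback=4, lookforward=2)
print(X.shape, y.shape)                # (5, 4, 1) (5, 2, 1)
print(X[0].ravel(), y[0].ravel())      # [0. 1. 2. 3.] [4. 5.]
print(X[-1].ravel(), y[-1].ravel())    # [4. 5. 6. 7.] [8. 9.]
```

The returned arrays are read-only views into the original data, so call `.copy()` on them if later code needs to modify the windows in place.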

Next Step

In the next article, we’ll go deeper into anomaly detection on time-series data and see how to build models that can perform this task. Stay tuned!

Sergio Virahonda grew up in Venezuela, where he obtained a bachelor's degree in Telecommunications Engineering. He moved abroad 4 years ago and since then has been focused on building a meaningful data science career. He's currently living in Argentina, writing code as a freelance developer.