Here we preprocess our data by converting four videos into two facesets.

Deep fakes - the use of deep learning to swap one person's face into another in video - are one of the most interesting and frightening ways that AI is being used today.

While deep fakes can be used for legitimate purposes, they can also be used in disinformation. With the ability to easily swap someone's face into any video, can we really trust what our eyes are telling us? A real-looking video of a politician or actor doing or saying something shocking might not be real at all.

In this article series, we're going to show how deep fakes work, and show how to implement them from scratch. We'll then take a look at DeepFaceLab, which is the all-in-one Tensorflow-powered tool often used for creating convincing deep fakes.

In the previous articles we’ve been going through tons of theory but now it’s time to get into the actual code to make this project work! In this article, I’ll guide you through what’s required to convert the source (src) and destination (dst) videos into actual images ready to be fed into our autoencoders. If you’re not familiar with these terms, I encourage you to quickly read over previous articles to get some context.

The dataset is made up of four videos with Creative Commons Attribution licenses, so reusing or modifying them is allowed. This dataset contains two videos for the source individual and two for the destination individual. You can find the datasets here. The notebook I’m going to be explaining is here. I did this preprocessing stage on Kaggle Notebooks but you can easily run the project locally if you have a powerful GPU. Let’s dive into the code!

Setting up the Basics on the Notebook

Let’s start by importing the required libraries and creating the directories we’re going to use:

import cv2

import pandas as pd

import os

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

!pip install mtcnn

from mtcnn import MTCNN

!cd /kaggle/working/

!mkdir frames_1

!mkdir frames_2

!mkdir frames_3

!mkdir frames_4

!mkdir results_1

!mkdir results_2

Extracting the Video Frames

As you may recall from the first article, I mentioned that we need to convert videos to images so we can extract the faces from the individual frames to finally train our models with them. In this step, we’re going to extract the images that make up the videos using OpenCV, a computer vision library widely used in these sorts of tasks. Let’s get hands on:

Defining the paths where the videos are located and where the outputs will be saved (modify them if required):

input_1 = '/kaggle/input/presidentsdataset/presidents/trump1.mp4'

input_2 = '/kaggle/input/presidentsdataset/presidents/trump2.mp4'

input_3 = '/kaggle/input/presidentsdataset/presidents/biden1.mp4'

input_4 = '/kaggle/input/presidentsdataset/presidents/biden2.mp4'

output_1 = '/kaggle/working/frames_1/'

output_2 = '/kaggle/working/frames_2/'

output_3 = '/kaggle/working/frames_3/'

output_4 = '/kaggle/working/frames_4/'

Defining the function that we’ll use to extract the frames:

def extract_frames(input_path,output_path):

videocapture = cv2.VideoCapture(input_path)

success,image = videocapture.read()

count = 0

while success:

cv2.imwrite(output_path+"frame%d.jpg" % count, image)

success,image = videocapture.read()

count += 1

return count

Extracting the frames:

total_frames_1 = extract_frames(input_1,output_1)

total_frames_2 = extract_frames(input_2,output_2)

total_frames_3 = extract_frames(input_3,output_3)

total_frames_4 = extract_frames(input_4,output_4)

Determining how many frames were extracted per video:

print('Total frames extracted in video 1: ',total_frames_1)

print('Total frames extracted in video 2: ',total_frames_2)

print('Total frames extracted in video 3: ',total_frames_3)

print('Total frames extracted in video 4: ',total_frames_4)

Total frames extracted in video 1: 1701

Total frames extracted in video 2: 1875

Total frames extracted in video 3: 1109

Total frames extracted in video 4: 1530

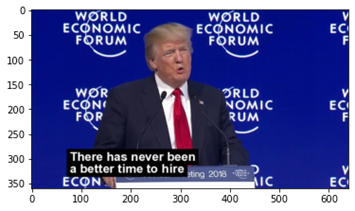

The last output means that we have 1701 and 1875 frames for the source individual, and 1109 and 1530 for the second one. That’s a good amount of images to train our models. If you want to plot one of the frames of the src individual, just run:

%matplotlib inline

plt.figure()

image = cv2.imread('/kaggle/working/frames_1/frame1.jpg')

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

image = image.astype('float32')

image /= 255.0

plt.imshow(image)

plt.show()

It will show this:

Plotting a destination individual’s frame:

%matplotlib inline

plt.figure()

image = cv2.imread('/kaggle/working/frames_3/frame1.jpg')

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

plt.imshow(image)

plt.show()

Face Detection and Extraction

Now that we’ve obtained all our frames, it’s time to extract the faces from them. To do this, we first detect where the face is and then crop it to save the image as a new file. We’ll use MTCNN detector, a Python library specially developed for face detection.

Using one of their examples, this is how its face detection looks:

We’re going to use their script as our base code to extract faces from our frames, but as you can see, the detector does not consider the whole face. The jaw, part of the forehead, and the cheekbones are not included, so we’ll take the MTCNN detection and add some extra padding to get the whole face in a square before cropping it and generating a new image. Run the following lines:

def extract_faces(source_1,source_2,destination,detector):

counter = 0

for dirname, _, filenames in os.walk(source_1):

for filename in filenames:

try:

image = cv2.imread(os.path.join(dirname, filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

detections = detector.detect_faces(image)

x, y, width, height = detections[0]['box']

x1,y1,x2,y2 = x-10,y+10,x-10 +width + 20,y+10+height

face = image[y1:y2, x1:x2]

face = cv2.resize(face, (120, 120), interpolation=cv2.INTER_LINEAR)

plt.imsave(os.path.join(destination,str(counter)+'.jpg'),face)

print('Saved: ',os.path.join(destination,str(counter)+'.jpg'))

except:

pass

counter += 1

for dirname, _, filenames in os.walk(source_2):

for filename in filenames:

try:

image = cv2.imread(os.path.join(dirname, filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

detections = detector.detect_faces(image)

x, y, width, height = detections[0]['box']

x1,y1,x2,y2 = x-10,y+10,x-10 +width + 20,y+10+height

face = image[y1:y2, x1:x2]

face = cv2.resize(face, (120, 120), interpolation=cv2.INTER_LINEAR)

plt.imsave(os.path.join(destination,str(counter)+'.jpg'),face)

print('Saved: ',os.path.join(destination,str(counter)+'.jpg'))

except:

pass

counter += 1

detector = MTCNN()

extract_faces('/kaggle/working/frames_1/','/kaggle/working/frames_2/', '/kaggle/working/results_1/',detector)

extract_faces('/kaggle/working/frames_3/','/kaggle/working/frames_4/', '/kaggle/working/results_2/',detector)

Saved: /kaggle/working/results_1/0.jpg

Saved: /kaggle/working/results_1/1.jpg

Saved: /kaggle/working/results_1/2.jpg

Saved: /kaggle/working/results_1/3.jpg

Saved: /kaggle/working/results_1/4.jpg

...

The final result should be several face images saved at results_1 and results_2, each folder containing the faces of the respective individuals. You might have noticed that I’m resizing all faces to 120x120 dimensions. This is to avoid any conflict when building the model and defining the input shape.

To plot a face and give us an idea of how it would look like extracted, run the following:

%matplotlib inline

plt.figure()

image = cv2.imread('/kaggle/working/results_1/700.jpg')

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

plt.imshow(image)

plt.show()

It will plot this:

Notice that the image shows a wider range of the individual’s face. This will be very helpful for us to swap faces later, but it will also help us reach a lower error in the models’ training. If you’re following these steps in a Kaggle Notebook like me, issue the following command to then download the files easier:

!zip -r /kaggle/working/trump_faces.zip /kaggle/working/results_1/

!zip -r /kaggle/working/biden_faces.zip /kaggle/working/results_2/

If you’re following the project on your local computer, browse to the results_1 and results_2 folders to get the resulting faceset. If you’re in Kaggle Notebooks, look for the trump_faces.zip and biden_faces.zip files in the working folder, then download them or simply use them as the input of the next notebook.

Now that we have our facesets, it’s time to build and train our models with them. We’ll cover that in the next article. I hope you see you there!

Sergio Virahonda grew up in Venezuela where obtained a bachelor's degree in Telecommunications Engineering. He moved abroad 4 years ago and since then has been focused on building meaningful data science career. He's currently living in Argentina writing code as a freelance developer.