The first step in any data analysis process is to ingest the dataset, evaluate how clean it is, and decide what we need to do to fix inherited issues.

Introduction

This article is part of the Data Cleaning with Python and Pandas series. It’s aimed at getting developers up and running quickly with data science tools and techniques.

If you’d like to check out the other articles in the series, you can find them here:

Once we have a Jupyter Notebook set up and some basic libraries initialized, we need to load some data. To do this, we’ll load data from a CSV file, as well as from a local SQLite database. We’ll then show some of the basic analytics and functions available in Pandas that you can use to display and perform basic analysis on the data.

We've created a complete Jupyter Notebook with the source data files for this series of modules, which you can download and install locally.

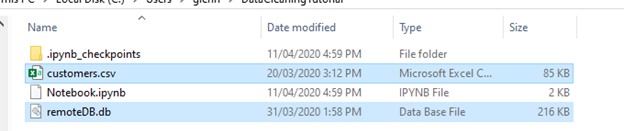

Before we get started, make sure the customers.csv file and the remoteDB.db that goes along with this series are copied into the same directory as the Notebook we saved.

Loading Data from CSV

Let's have a look at loading data into Pandas from a CSV file first. The customers.csv file we added to the same directory as the notebook will be loaded to keep things simple, but you could also load it remotely as well. Add a new input line to your notebook containing the following command:

pd.read_csv("customers.csv")

This command uses the Pandas library to load and display our customers.csv file.

Based on the lines displayed, you’ll immediately see some potential issues with the data, displayed as a NaN. You’ll also see the output generated as a table.

In Pandas, a table is referred to as a DataFrame, which is a two-dimensional data structure similar to a spreadsheet.

Let's move this into a variable and display the first five rows by modifying the code line to:

# Read data from the CSV files

customers = pd.read_csv("customers.csv")

print(customers.head(5))

(Note that here and throughout the article, you’ll see the results of running the code immediately following the code.)

This will read our CSV into a variable and display the first five rows of our data structure.

Loading Data from SQLite

Now let’s load some additional data into Pandas from a SQLite database. We’ll use the sqlite3 library to load and read from the database. You can use a similar process with regular databases as well as with different Python libraries, but SQLite is serverless and requires only the single database file we copied in earlier. Let’s access this file as a database.

To start, we’ll modify our first code block to include another Python library:

import sqlite3 as sql

Then, below where we read customers from the CSV files, add the following code:

con = sql.connect("remoteDB.db")

products = pd.read_sql_query("SELECT * from products", con)

purchases = pd.read_sql_query("SELECT * from purchases", con)

con.close()

print(products.head(5))

print(purchases.head(5))

If we step through this code, the first line connects to the database file, remoteDB.db. The next two lines use Pandas to create a DataFrame from the return of each SQL query. In this example, there are two tables, "products" and "purchases". Finally, the last line in this block closes the connection to the SQL database.

We then print a copy of the first five lines of each variable. The heads of these two data sets look pretty good, so we’ll have to see if there are any further issues with them later.

Basic Data Analysis

Before moving on, let's do a quick, basic analysis of our datasets. Create a new code block and enter the following code:

print(customers.shape)

print(customers.dtypes)

print(customers.describe(include="all"))

Here are some results:

For each of the three tables, this code will produce some basic statistics for the data we loaded.

- The

shape method shows the number of rows and columns for each data frame. - The

dtypes method shows the types of data involved. - The

describe method (with the include="all" modifier) shows statistical information about the data, such as a count of valid values, the number of unique values, the frequency of the values, and so on.

Notice that some of the counts don't match the totals. This is because of missing information in the cells, which we’ll need to find and correct. Our dataset includes customer and product information, with purchases, over the course of a few months, so there’s a lot of different information this defines for us.

Summary

We loaded data from our CSV file and our SQL server using the Pandas DataFrame data structure. We also took a quick look at some basic analysis functions that showed handy information about our datasets.

Now, let’s move on to data cleaning by exploring some of the options for finding and dealing with missing values.

Hi! I'm a Solution Architect, planning and designing systems based in Denver, Colorado. I also occasionally develop web applications and games, as well as write. My blog has articles, tutorials and general thoughts based on more than twenty years of misadventures in IT.