Here we add a trained model to an Android project and create a user interface for passing images into it.

In the previous entry of this series, we created a project that will be used for real-time hazard detection for a driver and prepared a detection model for use in TensorFlow Lite. Here, we will continue with loading the model and preparing it for image processing.

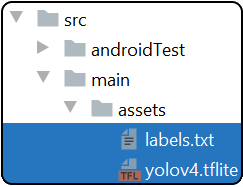

To add the model to the project, create a new folder named assets in src/main. Copy the TensorFlow Lite model and the text file containing the labels into src/main/assets to make them part of the project.

To make use of the model, we must write code that will load it and pass data through it. The detection code will be placed in a class that can be shared by two user interfaces so that the same code can be used on static images (used for testing) and on the live video stream.

Formatting Our Data for the Model

Before we start writing code for this, we need to know how the model expects its input data to be structured. The data is passed in and out as multidimensional arrays, and the dimensions of those arrays are called the “shape” of the data. Generally, when you find a model, this information will be documented.

You can also inspect a model with the Netron tool. When a model is opened in Netron, the nodes that make up the network are displayed. Clicking on the input node (displayed at the top of the graph) shows the format of the input data (in this case, an image) and of the network's output. Here, we see that the input data is an array of 32-bit floating point numbers with dimensions 1x416x416x3. This means that the network accepts one image at a time, 416 by 416 pixels, with red, green, and blue components. If you were to use a different model for this project, you would need to examine the input and output for that model and adjust the code accordingly. We will examine the output data in more detail when interpreting the results.
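
To make the shape concrete, here is a minimal sketch in plain Kotlin that works out how many values and how many bytes a 1x416x416x3 array of 32-bit floats holds. The helper names elementCount and byteSize are made up for this illustration and are not part of TensorFlow Lite.

```kotlin
// Number of values in an array with the given shape (product of all dimensions)
fun elementCount(shape: IntArray): Int = shape.fold(1) { acc, dim -> acc * dim }

// Size in bytes, assuming 4 bytes per element (32-bit float)
fun byteSize(shape: IntArray, bytesPerElement: Int = 4): Int =
    elementCount(shape) * bytesPerElement

fun main() {
    // Shape shown by Netron for this model: batch, height, width, color channels
    val inputShape = intArrayOf(1, 416, 416, 3)
    println(elementCount(inputShape)) // 519168
    println(byteSize(inputShape))     // 2076672
}
```

An input buffer for this model therefore holds about 2 MB of float data per image.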

Add a new class to the project named Detector. All of the code for managing the trained network will be added to this class. Once the class is complete, it will accept an image and provide the results in a format that is easier to work with. There are a few constants and fields we should add to the class to start working with it. The fields include a TensorFlow Lite Interpreter object to contain the trained network, a list of the classes of objects that the model recognizes, and the application context.

    import android.content.Context
    import org.tensorflow.lite.Interpreter
    import java.util.Vector

    class Detector(val context: Context) {
        val TF_MODEL_NAME = "yolov4.tflite"
        val IMAGE_WIDTH = 416
        val IMAGE_HEIGHT = 416
        val TAG = "Detector"
        val useGpuDelegate = false
        val useNNAPI = true

        // Holds the trained network once it has been loaded
        lateinit var tfLiteInterpreter: Interpreter
        // Names of the object classes that the model recognizes
        var labelList = Vector<String>()

        // These output buffers are structured to match the output of the trained model being used
        var buf0 = Array(1) { Array(52) { Array(52) { Array(3) { FloatArray(85) } } } }
        var buf1 = Array(1) { Array(26) { Array(26) { Array(3) { FloatArray(85) } } } }
        var buf2 = Array(1) { Array(13) { Array(13) { Array(3) { FloatArray(85) } } } }
        var outputBuffers: HashMap<Int, Any>? = null
    }
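
The three output buffers differ only in their grid size. The sketch below, in plain Kotlin, shows one way the same shapes could be allocated by a helper. The function names are hypothetical, and the strides of 8, 16, and 32 are an assumption that happens to reproduce the 52, 26, and 13 grids for a 416-pixel input. Confirm the actual output shapes of your model in Netron.

```kotlin
// Allocates a zero-filled buffer of shape [1, grid, grid, perCell, values].
// The defaults 3 and 85 are copied from the buffer declarations in the class.
fun makeOutputBuffer(grid: Int, perCell: Int = 3, values: Int = 85) =
    Array(1) { Array(grid) { Array(grid) { Array(perCell) { FloatArray(values) } } } }

// Grid sizes for a square input, ASSUMING downsampling strides of 8, 16, and 32
fun gridSizes(inputSize: Int, strides: List<Int> = listOf(8, 16, 32)): List<Int> =
    strides.map { inputSize / it }

fun main() {
    val grids = gridSizes(416)
    println(grids) // [52, 26, 13]
    val buffers = grids.map { makeOutputBuffer(it) }
    println(buffers[0][0].size) // 52
}
```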

The constructor for this class will create the output buffers, load the network model, and load the names of the object classes from the assets folder.
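
A constructor along those lines might look like the sketch below. It is not the original listing. It assumes the TensorFlow Lite Support Library (for FileUtil) and the GPU delegate library are project dependencies. The label file name labels.txt is a placeholder, and mapping output indices 0, 1, and 2 to buf0, buf1, and buf2 is an assumption to verify against the model.

```kotlin
import android.content.Context
import org.tensorflow.lite.Interpreter
import org.tensorflow.lite.gpu.GpuDelegate
import org.tensorflow.lite.support.common.FileUtil
import java.util.Vector

class Detector(val context: Context) {
    val TF_MODEL_NAME = "yolov4.tflite"
    // Hypothetical name: use the name of the label file you copied into assets
    val LABEL_FILE_NAME = "labels.txt"
    val useGpuDelegate = false
    val useNNAPI = true

    lateinit var tfLiteInterpreter: Interpreter
    var labelList = Vector<String>()

    var buf0 = Array(1) { Array(52) { Array(52) { Array(3) { FloatArray(85) } } } }
    var buf1 = Array(1) { Array(26) { Array(26) { Array(3) { FloatArray(85) } } } }
    var buf2 = Array(1) { Array(13) { Array(13) { Array(3) { FloatArray(85) } } } }
    var outputBuffers: HashMap<Int, Any>? = null

    init {
        // Map each output tensor index to the buffer that receives its data.
        // ASSUMPTION: outputs 0, 1, 2 correspond to buf0, buf1, buf2.
        outputBuffers = hashMapOf<Int, Any>(0 to buf0, 1 to buf1, 2 to buf2)

        // Configure hardware acceleration from the two flags
        val options = Interpreter.Options()
        if (useGpuDelegate) options.addDelegate(GpuDelegate())
        if (useNNAPI) options.setUseNNAPI(true)

        // Memory-map the model from the assets folder and create the interpreter
        val modelBuffer = FileUtil.loadMappedFile(context, TF_MODEL_NAME)
        tfLiteInterpreter = Interpreter(modelBuffer, options)

        // Read the class names, one per line, from the label file
        labelList.addAll(FileUtil.loadLabels(context, LABEL_FILE_NAME))
    }
}
```

Doing this work once at construction keeps it out of the per-image path, so each image only pays for resizing and inference.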

Testing Out the Model

It only takes a few lines of code to execute the network model. When an image is supplied to the Detector class, it will be resized to match the requirements for the network. The data in Bitmap images is encoded as bytes. The values must be converted to 32-bit float values. The TensorFlow Lite libraries contain functionality to make common conversions like this easy. The TensorImage type also has a convenient method that allows it to be used as a buffer for methods that require input buffers.

    fun processImage(sourceImage: Bitmap) {
        // Resize the image to the dimensions the model expects
        val imageProcessor = ImageProcessor.Builder()
            .add(ResizeOp(IMAGE_HEIGHT, IMAGE_WIDTH, ResizeOp.ResizeMethod.BILINEAR))
            .build()
        // Load the bitmap into a tensor image that exposes its pixels as 32-bit floats
        var tImage = TensorImage(DataType.FLOAT32)
        tImage.load(sourceImage)
        tImage = imageProcessor.process(tImage)
        // Run the network; the results are written into the output buffers
        tfLiteInterpreter.runForMultipleInputsOutputs(arrayOf<Any>(tImage.buffer), outputBuffers!!)
    }
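
To show what the byte-to-float conversion amounts to, here is a small plain-Kotlin sketch that unpacks one packed ARGB pixel into three float values. It is only a conceptual illustration with a made-up function name. In the project itself, TensorImage performs the conversion.

```kotlin
// Unpacks a packed ARGB pixel (the Int format Android uses for Bitmap pixels)
// into red, green, and blue floats in the range 0..255. Alpha is discarded.
fun pixelToFloats(argb: Int): FloatArray {
    val r = (argb shr 16) and 0xFF
    val g = (argb shr 8) and 0xFF
    val b = argb and 0xFF
    return floatArrayOf(r.toFloat(), g.toFloat(), b.toFloat())
}

fun main() {
    // Opaque pixel with red = 0x10, green = 0x20, blue = 0x30
    val pixel = 0xFF102030.toInt()
    println(pixelToFloats(pixel).toList()) // [16.0, 32.0, 48.0]
}
```

Repeating this for every pixel of a 416 by 416 image yields the 416 x 416 x 3 float values that the input tensor holds.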

To test this, add a new layout to the project. The layout will have a simple interface that allows an image from the device to be selected. The selected image will be processed by the detector.

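    <?xml version="1.0" encoding="utf-8"?>
    <androidx.constraintlayout.widget.ConstraintLayout
        xmlns:android="http://schemas.android.com/apk/res/android">
        <!-- Size and constraint attributes are omitted from this listing -->
        <ImageView
            android:id="@+id/selected_image_view"
            />
        <Button
            android:id="@+id/select_image_button"
            android:onClick="onSelectImageClicked"
            />
    </androidx.constraintlayout.widget.ConstraintLayout>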

The code for this activity opens the system image chooser. When an image is selected and passed back to the application, it passes the image to the detector.

    public override fun onActivityResult(reqCode: Int, resultCode: Int, data: Intent?) {
        super.onActivityResult(reqCode, resultCode, data)
        if (resultCode == RESULT_OK && reqCode == SELECT_PICTURE) {
            // Ignore results that do not carry an image URI
            val selectedUri = data?.data ?: return
            selected_image_view.setImageURI(selectedUri)
            try {
                // Decode the selected image and pass it to the detector
                val sourceBitmap = MediaStore.Images.Media.getBitmap(this.contentResolver, selectedUri)
                RunDetector(sourceBitmap)
            } catch (e: IOException) {
                e.printStackTrace()
            }
        }
    }

    fun RunDetector(bitmap: Bitmap?) {
        // Create the detector the first time it is needed
        if (detector == null) detector = Detector(this)
        detector!!.processImage(bitmap!!)
    }
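
The click handler that opens the image chooser is not part of the listing. A minimal sketch of one possible version follows. The activity name ImageSelectActivity, the value of SELECT_PICTURE, and the chooser title are placeholders chosen for illustration. Only the handler name onSelectImageClicked comes from the layout.

```kotlin
import android.content.Intent
import android.view.View
import androidx.appcompat.app.AppCompatActivity

// Hypothetical activity name; only the chooser-related members are shown
class ImageSelectActivity : AppCompatActivity() {

    // Hypothetical request code; onActivityResult must compare against the same value
    private val SELECT_PICTURE = 1

    // Called through android:onClick on the button in the layout
    fun onSelectImageClicked(view: View) {
        // Ask the system for any app that can supply an image
        val intent = Intent(Intent.ACTION_GET_CONTENT).apply { type = "image/*" }
        startActivityForResult(Intent.createChooser(intent, "Select Picture"), SELECT_PICTURE)
    }
}
```

startActivityForResult is used here because it pairs with the onActivityResult override. Newer AndroidX code would typically use the Activity Result API instead.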

Result of the UI Layout

We can now select an image and the detector will process the image, recognizing objects within it. But what do the results mean? How do we use these results to warn the user about hazards? In the next entry of this series, we will interpret the results and provide the relevant information to the user.