Here we look at setting up TensorFlow.js, creating training data, defining the neural network model, and training the AI.

TensorFlow + JavaScript. One of the most popular, cutting-edge AI frameworks now supports the most widely used programming language on the planet, so let’s make magic happen through deep learning right in our web browser, GPU-accelerated via WebGL, using TensorFlow.js!

In this article, I will show you how to quickly and easily set up and use TensorFlow.js to train a neural network to make predictions from data points. You can find the code for this project along with other examples by clicking 'Browse Code' in the menu to the left or downloading the .zip file linked above.

Setting Up TensorFlow.js

The first step is to create an HTML web page file, such as index.html, and include the TensorFlow.js script inside the <head> tag. The script makes the library available through the global tf object.

```html
<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>
```

Here is a starter template page we can use for our projects, with a section reserved for our code:

```html
<html>
  <head>
    <title>Deep Learning in Your Browser with TensorFlow.js</title>
    <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>
  </head>
  <body>
    <script>
      (async () => {

      })();
    </script>
  </body>
</html>
```

All of the code discussed in this article will be placed inside the async wrapper section of the web page.

To run the code, open the above web page in any modern browser, then open the browser's developer console to see the log output (pressing F12 does the trick in most browsers).

The async wrapper lets us call TensorFlow’s asynchronous functions with the await keyword instead of writing chains of .then() calls. It should be fairly straightforward; however, if you would like to get more familiar with this pattern, I recommend reading this guide.
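If the async/await pattern is new to you, here is a minimal plain-JavaScript sketch that compares it with a .then() chain. The slowDouble() function is a hypothetical stand-in for an asynchronous TensorFlow.js call such as model.fit() or data(). It is not part of the library.

```javascript
// Hypothetical stand-in for an asynchronous call: resolves with n * 2 after a short delay.
function slowDouble(n) {
  return new Promise(resolve => setTimeout(() => resolve(n * 2), 10));
}

// Promise-chain style: each step lives inside a .then() callback.
function withThen() {
  return slowDouble(1)
    .then(a => slowDouble(a))
    .then(b => b + 1);
}

// async/await style: the same steps read from top to bottom.
async function withAwait() {
  const a = await slowDouble(1);
  const b = await slowDouble(a);
  return b + 1;
}

// The same wrapper shape as in the template: an async arrow function that is
// called immediately, so `await` can be used inside a classic <script> tag.
(async () => {
  console.log(await withThen());  // 5
  console.log(await withAwait()); // 5
})();
```

Both versions resolve to 5. The await version simply reads more like ordinary sequential code, which is why the tutorial's code lives inside an async function.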

Creating Training Data

We are going to replicate an XOR boolean logic gate, which takes two choices and outputs 1 only when one of them is selected, but not both.

As a table, it looks like this:

| A | B | Output |
|---|---|--------|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

In code, we can set it up as two arrays of numbers, one for our two input choices and one for our expected output:

```javascript
const data = [ [ 0, 0 ], [ 0, 1 ], [ 1, 0 ], [ 1, 1 ] ];
const output = [ [ 0 ], [ 1 ], [ 1 ], [ 0 ] ];
```
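As a quick sanity check, the expected outputs can be derived from the inputs with JavaScript's bitwise XOR operator (^). This small sketch only verifies the table. The tutorial itself keeps the hard-coded output array.

```javascript
// Every combination of two binary inputs, in the same order as the table.
const data = [ [ 0, 0 ], [ 0, 1 ], [ 1, 0 ], [ 1, 1 ] ];

// The bitwise XOR operator (^) returns 1 when exactly one of the two bits is 1.
const derived = data.map( ( [ a, b ] ) => [ a ^ b ] );

console.log( JSON.stringify( derived ) ); // [[0],[1],[1],[0]]
```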

Next, we need to convert the values into data "tensors" to prepare them for TensorFlow. We can do that by creating a 1-dimensional tensor for each row and stacking them into a single 2-dimensional tensor with tf.stack(), like this:

```javascript
const xs = tf.stack( data.map( a => tf.tensor1d( a ) ) );
const ys = tf.stack( output.map( a => tf.tensor1d( a ) ) );
```

Alternative: Here is a for-loop version if you aren’t familiar with the map() function.

```javascript
const dataTensors = [];
const outputTensors = [];

for( let i = 0; i < data.length; i++ ) {
  dataTensors.push( tf.tensor1d( data[ i ] ) );
  outputTensors.push( tf.tensor1d( output[ i ] ) );
}

const xs = tf.stack( dataTensors );
const ys = tf.stack( outputTensors );
```
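Stacking four 1-dimensional tensors of length 2 produces one 2-dimensional tensor with shape [4, 2] (four examples, two inputs each). By the same logic, ys ends up with shape [4, 1]. In TensorFlow.js you can confirm this by logging xs.shape. The plain-JavaScript helper below computes the same shapes from the nested arrays. Its name, shapeOf(), is hypothetical and is not a TensorFlow.js function, and it assumes the arrays are rectangular.

```javascript
// Hypothetical helper: returns the shape of a rectangular nested array by
// walking down the first element at each level.
function shapeOf( value ) {
  const shape = [];
  while ( Array.isArray( value ) ) {
    shape.push( value.length );
    value = value[ 0 ];
  }
  return shape;
}

const data = [ [ 0, 0 ], [ 0, 1 ], [ 1, 0 ], [ 1, 1 ] ];
const output = [ [ 0 ], [ 1 ], [ 1 ], [ 0 ] ];

console.log( JSON.stringify( shapeOf( data ) ) );   // [4,2]
console.log( JSON.stringify( shapeOf( output ) ) ); // [4,1]
```

The second dimension of xs (2) is the value the model's inputShape will need to match.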

Checkpoint

Your code should now look similar to this:

```html
<html>
  <head>
    <title>Deep Learning in Your Browser with TensorFlow.js</title>
    <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>
  </head>
  <body>
    <script>
      (async () => {
        const data = [ [ 0, 0 ], [ 0, 1 ], [ 1, 0 ], [ 1, 1 ] ];
        const output = [ [ 0 ], [ 1 ], [ 1 ], [ 0 ] ];

        const xs = tf.stack( data.map( a => tf.tensor1d( a ) ) );
        const ys = tf.stack( output.map( a => tf.tensor1d( a ) ) );
      })();
    </script>
  </body>
</html>
```

Defining the Neural Network Model

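Now let’s set up our network for some deep learning. "Deep" refers to the depth of the network, meaning the number of "hidden layers" between the input and the output. There is no strict cutoff, but networks with several hidden layers, like the three in the model below, are commonly called deep. Let’s build one.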

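```javascript
const model = tf.sequential();

// Hidden layer 1: 100 units. No activation is given, so it is linear.
model.add(tf.layers.dense( { units: 100, inputShape: [ 2 ] } ) );
// Hidden layer 2: 100 units with relu.
model.add(tf.layers.dense( { units: 100, activation: 'relu' } ) );
// Hidden layer 3: 10 units with relu.
model.add(tf.layers.dense( { units: 10, activation: 'relu' } ) );
// Output layer: a single number.
model.add(tf.layers.dense( { units: 1 } ) );

// Print each layer's output shape and parameter count to the console.
model.summary();
```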

We are defining our model sequentially, as a stack of layers where each layer feeds into the next. To match our data, our neural net needs to take an input shape of [2] (two numbers per example) and output a single number.
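The model.summary() call prints each layer's output shape and number of trainable parameters. As a rough check, a dense layer with n inputs and m units has an n × m weight matrix plus m biases. This assumes the default of useBias: true. Under that assumption, the counts can be reproduced in plain JavaScript:

```javascript
// Layer sizes from the model above: 2 inputs, then dense layers of 100, 100, 10 and 1 units.
const sizes = [ 2, 100, 100, 10, 1 ];

// A dense layer has one weight per (input, unit) pair plus one bias per unit.
function denseParams( inputs, units ) {
  return inputs * units + units;
}

let total = 0;
for ( let i = 1; i < sizes.length; i++ ) {
  const params = denseParams( sizes[ i - 1 ], sizes[ i ] );
  total += params;
  console.log( `dense ${ i }: ${ sizes[ i - 1 ] } -> ${ sizes[ i ] }, ${ params } params` );
}
console.log( `total: ${ total }` ); // total: 11421
```

This gives 300, 10100, 1010, and 11 parameters for the four layers, or 11,421 in total. The summary should report the same numbers.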

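The first two hidden layers have 100 nodes, or units, and the third one has 10. The first layer does not specify an activation function, so TensorFlow.js uses a linear one; the second and third layers use relu (Rectified Linear Unit). Because relu tends to train quickly and performs well in many cases, it’s a good default choice here. The output layer is also linear, so it can produce any number as its prediction.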
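For reference, relu itself is a very simple function: it returns its input when the input is positive and 0 otherwise. This nonlinearity matters for XOR. Without it, a stack of dense layers collapses into a single linear function of the inputs, and no linear function of two inputs can reproduce the XOR table. A plain-JavaScript sketch:

```javascript
// ReLU passes positive values through unchanged and clamps everything else to zero.
const relu = x => Math.max( 0, x );

console.log( [ -2, -0.5, 0, 0.5, 2 ].map( relu ).join( ", " ) ); // 0, 0, 0, 0.5, 2
```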

Training the AI

There’s just one step left, and that’s to train our neural network on our data. In TensorFlow, this means that we simply compile our model and then fit it to our data for a specified number of "epochs." One epoch is one full pass over the training data.

```javascript
model.compile( { loss: 'meanSquaredError', optimizer: "adam", metrics: [ "acc" ] } );

// Train the model using the data.
let result = await model.fit( xs, ys, {
  epochs: 100,
  shuffle: true,
  callbacks: {
    onEpochEnd: ( epoch, logs ) => {
      console.log( "Epoch #", epoch, logs );
    }
  }
} );
```

Just as we chose relu as a sensible default activation function, we use the built-in meanSquaredError loss function and the adam optimizer, which work well in many scenarios. The "acc" metric also reports accuracy in the logs after each epoch.
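Mean squared error averages the squared differences between the expected and predicted values, so predictions close to the XOR targets give a loss near 0. Below is a plain-JavaScript sketch of the formula. TensorFlow.js computes the same thing on tensors. The predicted values in the example are made up for illustration and are not output from the model.

```javascript
// Mean squared error: the average of (expected - predicted)^2 over all examples.
function meanSquaredError( expected, predicted ) {
  let sum = 0;
  for ( let i = 0; i < expected.length; i++ ) {
    const diff = expected[ i ] - predicted[ i ];
    sum += diff * diff;
  }
  return sum / expected.length;
}

// Hypothetical predictions for the four XOR examples.
console.log( meanSquaredError( [ 0, 1, 1, 0 ], [ 0.1, 0.9, 0.8, 0.2 ] ) ); // about 0.025
```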

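Because the order of our XOR examples carries no meaning (unlike, for example, time-series data such as temperature over time), we enable the shuffle option so the training data is shuffled before each epoch, and we train for 100 epochs. With only four examples and TensorFlow.js's default batch size of 32, all four examples fall into a single batch, so shuffling makes little difference here. It becomes important with larger datasets.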
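To make "epochs" and "shuffle" concrete, here is a toy plain-JavaScript training loop. It is a sketch under simplifying assumptions, not what TensorFlow.js does internally:

- It fits a straight line y = w * x + b to made-up points.
- It uses plain stochastic gradient descent instead of Adam.
- It updates after every single example (a batch size of 1).
- It reshuffles the (input, output) pairs with a Fisher-Yates shuffle at the start of every epoch.

```javascript
// Fisher-Yates shuffle: returns a new array with the same items in random order.
function shuffled( items ) {
  const copy = items.slice();
  for ( let i = copy.length - 1; i > 0; i-- ) {
    const j = Math.floor( Math.random() * ( i + 1 ) );
    [ copy[ i ], copy[ j ] ] = [ copy[ j ], copy[ i ] ];
  }
  return copy;
}

// Fit y = w * x + b with plain stochastic gradient descent (batch size 1).
function train( samples, epochs, learningRate ) {
  let w = 0;
  let b = 0;
  for ( let epoch = 0; epoch < epochs; epoch++ ) {
    // Shuffle (input, output) pairs together so each input keeps its label.
    for ( const [ x, y ] of shuffled( samples ) ) {
      const error = w * x + b - y;           // prediction minus target
      w -= learningRate * 2 * error * x;     // d(error^2)/dw = 2 * error * x
      b -= learningRate * 2 * error;         // d(error^2)/db = 2 * error
    }
  }
  return { w, b };
}

// Toy data lying on the line y = 2x + 1 (illustrative only).
const samples = [ [ 0, 1 ], [ 1, 3 ], [ 2, 5 ], [ 3, 7 ] ];
const { w, b } = train( samples, 500, 0.02 );
console.log( w.toFixed( 3 ), b.toFixed( 3 ) ); // 2.000 1.000
```

After 500 epochs, w and b settle very close to 2 and 1, the slope and intercept of the line the points were taken from. Shuffling the pairs together, rather than shuffling the inputs and outputs separately, keeps each input matched with its expected output.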

Testing the Result

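It’s finally time to use our trained neural network. All we need to do is run the model’s predict() function on each input and print the prediction alongside the expected output. Because predict() returns a tensor, we read its values with the asynchronous data() method.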

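```javascript
for( let i = 0; i < data.length; i++ ) {
  let x = data[ i ];
  // predict() expects a batch, so wrap the single input in a [1, 2] tensor.
  let result = model.predict( tf.stack( [ tf.tensor1d( x ) ] ) );
  // data() asynchronously reads the tensor's values into a typed array.
  let prediction = await result.data();
  console.log( x[ 0 ] + ", " + x[ 1 ] + " -> Expected: " + output[ i ][ 0 ] + " Predicted: " + prediction[ 0 ] );
}
```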
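The model outputs raw numbers rather than exact 0s and 1s, so the predictions will be close to the expected values but not exactly equal to them. One simple way to score them is to round each prediction at a 0.5 threshold and count the matches, sketched here in plain JavaScript. The threshold and the sample prediction values are assumptions for illustration, not real output from the model above.

```javascript
// Turn a raw prediction into a 0/1 answer using a 0.5 threshold (an assumption).
const toBit = p => ( p >= 0.5 ? 1 : 0 );

// Count how many thresholded predictions match the expected outputs.
function countCorrect( expected, predicted ) {
  let correct = 0;
  for ( let i = 0; i < expected.length; i++ ) {
    if ( toBit( predicted[ i ] ) === expected[ i ] ) correct++;
  }
  return correct;
}

// Hypothetical raw predictions, for illustration only.
console.log( countCorrect( [ 0, 1, 1, 0 ], [ 0.03, 0.97, 0.95, 0.06 ] ) ); // 4
```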

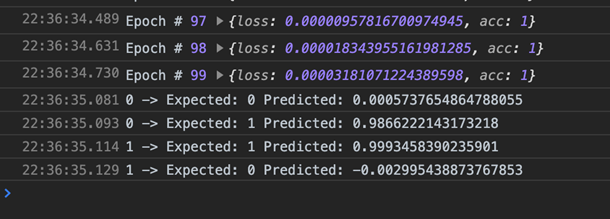

After the training is complete, the browser debugger console will show an output like this:

Finish Line

Here is the full script code:

```html
<html>
  <head>
    <title>Deep Learning in Your Browser with TensorFlow.js</title>
    <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>
  </head>
  <body>
    <script>
      (async () => {
        // XOR training data: input pairs and their expected outputs.
        const data = [ [ 0, 0 ], [ 0, 1 ], [ 1, 0 ], [ 1, 1 ] ];
        const output = [ [ 0 ], [ 1 ], [ 1 ], [ 0 ] ];

        const xs = tf.stack( data.map( a => tf.tensor1d( a ) ) );
        const ys = tf.stack( output.map( a => tf.tensor1d( a ) ) );

        // Three hidden layers followed by a single-unit output layer.
        const model = tf.sequential();
        model.add(tf.layers.dense( { units: 100, inputShape: [ 2 ] } ) );
        model.add(tf.layers.dense( { units: 100, activation: 'relu' } ) );
        model.add(tf.layers.dense( { units: 10, activation: 'relu' } ) );
        model.add(tf.layers.dense( { units: 1 } ) );
        model.summary();

        model.compile( { loss: 'meanSquaredError', optimizer: "adam", metrics: [ "acc" ] } );

        let result = await model.fit( xs, ys, {
          epochs: 100,
          shuffle: true,
          callbacks: {
            onEpochEnd: ( epoch, logs ) => {
              console.log( "Epoch #", epoch, logs );
            }
          }
        } );

        // Print each input alongside its expected and predicted output.
        for( let i = 0; i < data.length; i++ ) {
          let x = data[ i ];
          let result = model.predict( tf.stack( [ tf.tensor1d( x ) ] ) );
          let prediction = await result.data();
          console.log( x[ 0 ] + ", " + x[ 1 ] + " -> Expected: " + output[ i ][ 0 ] + " Predicted: " + prediction[ 0 ] );
        }
      })();
    </script>
  </body>
</html>
```

A Note on Memory Usage

To keep things simple, this tutorial does not worry about the memory usage of tensors or disposing of them afterward; however, this matters for bigger, more complex projects. With the WebGL backend, TensorFlow.js stores tensor data on the GPU, where JavaScript's garbage collector cannot free it, so we have to dispose of tensors ourselves to prevent memory leaks. We can do that by calling the dispose() method on each tensor object. Alternatively, we can let TensorFlow.js manage disposal by wrapping our code in tf.tidy(). It disposes of every tensor created inside the function except the one the function returns. tf.tidy() cannot wrap asynchronous code, so the await on data() stays outside it:

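```javascript
for( let i = 0; i < data.length; i++ ) {
  let x = data[ i ];
  // tf.tidy() disposes of the intermediate tensors (tensor1d, stack)
  // and keeps only the tensor returned from the function.
  let result = tf.tidy( () => {
    return model.predict( tf.stack( [ tf.tensor1d( x ) ] ) );
  });
  let prediction = await result.data();
  // The returned tensor survives tidy(), so we dispose of it ourselves.
  result.dispose();
  console.log( x[ 0 ] + ", " + x[ 1 ] + " -> Expected: " + output[ i ][ 0 ] + " Predicted: " + prediction[ 0 ] );
}
```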

What’s Next? Dogs and Pizza?

So you’ve seen how easy it is to set up and use TensorFlow in a browser. Don’t stop here; there’s more! How about we build on the progress we’ve made so far and try something more interesting, like detecting animals and objects in an image?

Follow along with the next article in this series, Dogs and Pizza: Computer Vision in the Browser with TensorFlow.js.

Raphael Mun is a tech entrepreneur and educator who has been developing software professionally for over 20 years. He currently runs Lemmino, Inc and teaches and entertains through his Instafluff livestreams on Twitch building open source projects with his community.
