Natural Language Processing needs source data to process. We'll look at some examples, then download some relevant datasets for use in tutorial exercises.

The goal of this series on Sentiment Analysis is to use Python and the open-source Natural Language Toolkit (NLTK) to build a library that scans replies to Reddit posts and detects if posters are using negative, hostile or otherwise unfriendly language.

To get started with a library like Natural Language Toolkit (NLTK) for natural language processing (NLP), we need some textual data to explore. We'll look at datasets provided by NLTK, as well as an example of capturing your own textual corpus for analysis.

As a bonus, we'll also explore how the Reddit API can be used to capture interesting comment data for NLP analysis.

If you haven't installed NLTK yet, see Introducing NLTK for Natural Language Processing with Python for a quick primer and setup instructions.

Exploring NLTK Datasets

One convenience that comes with NLTK is a rich corpus of data integrated with the toolkit. You can download a number of additional datasets directly using NLTK.

Let’s take a look at one of the datasets included in the NLTK corpus, nltk.corpus.movie_reviews, to see what the data looks like. movie_reviews is a collection of 2,000 movie reviews from IMDB, each labeled as positive or negative.

If you were going to use these reviews, you'd start by downloading the movie_reviews corpus.

```python
import nltk

# Download the corpus (only needed once; NLTK caches it under nltk_data)
nltk.download('movie_reviews')

from nltk.corpus import movie_reviews
```

The install location varies depending on your operating system and installation settings. Inspect nltk.data.path to see the directories where NLTK looks for data, then find the corpus on your file system and explore it. The corpus is just a collection of text files containing the words originally written in each review.

```
~/nltk_data/corpora/movie_reviews
├── README
├── neg
│   ├── cv000_29416.txt
│   ├── cv001_19502.txt
│   ...
│   ├── cv997_5152.txt
│   ├── cv998_15691.txt
│   └── cv999_14636.txt
└── pos
    ├── cv000_29590.txt
    ├── cv001_18431.txt
    ├── cv002_15918.txt
    ├── ...
```
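Because the corpus is just folders of text files named after their labels, you can also read it without NLTK's corpus reader. Here's a minimal sketch assuming the neg/pos layout shown above; load_labeled_reviews is a hypothetical helper, not part of NLTK. Through NLTK itself, movie_reviews.fileids() and movie_reviews.raw() give you access to the same files.

```python
from pathlib import Path


def load_labeled_reviews(root):
    """Return (text, label) pairs from a movie_reviews-style directory.

    Hypothetical helper: assumes each label is a subdirectory of `root`
    (e.g. "neg" and "pos") holding one review per .txt file.
    """
    root = Path(root).expanduser()
    pairs = []
    # Sort for a deterministic order; files such as README are skipped
    # because only subdirectories are treated as labels.
    for label_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for review_file in sorted(label_dir.glob("*.txt")):
            # errors="replace" keeps loading even if a file has odd bytes
            text = review_file.read_text(encoding="utf-8", errors="replace")
            pairs.append((text, label_dir.name))
    return pairs
```

With the corpus downloaded, calling load_labeled_reviews("~/nltk_data/corpora/movie_reviews") should return one pair per review file; adjust the path if nltk.data.path points elsewhere on your system.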

This labeled dataset is useful for machine learning applications. The words most commonly used in positive or negative reviews can serve as features for supervised training, and the resulting model can then be used to classify new datasets. We’ll come back to that idea in the second part. The NLTK website lists other datasets as well, such as Amazon product reviews and Twitter social media posts.
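To make the supervised-training idea concrete, here's a small sketch in plain Python that counts the most common words per label and turns a document into a bag-of-words featureset. The helper names top_words and document_features are hypothetical; the dict-of-features shape follows the convention NLTK's classifiers use, where nltk.NaiveBayesClassifier.train() takes a list of (featureset, label) pairs.

```python
from collections import Counter


def top_words(labeled_docs, n=10):
    """Count the n most common words for each label.

    labeled_docs: iterable of (list_of_words, label) pairs, e.g. built from
    movie_reviews.words(fileid) and that file's category.
    """
    counts = {}
    for words, label in labeled_docs:
        # Lowercase and keep only alphabetic tokens (drops punctuation)
        counts.setdefault(label, Counter()).update(
            w.lower() for w in words if w.isalpha()
        )
    return {label: counter.most_common(n) for label, counter in counts.items()}


def document_features(words, vocabulary):
    """Bag-of-words featureset: {'contains(word)': bool} per vocabulary word."""
    present = {w.lower() for w in words}
    return {f"contains({word})": word in present for word in vocabulary}
```

With the corpus downloaded, training pairs could be built as [(document_features(movie_reviews.words(f), vocab), cat) for cat in movie_reviews.categories() for f in movie_reviews.fileids(cat)], where vocab is a word list you choose, for example the top words from each label.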

What if you want to use a dataset other than the ones in the NLTK corpus?

NLTK doesn't come with a Reddit corpus, so let's use that as an example of how to assemble some NLP data ourselves.

Gathering NLP Data from Reddit

Let's say we wanted to perform an NLP sentiment analysis. We'd need a dataset that includes the text of public conversations between users or customers.

NLTK does not have an existing dataset for this kind of analysis, so we’ll need to gather something similar for ourselves. For this exercise, we'll use publicly available data from Reddit discussions.

Why Reddit?

Reddit, if you are not familiar, is an aggregator of user-generated content, including articles, photos, videos, and text-based posts, from a very large community with diverse interests. With over 400 million users and 138,000 active areas of interest, called "subreddits," it is not surprising that the site consistently ranks among the 10 most-visited sites on the Internet.

It is also a place where people go to discuss and ask questions about technology, techniques, new products, books, and many other topics. It is particularly popular among the 18-35 age demographic sought after by many marketers.

Regardless of what your business does, there is a good chance somebody on Reddit is interested in discussing it, and you can learn something about your fans and your critics.

Data is available to the public through the Reddit API, but we need to do a little setup before we can access it.

Getting Started with the Reddit API

To start using the Reddit API you will need to go through a few steps.

- Create an account

- Register for Reddit API access

- Create a Reddit app

Creating an account should be straightforward. Go to https://reddit.com and click "Sign Up", or log in if you already have an account.

Next, register for Reddit API access. You can find details about how to get access here: https://www.reddit.com/wiki/api.

For production purposes, it’s important to read through these terms of use and accept them for API access.

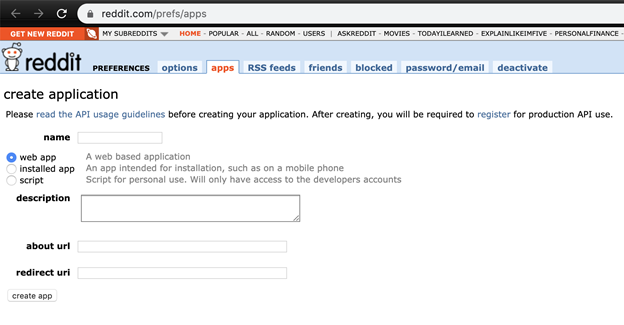

Upon registration, you’ll land on a URL for creating a Reddit app: https://www.reddit.com/prefs/apps/.

You can choose any values that suit your needs, but at a minimum, you need to enter a name (I'm using "sentiment analysis"), type ("script"), and redirect url ("http://localhost.com").

Once you click "create app", you’ll see the application's client ID (shown under the app name) and its secret. You'll need both to initialize the API client.

To learn more about the Reddit API, you can review the REST documentation or the r/redditdev subreddit community.

Installing a Reddit API Client

Rather than calling the REST endpoints directly, you can use a client library for Python applications called the Python Reddit API Wrapper (PRAW).

Install the Python API client with the Python pip package manager in a terminal or command prompt:

```shell
pip install praw
```

To initialize the client, you’ll need to pass your client_id, client_secret, and a user_agent string that identifies your application, as the Reddit API rules require. I like to store these credentials in environment variables so that they aren’t accidentally committed to the codebase; the standard Python os module can read them. Here’s an example that initializes the praw client:

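```python
import os

import praw

# Credentials come from environment variables rather than being hard-coded
reddit = praw.Reddit(
    client_id=os.environ['REDDIT_CLIENT_ID'],
    client_secret=os.environ['REDDIT_CLIENT_SECRET'],
    # Reddit's recommended format: <platform>:<app ID>:<version> (by /u/<username>)
    user_agent="script:sentiment-analysis:v0.0.1 (by /u/{})".format(os.environ['REDDIT_USERNAME']),
)
```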
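If you'd rather fail with a clear message when a variable is missing instead of a bare KeyError, you can wrap the setup in a small helper. This is a sketch: read_reddit_settings and build_user_agent are hypothetical names, and the user-agent format follows Reddit's guidance of <platform>:<app ID>:<version string> (by /u/<username>).

```python
import os


def build_user_agent(app_id, version, username, platform="script"):
    """Format a User-Agent as <platform>:<app ID>:<version> (by /u/<username>)."""
    return f"{platform}:{app_id}:{version} (by /u/{username})"


def read_reddit_settings(env=None):
    """Collect praw.Reddit keyword arguments from environment variables.

    Hypothetical helper; raises a descriptive error if anything is missing.
    """
    env = os.environ if env is None else env
    required = ["REDDIT_CLIENT_ID", "REDDIT_CLIENT_SECRET", "REDDIT_USERNAME"]
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "client_id": env["REDDIT_CLIENT_ID"],
        "client_secret": env["REDDIT_CLIENT_SECRET"],
        "user_agent": build_user_agent(
            "sentiment-analysis", "v0.0.1", env["REDDIT_USERNAME"]
        ),
    }
```

The result unpacks straight into the client: reddit = praw.Reddit(**read_reddit_settings()).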

With the Reddit API initialized, we’ll look at a few useful ways to get data for our sentiment analysis.

Retrieving Submissions from a Subreddit

Apologies to avid Redditors who have been using the site for years, but let’s cover a few basics. If you have a product, technology, activity, or community that interests you, you can find and subscribe to a subreddit where it's discussed. For example, the r/learnpython subreddit allows users to post submissions (topics for discussion) that other users can vote on (up or down) and comment on or ask questions about.

There are a few ways to sort or order these posts depending on your preferences:

- New - the most recent posts submitted.

- Top - the posts that have the most upvotes in the Reddit voting system.

- Rising - the posts that have seen the most recent increase in upvotes and comments.

- Hot - the posts that have been getting upvotes/comments recently.

- Controversial - the posts and comments getting both upvotes and downvotes.

Each of these has a corresponding method on PRAW's Subreddit object. Here are a few examples:

```python
sub = reddit.subreddit('learnpython')

# Listings are lazy generators; no request is made until you iterate
top_posts_of_the_day = sub.top(time_filter='day')
hot_posts = sub.hot(limit=20)
controversial_posts = sub.controversial(time_filter='month', limit=10)
nltk_posts = sub.search('nltk')

for submission in controversial_posts:
    print("TITLE: {}".format(submission.title))
    print("AUTHOR: {}".format(submission.author))
    print("CREATED: {}".format(submission.created))
    print("COMMENTS: {}".format(submission.num_comments))
    print("UPS: {}".format(submission.ups))
    # Reddit obscures vote counts, so downs is typically reported as 0
    print("DOWNS: {}".format(submission.downs))
    # For link posts, url is the linked page; permalink is the Reddit discussion
    print("URL: {}".format(submission.url))
```
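Printing is handy for exploring, but for analysis you'll want to keep the data. Here's a minimal sketch that turns a submission into a plain dict you can save as JSON or CSV. submission_to_record is a hypothetical helper; it assumes the PRAW Submission attributes id, title, author, created_utc, num_comments, score, and url, and uses created_utc because that timestamp is explicitly UTC.

```python
from datetime import datetime, timezone


def submission_to_record(submission):
    """Flatten a submission's key fields into a JSON-serializable dict.

    Works with any object exposing these attributes (a PRAW Submission
    or a stand-in used for testing).
    """
    author = submission.author
    return {
        "id": submission.id,
        "title": submission.title,
        # author is None when the posting account has been deleted
        "author": str(author) if author is not None else None,
        # created_utc is a Unix timestamp; store it as ISO 8601 in UTC
        "created": datetime.fromtimestamp(
            submission.created_utc, tz=timezone.utc
        ).isoformat(),
        "num_comments": submission.num_comments,
        "score": submission.score,
        "url": submission.url,
    }
```

For example, records = [submission_to_record(s) for s in sub.hot(limit=20)] gives a list you can write out with json.dump().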

Every submission on Reddit has a URL, so regardless of whether we found a submission through search, by filtering on date or popularity, or through a direct link, we can use it to retrieve user comments.
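A submission's discussion URL contains its short base-36 ID after /comments/. PRAW extracts this for you when you pass url= to reddit.submission(), but if you're storing links yourself, a sketch like this one can pull out the ID. submission_id_from_url is a hypothetical helper, and it assumes full /r/<subreddit>/comments/<id>/ URLs rather than short redd.it links.

```python
from urllib.parse import urlparse


def submission_id_from_url(url):
    """Return the submission ID that follows /comments/ in a Reddit URL.

    Hypothetical helper; raises ValueError for URLs without that segment.
    """
    # Split the path into non-empty segments, e.g. ["r", "learnpython", "comments", "fwhcas", ...]
    parts = [p for p in urlparse(url).path.split("/") if p]
    try:
        return parts[parts.index("comments") + 1]
    except (ValueError, IndexError):
        raise ValueError(f"Not a Reddit comments URL: {url}") from None
```

The ID can then be passed to the client with reddit.submission(id=...).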

How to Get Comments on a Post

When a "Redditor" (a registered Reddit user) makes a comment on a post, other users can reply. If we want to understand how our audience is responding to a post that mentions our product, service, or content, the primary data we’re after is the user-generated comments so that we can do sentiment analysis on them.
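Replies nest under the comments they answer, so a post's comments form a tree. For sentiment analysis you usually want a flat list of every comment regardless of depth. The toy sketch below shows one way to flatten such a tree breadth-first; the Comment dataclass is a hypothetical stand-in, not PRAW's class, and PRAW's comments.list() does this flattening for you.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Comment:
    """Hypothetical stand-in for a Reddit comment: a body plus nested replies."""
    body: str
    replies: list = field(default_factory=list)


def flatten_comments(top_level):
    """Return every comment in the reply tree, breadth-first (top level first)."""
    queue = deque(top_level)
    flat = []
    while queue:
        comment = queue.popleft()
        flat.append(comment)
        # Children are queued behind the current level, giving level order
        queue.extend(comment.replies)
    return flat
```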

If you know the URL of a particular post that is interesting, you can retrieve the submission from that link directly rather than querying a subreddit:

```python
post = "https://www.reddit.com/r/learnpython/comments/fwhcas/whats_the_difference_between_and_is_not"
submission = reddit.submission(url=post)

# Expand every "load more comments" placeholder before flattening
submission.comments.replace_more(limit=None)
comments = submission.comments.list()
```

The use of replace_more is an API quirk that mirrors the behavior of the web interface. On the website, a long thread shows only some of its comments, and you need to click a "load more comments" link to see the rest. In PRAW, those hidden branches appear as MoreComments placeholders. Passing limit=None to replace_more() replaces every placeholder with the comments it stands for, so comments.list() returns a flattened list containing only the actual user comments we need for our sentiment analysis.
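Before scoring sentiment, it helps to filter out comments with no usable text. This sketch keeps comment bodies and skips the "[deleted]" and "[removed]" text Reddit shows in place of deleted or moderated comments; comment_texts and its min_length parameter are hypothetical. It only relies on each comment having a body attribute, as PRAW's Comment objects do.

```python
def comment_texts(comments, min_length=1):
    """Return stripped comment bodies that are worth analyzing.

    Skips deleted/removed placeholders and anything shorter than
    min_length characters after stripping whitespace.
    """
    skip = {"[deleted]", "[removed]"}
    texts = []
    for comment in comments:
        # Objects without a body (e.g. a leftover placeholder) become ""
        body = getattr(comment, "body", "").strip()
        if body in skip or len(body) < min_length:
            continue
        texts.append(body)
    return texts
```

After the replace_more() call above, texts = comment_texts(comments) gives you plain strings ready for the sentiment analysis step.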

Next Steps

You've seen some techniques for accessing pre-configured datasets for NLP, as well as building your own dataset from the Reddit API and Reddit comments.

The next step in NLP analysis is covered in the article Using Pre-trained VADER Models for NLTK Sentiment Analysis.

If you need to back up and learn more about natural language processing with NLTK, see Introducing NLTK for Natural Language Processing with Python.

Jayson manages Developer Relations for Dolby Laboratories, helping developers deliver spectacular experiences with media.

Jayson likes learning and teaching about new technologies with a wide range of applications and industries. He's built solutions with companies including DreamWorks Animation (Kung Fu Panda, How to Train Your Dragon, etc.), General Electric (Predix Industrial IoT), The MathWorks (MATLAB), Rackspace (Cloud), and HERE Technologies (Maps, Automotive).