This article provides an FAQ about using CodeProject.AI Server and Blue Iris, covering topics such as setting up CodeProject.AI Server in Blue Iris, detecting objects, setting up alerts, using Analyze with AI, using the AI tab in the Blue Iris Status window, setting up face detection, and detecting objects with custom models.

Over the past few weeks, we've noticed a lot of questions about using CodeProject.AI Server and Blue Iris. We and the Blue Iris team are constantly working to make the union between CodeProject.AI and Blue Iris smoother and easier. Nevertheless, there are times when the Blue Iris User Manual and our articles on using CodeProject.AI Server and Blue Iris aren't enough, so here is an FAQ that hopefully answers any questions you might have. If you have additional questions, feel free to ask them in the comments below, and this article will be updated. We'll also update it whenever we find a new question to add.

We also have a series of articles on using CodeProject.AI Server and Blue Iris that offer in-depth, step-by-step guides. If some nuance is missing here, like setting up a camera in Blue Iris, chances are it is covered in one of these articles:

In this article, I am using Blue Iris 5, v5.7.3.0. Your version may look slightly different.

How Do I Set Up CodeProject.AI Server in Blue Iris

In the main Blue Iris settings, click on the AI tab.

Ensure the Use AI Server on IP/port box is checked. The IP should be filled in by default. For me, this was 127.0.0.1. I've seen others put in localhost and that works too.
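To double-check outside Blue Iris that something is actually listening at the IP and port Blue Iris points to, a short Python sketch like the following can help. It assumes the server uses port 32168, the port in the dashboard URL used later in this FAQ; `server_url` and `is_listening` are hypothetical helper names, not part of Blue Iris or CodeProject.AI Server.

```python
import socket

# Assumption: CodeProject.AI Server listens on its default port, 32168.
DEFAULT_PORT = 32168


def server_url(host: str = "127.0.0.1", port: int = DEFAULT_PORT) -> str:
    """Base URL for the server, e.g. the dashboard address."""
    return f"http://{host}:{port}/"


def is_listening(host: str = "127.0.0.1", port: int = DEFAULT_PORT,
                 timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds.

    This only shows that something accepts connections on that port;
    it does not prove the listener is CodeProject.AI Server.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

With the server running locally, `is_listening("127.0.0.1")` and `is_listening("localhost")` would typically both return True, which matches the observation that either address works in Blue Iris.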

Check the Use custom models box if you want to use custom models. CodeProject.AI Server ships with all of the custom models below. You don't strictly need these models to detect these items, but checking the box ensures that the following models are used to detect the following items:

- actionnetv2 - This model detects human actions:
  - calling
  - clapping
  - cycling
  - dancing
  - drinking
  - eating
  - fighting
  - hugging
  - kissing
  - laughing
  - listening-to-music
  - running
  - sitting
  - sleeping
  - texting
  - using-laptop

- ipcam-animal - This model detects the following animals: bird, cat, dog, horse, sheep, cow, bear, deer, rabbit, raccoon, fox, skunk, squirrel, pig

- ipcam-combined - This model detects the following items: person, bicycle, car, motorcycle, bus, truck, bird, cat, dog, horse, sheep, cow, bear, deer, rabbit, raccoon, fox, skunk, squirrel, pig

- ipcam-dark - This model detects the following items, and has been trained to recognize them in darker images (potentially night images): bicycle, bus, car, cat, dog, motorcycle, person

- ipcam-general - This model detects the following items, and has been trained to recognize them in darker images (potentially night images): person, vehicle

- license-plate - This model detects license plates, both during the day (DayPlate) and at night (NightPlate). It locates the plate; reading the plate number is handled by ALPR (see the ALPR box below).
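To see at a glance which of the models above can detect a given object, the list can be transcribed into a small lookup table. The Python sketch below does exactly that and nothing more; `CUSTOM_MODELS` and `models_for` are illustrative names, and the bundled models and their labels may change between CodeProject.AI Server versions.

```python
# Labels transcribed from the custom model list above (a snapshot; your
# CodeProject.AI Server version may bundle different models or labels).
CUSTOM_MODELS = {
    "actionnetv2": {"calling", "clapping", "cycling", "dancing", "drinking",
                    "eating", "fighting", "hugging", "kissing", "laughing",
                    "listening-to-music", "running", "sitting", "sleeping",
                    "texting", "using-laptop"},
    "ipcam-animal": {"bird", "cat", "dog", "horse", "sheep", "cow", "bear",
                     "deer", "rabbit", "raccoon", "fox", "skunk", "squirrel",
                     "pig"},
    "ipcam-combined": {"person", "bicycle", "car", "motorcycle", "bus",
                       "truck", "bird", "cat", "dog", "horse", "sheep", "cow",
                       "bear", "deer", "rabbit", "raccoon", "fox", "skunk",
                       "squirrel", "pig"},
    "ipcam-dark": {"bicycle", "bus", "car", "cat", "dog", "motorcycle",
                   "person"},
    "ipcam-general": {"person", "vehicle"},
    "license-plate": {"DayPlate", "NightPlate"},
}


def models_for(label: str) -> list[str]:
    """Return, alphabetically, the custom models that list `label`."""
    return sorted(name for name, labels in CUSTOM_MODELS.items()
                  if label in labels)
```

For example, `models_for("person")` returns `["ipcam-combined", "ipcam-dark", "ipcam-general"]`, which is why a person can be confirmed with any of those three models.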

Use the Default object detection setting to choose the model size, which determines the complexity of the models being used. The bigger the size you choose (Medium, Large), the more CPU (or GPU) resources are consumed. I recommend leaving this at the default, which is Medium.

Check the Facial recognition box if you intend to detect faces, the ALPR box if you intend to detect license plates, and the Use GPU box if you want to use your computer's GPU for all of these detections.

Hit OK to accept these settings.

And that's it! That's all you need to do to set up CodeProject.AI Server with Blue Iris.

How Do I Detect Something with Blue Iris

The whole point of using CodeProject.AI Server with Blue Iris is to do detections. Once you've set up CodeProject.AI Server with Blue Iris, you're ready to detect something. In this section, we'll look at how to detect something that is included in CodeProject.AI Server. As an example, I'll demonstrate how to detect a person.

Make sure CodeProject.AI Server is open, and Object Detection (YOLOv5 6.2) is started. You can do this either by clicking Open AI dashboard in the AI tab in Blue Iris main settings, or by simply putting http://localhost:32168/ into your browser.

Note that if you don't have a CUDA-enabled GPU, you can use Object Detection (YOLOv5 .NET), or you can experiment with both .NET and YOLOv5 6.2 to see which works best for you. If you have an older GPU, Object Detection (YOLOv5 3.1) may be of use. And if you're using a Raspberry Pi? Use the TFLite module (new in 2.1). To keep this FAQ entry simple, I'll assume you're using Object Detection (YOLOv5 6.2).

If for some reason Object Detection (YOLOv5 6.2) is off, click the ... next to it and from the dropdown, select Start.

The first step is to go to the Camera Settings.

Then click on the Triggers tab.

Then, click Artificial Intelligence. The majority of what you need to set up to detect something with CodeProject.AI Server is here.

Ensure that CodeProject.AI or DeepStack is selected (it should be). In the To confirm box, type "person". This box lists the items you want to detect. You can detect anything listed in the previous section, How Do I Set Up CodeProject.AI Server in Blue Iris, provided you input the correct name of the custom model. You can also list multiple objects, separated by commas with no spaces. For example, to detect people, dogs, and rabbits, I would input: "person,dog,rabbit".
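A To confirm value is easy to mistype. The hypothetical Python helper below is not part of Blue Iris; it only applies the rules stated above (items separated by commas, no spaces, and names the chosen model actually detects) so you can sanity-check a value before entering it. The label set you pass in is up to you, for example the ipcam-combined labels from the previous section.

```python
def check_to_confirm(value: str, model_labels: set[str]) -> list[str]:
    """Return problems found in a To confirm value (an empty list means OK)."""
    problems = []
    if " " in value:
        problems.append("remove spaces: separate items with commas only")
    for item in value.split(","):
        label = item.strip()
        if not label:
            problems.append("empty entry (doubled or trailing comma)")
        elif label not in model_labels:
            problems.append(f"'{label}' is not a label of the selected model")
    return problems
```

With the ipcam-combined labels, `check_to_confirm("person,dog,rabbit", labels)` returns an empty list, while `"person, dog"` is flagged for its space.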

For our case, we want to detect a person, and the best model for that is ipcam-combined. So in the Custom models field, input "ipcam-combined".
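Blue Iris talks to CodeProject.AI Server over its HTTP API. If you want to test a model such as ipcam-combined yourself, the Python sketch below builds a comparable request using only the standard library. It assumes the server's DeepStack-compatible routes (/v1/vision/detection for the default model and /v1/vision/custom/<model> for a custom model), a multipart form with an `image` field and an optional `min_confidence` between 0 and 1, and a JSON reply with `success` and a `predictions` list. Check the API reference for your server version before relying on it; the function names are hypothetical.

```python
import json
import urllib.request
import uuid
from typing import Optional


def build_detection_request(image_bytes: bytes,
                            custom_model: Optional[str] = None,
                            base_url: str = "http://localhost:32168",
                            min_confidence: float = 0.5) -> urllib.request.Request:
    """Build (but do not send) a multipart POST for one image.

    Assumed routes: /v1/vision/custom/<model> when a custom model such as
    "ipcam-combined" is given, otherwise /v1/vision/detection.
    """
    route = (f"/v1/vision/custom/{custom_model}" if custom_model
             else "/v1/vision/detection")
    boundary = uuid.uuid4().hex
    body = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="min_confidence"\r\n\r\n'
        f"{min_confidence}\r\n"
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="image"; filename="frame.jpg"\r\n'
        "Content-Type: image/jpeg\r\n\r\n"
    ).encode() + image_bytes + f"\r\n--{boundary}--\r\n".encode()
    return urllib.request.Request(
        base_url + route,
        data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


def parse_predictions(body: bytes) -> list[tuple[str, float]]:
    """Extract (label, confidence) pairs from the assumed JSON reply."""
    data = json.loads(body)
    if not data.get("success"):
        return []
    return [(p["label"], p["confidence"]) for p in data.get("predictions", [])]


def detect(image_path: str,
           custom_model: Optional[str] = None) -> list[tuple[str, float]]:
    """Send one image to a running server and return what it found."""
    with open(image_path, "rb") as f:
        request = build_detection_request(f.read(), custom_model)
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_predictions(response.read())
```

For example, `detect("snapshot.jpg", "ipcam-combined")` (with a hypothetical snapshot file) would return a list of (label, confidence) pairs. Note that the server's confidence is on a 0 to 1 scale, while the min confidence in Blue Iris is entered as a percentage.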

As for the various checkboxes, it's a good idea to check the same boxes as in the image above, particularly Save AI analysis details, which we'll use in another FAQ entry on viewing AI results in the Blue Iris Status window.

You can experiment with the min confidence, + real-time images, and analyze one each settings to see what works for you. min confidence sets how confident CodeProject.AI must be in a detection of an item from the To confirm list for that detection to count. Some people set it high to avoid false detections, but 50 is generally a good starting point. + real-time images is how many additional images are sent to CodeProject.AI Server for analysis, one every analyze one each interval. No matter what number you put in + real-time images, analysis stops once the desired object is detected. The defaults for these two settings are 2 additional real-time images, one every 750 ms. The right values depend on your CPU or GPU capability, but my personal recommendation is 10 additional real-time images, one every 500 ms.
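To make the interaction between these three settings concrete, here is a small Python simulation. It models the behavior described above rather than reproducing Blue Iris code, and it assumes that the triggering frame is analyzed at t = 0, that each of the + real-time images follows one analyze one each interval later, that min confidence is a percentage compared against the server's 0 to 1 confidence, and that analysis stops at the first frame that confirms a To confirm label. `AnalysisSettings` and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnalysisSettings:
    min_confidence: int = 50   # percent, as typed into Blue Iris
    extra_images: int = 2      # "+ real-time images"
    interval_ms: int = 750     # "analyze one each"


def sample_times_ms(s: AnalysisSettings) -> list[int]:
    """When each frame is analyzed, in ms after the trigger (assumed timing)."""
    return [i * s.interval_ms for i in range(s.extra_images + 1)]


def first_confirmation(s: AnalysisSettings,
                       frames: list[list[tuple[str, float]]],
                       to_confirm: set[str]) -> Optional[tuple[int, int]]:
    """Return (frame index, time in ms) of the first confirming frame, or None.

    `frames` holds the (label, confidence 0-1) pairs reported for each
    sampled frame; frames beyond the configured count are ignored.
    """
    for index, (t, detections) in enumerate(zip(sample_times_ms(s), frames)):
        for label, confidence in detections:
            if label in to_confirm and confidence * 100 >= s.min_confidence:
                return index, t  # stop here: no further frames are analyzed
    return None
```

Under these assumptions, the defaults send the last extra image 1,500 ms after the trigger (2 × 750 ms), while 10 images every 500 ms stretch the window to 5,000 ms. The longer window gives a person more chances to be confirmed, at the cost of more requests to the server.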

And that's it. You can leave all the other default settings checked. This should be all you need to successfully detect a person.

How Do I Set Up Alerts in Blue Iris

There are a number of alerts you can send in Blue Iris. The ones you want to send for CodeProject.AI Server are based on AI detections. Here's a quick example of how to set up a simple sound notification, which is one that I always use while testing.

Go to the Camera Settings.

Then go to the Alerts tab. Under the Actions heading at the bottom is a button that says On alert.... Click it.

Next, click on the + Add button, and from the dropdown, select Sound.

From here, it's very simple. The field we really need from this menu is Required AI objects, which lets you enter the items you want to trigger the alert. In the previous entry, How Do I Detect Something with Blue Iris, I detected a person, so here I'll use "person" too. Hit OK to accept, then OK two more times to exit the Camera Settings.
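Conceptually, Required AI objects acts as a filter on the alert action. The hypothetical Python function below models that idea under two assumptions of ours, which you should check against the Blue Iris User Manual: the action fires if any one of the listed objects was confirmed, and an empty field places no AI requirement on the action.

```python
def alert_should_fire(confirmed: set[str], required_ai_objects: str) -> bool:
    """Model of an alert action gated by a Required AI objects value."""
    required = {item.strip() for item in required_ai_objects.split(",")
                if item.strip()}
    if not required:
        # Assumption: an empty field places no AI requirement on the action.
        return True
    # Assumption: any one of the listed objects is enough to fire the alert.
    return bool(required & confirmed)
```

With "person" in the field, a clip where only a dog was confirmed would not play the sound, while a clip with a confirmed person would.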

And that's it! That's how you set up alerts.

There are many other alerts to choose from, such as a push notification to a phone, an MQTT message, an SMS text, or an email. If you'd like entries on setting these up, please let me know in the comments below.

How Do I Set Up Analyze with AI

If you've seen other people use Blue Iris, you've probably seen the bounding boxes drawn around detected objects, labeled with the object name and the confidence percentage. Here's how you set that up.

Make sure CodeProject.AI Server is open, and Object Detection (YOLOv5 6.2) is started. You can do this either by clicking Open AI dashboard in the AI tab in Blue Iris main settings, or by simply putting http://localhost:32168/ into your browser.

Note that if you don't have a CUDA-enabled GPU, you can use Object Detection (YOLOv5 .NET), or you can experiment with both .NET and YOLOv5 6.2 to see which works best for you. If you have an older GPU, Object Detection (YOLOv5 3.1) may be of use. And if you're using a Raspberry Pi? Use the TFLite module (new in 2.1). To keep this FAQ entry simple, I'll assume you're using Object Detection (YOLOv5 6.2).

If for some reason Object Detection (YOLOv5 6.2) is off, click the ... next to it and from the dropdown select Start.

Assuming you've already followed the previous two entries, How Do I Detect Something with Blue Iris and How Do I Set Up Alerts in Blue Iris, to set up Blue Iris to detect a person, here's how to use Analyze with AI to detect a person.

First, we have to make sure that recording is set up correctly. Click on the Camera Settings.

Then click on the Video tab. Make sure that the Video box is checked, and that the dropdown says When triggered. Hit OK.

Now, make sure the camera is turned on. If you're testing, make sure a person is in front of the camera. Mine sits on my desk, so that person is me.

The record indicator in the upper-right corner turns on automatically, because a person has been detected and video recording has been triggered.

Now double-click on the clip in the Clips window to open up the recording so far. Notice how there's the little person icon in the top-right. This means a person was detected on that clip.

The clip automatically starts playing. While it plays, right-click on the footage and, from the Testing & Tuning option, select Analyze with AI. Now, whenever a person is detected while you watch this clip's playback, a bounding box should appear labeled "person" along with the confidence percentage.
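The overlay text is simply each prediction's label plus its confidence as a whole-number percentage. The Python sketch below shows that formatting for one prediction in the server's assumed reply format (`label`, `confidence` from 0 to 1, and pixel corners `x_min`, `y_min`, `x_max`, `y_max`). It mirrors what you see on screen rather than reproducing Blue Iris's drawing code, and the function name is hypothetical.

```python
from typing import Optional


def overlay(prediction: dict,
            min_confidence: float = 0.5) -> Optional[tuple[str, tuple[int, int, int, int]]]:
    """Return (caption, box) for one prediction, or None below the threshold.

    Assumes the reply fields "label", "confidence" (0 to 1) and the pixel
    corners "x_min", "y_min", "x_max", "y_max".
    """
    if prediction["confidence"] < min_confidence:
        return None
    caption = f"{prediction['label']} {round(prediction['confidence'] * 100)}%"
    box = (prediction["x_min"], prediction["y_min"],
           prediction["x_max"], prediction["y_max"])
    return caption, box
```

A drawing library would then draw `box` as a rectangle and place `caption` near its top-left corner.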

Like that! And that's how you set up the Analyze with AI feature in Blue Iris.

How Do I Use the AI Tab in the Blue Iris Status

Blue Iris has what is essentially a log window, which also has several tabs with other options. You can look at the logs, but in some cases, AI-specific logs are more helpful. Here's how you set this up.

Make sure CodeProject.AI Server is open, and Object Detection (YOLOv5 6.2) is started. You can do this either by clicking Open AI dashboard in the AI tab in Blue Iris main settings, or by simply putting http://localhost:32168/ into your browser.

Note that if you don't have a CUDA-enabled GPU, you can use Object Detection (YOLOv5 .NET), or you can experiment with both .NET and YOLOv5 6.2 to see which works best for you. If you have an older GPU, Object Detection (YOLOv5 3.1) may be of use. And if you're using a Raspberry Pi? Use the TFLite module (new in 2.1). To keep this FAQ entry simple, I'll assume you're using Object Detection (YOLOv5 6.2).

If for some reason Object Detection (YOLOv5 6.2) is off, click the ... next to it and from the dropdown select Start.

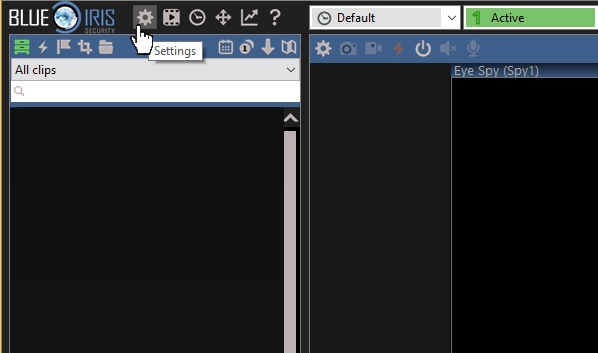

In the upper-left of the main Blue Iris window is the Blue Iris Status window.

The Blue Iris Status window has a series of tabs: Log, Cameras, Connections, Storage, AI, and Remote. From the Log tab, you can see AI notifications (for example, AI: [ipcam-combined] person 92% [228,86 1036, 1063] 341ms), but you can also use the AI tab.
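If you'd rather pull these AI lines out of a saved log programmatically, a regular expression built from the example above is enough. The Python sketch assumes every AI line follows that exact shape, and it reads the bracketed numbers as the bounding box corners (x1,y1 and x2,y2) and the trailing value as the processing time; both interpretations are inferred from the single example, and the names are hypothetical.

```python
import re
from typing import Optional

# Shape inferred from: AI: [ipcam-combined] person 92% [228,86 1036, 1063] 341ms
AI_LINE = re.compile(
    r"AI: \[(?P<model>[^\]]+)\] (?P<label>\S+) (?P<confidence>\d+)% "
    r"\[(?P<x1>\d+),\s*(?P<y1>\d+)\s+(?P<x2>\d+),\s*(?P<y2>\d+)\] (?P<ms>\d+)ms"
)


def parse_ai_log_line(line: str) -> Optional[dict]:
    """Return the fields of an AI log entry, or None for any other line."""
    match = AI_LINE.search(line)
    if match is None:
        return None
    g = match.groupdict()
    return {
        "model": g["model"],
        "label": g["label"],
        "confidence": int(g["confidence"]),  # percent
        # Assumed to be the box corners: (x1, y1, x2, y2).
        "box": tuple(int(g[k]) for k in ("x1", "y1", "x2", "y2")),
        "elapsed_ms": int(g["ms"]),  # assumed processing time
    }
```

Because it uses `search`, the pattern also matches when a line carries extra text, such as a timestamp, before the `AI:` marker.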

If you click on the AI tab, you'll see nothing. What we want to do is drag .dat files from our Alerts folder into this window, but to do that, we need to know where the .dat files are. To find them, go to the main Blue Iris settings, under what used to be Clips and archiving but is now Storage.

You can specify the file path for multiple items. I manually created C:\Blue Iris, then the sub-folders C:\Blue Iris\db, C:\Blue Iris\New, and C:\Blue Iris\Alerts. There are no strict requirements for where these go, but it is recommended that the database folder be local and on fast storage, such as an SSD.

For the Alerts folder, specify the location and make a note of it. For me, this was C:\Blue Iris\Alerts.

Now open Windows Explorer and navigate to the Alerts folder. Then go back to the Blue Iris Status window and its AI tab. I happen to know that Spy1.20230405_151332.0.3-1.dat contains a person detection, so I click and drag that file into the Blue Iris Status AI tab.
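When the Alerts folder fills up, finding the right .dat file by hand gets tedious. The Python sketch below lists one camera's alert files, newest first, using a naming pattern inferred from the single example above: the camera's short name (Spy1), then a date and time stamp (20230405_151332, read here as 2023-04-05 15:13:32), then further fields this sketch ignores. That interpretation is an assumption, and the function names are hypothetical.

```python
import re
from datetime import datetime
from pathlib import Path
from typing import Optional

# Assumed pattern: <camera>.<YYYYMMDD_HHMMSS>.<other fields>.dat
ALERT_NAME = re.compile(
    r"^(?P<camera>[^.]+)\.(?P<stamp>\d{8}_\d{6})\..*\.dat$", re.IGNORECASE)


def parse_alert_name(name: str) -> Optional[tuple[str, datetime]]:
    """Split an alert file name into (camera, timestamp), or return None."""
    match = ALERT_NAME.match(name)
    if match is None:
        return None
    return match["camera"], datetime.strptime(match["stamp"], "%Y%m%d_%H%M%S")


def alerts_for_camera(alerts_dir: str, camera: str) -> list[Path]:
    """List one camera's .dat files in the Alerts folder, newest first."""
    found = []
    for path in Path(alerts_dir).glob("*.dat"):
        parsed = parse_alert_name(path.name)
        if parsed is not None and parsed[0] == camera:
            found.append((parsed[1], path))
    return [path for _, path in sorted(found, reverse=True)]
```

For example, the first entry of `alerts_for_camera(r"C:\Blue Iris\Alerts", "Spy1")` would be the most recent Spy1 alert, ready to drag into the AI tab.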

And that's it! Now the AI tab displays an entirely AI-focused report of what was detected, which is more useful for larger .dat files.

How Do I Set Up Face Detection in Blue Iris

Though it's not the most popular Blue Iris use for CodeProject.AI Server, you can also do facial recognition. It's simple to set up; here's how you do it.

Make sure CodeProject.AI Server is open, and Face Processing is started. You can do this either by clicking Open AI dashboard in the AI tab in Blue Iris main settings, or by simply putting http://localhost:32168/ into your browser.

If for some reason Face Processing is off, click the ... next to it and from the dropdown select Start.

Go to the main Blue Iris settings.

Now go to the AI tab and check the Facial recognition box. Then click the Faces... button to create a face profile. From there, click the + symbol, select the image file that contains the face you want recognized, then hit Open. For me, this added the snapshot as face1.

Select face1, click the Edit button (the pencil icon), and rename the face to whatever you want (in my case, "Sean"). Then hit OK to exit the Faces window, and OK again to exit the Blue Iris settings.

Now go to the Triggers tab in the camera settings, then hit Artificial Intelligence to open the camera's AI settings. In the To confirm box, input the name of the face profile you just created; in my case, "Sean". Hit OK to exit the AI settings, then OK to exit the camera settings.
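If you want to register faces or check recognitions directly against the server, for example to script many photos of the same person, the Python sketch below assumes CodeProject.AI Server's DeepStack-compatible face routes: /v1/vision/face/register (form fields `userid` and `image`) and /v1/vision/face/recognize (a JSON reply whose `predictions` each carry a `userid`). Verify both against your server's API reference; the function names are hypothetical. The point it illustrates is the one above: the name in To confirm must match the registered name exactly.

```python
import json
import urllib.request
import uuid


def build_face_register_request(userid: str, image_bytes: bytes,
                                base_url: str = "http://localhost:32168"
                                ) -> urllib.request.Request:
    """Build (but do not send) a request registering one face image."""
    boundary = uuid.uuid4().hex
    body = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="userid"\r\n\r\n'
        f"{userid}\r\n"
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="image"; filename="face.jpg"\r\n'
        "Content-Type: image/jpeg\r\n\r\n"
    ).encode() + image_bytes + f"\r\n--{boundary}--\r\n".encode()
    return urllib.request.Request(
        f"{base_url}/v1/vision/face/register",
        data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


def confirmed_faces(recognize_reply: bytes, to_confirm: str) -> list[str]:
    """Names in an (assumed) recognize reply that appear in a To confirm value."""
    wanted = {name for name in to_confirm.split(",") if name}
    data = json.loads(recognize_reply)
    return [p["userid"] for p in data.get("predictions", [])
            if p.get("userid") in wanted]
```

Sending the request with `urllib.request.urlopen` would register the image under that name; a profile saved as "Sean" is only confirmed when To confirm also says "Sean".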

And that's it! That's how you set up facial recognition in Blue Iris.

How Do I Set Up Detection for Custom Models in Blue Iris

For some, the default detection models that come with CodeProject.AI Server aren't enough. There are tons of excellent, well-trained models out there, some of which may be exactly what you're looking for, that you can download and use with CodeProject.AI Server. Here's how to set up Blue Iris to use a custom model, using a model that detects packages as an example.

Make sure CodeProject.AI Server is open, and Object Detection (YOLOv5 6.2) is started. You can do this either by clicking Open AI dashboard in the AI tab in Blue Iris main settings, or by simply putting http://localhost:32168/ into your browser.

Also make sure that the Use custom model folder box is checked, since that's where the custom models we add are located.

If for some reason Object Detection (YOLOv5 6.2) is off, click the ... next to it and from the dropdown, select Start.

First, we need a model that detects packages. The great Mike Lud, a CodeProject Community Engineer, is training many models and has developed one that detects packages. So the first step is to download the package model and put it in the custom model folder. Go to Mike Lud's GitHub and download package.pt. Then copy package.pt into the custom model folder for CodeProject.AI Server, which is C:\Program Files\CodeProject\AI\modules\ObjectDetectionYolo\custom-models.
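If you install models often, the copy step can be scripted. The Python sketch below copies a .pt file into the custom model folder quoted above and returns the name to type into Blue Iris, which for package.pt is package, matching the next step. It assumes that folder path, which may differ for your server version or install location, and writing under C:\Program Files usually requires an administrator prompt. The function name is hypothetical.

```python
import shutil
from pathlib import Path

# Folder quoted in this FAQ; it may differ for other versions or install paths.
CUSTOM_MODELS_DIR = Path(
    r"C:\Program Files\CodeProject\AI\modules\ObjectDetectionYolo\custom-models")


def install_custom_model(model_file, models_dir=CUSTOM_MODELS_DIR) -> str:
    """Copy a .pt model into the custom model folder.

    Returns the model name to enter in Blue Iris (the file name without .pt).
    """
    src = Path(model_file)
    if src.suffix.lower() != ".pt":
        raise ValueError(f"expected a .pt file, got {src.name}")
    dest_dir = Path(models_dir)
    if not dest_dir.is_dir():
        # A missing folder usually means the path is wrong for this install.
        raise FileNotFoundError(f"custom model folder not found: {dest_dir}")
    shutil.copy2(src, dest_dir / src.name)
    return src.stem
```

Refusing to create a missing folder is deliberate: if the path doesn't exist, the server is probably installed elsewhere, and silently creating a new folder would leave the model where the server never looks.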

Next, go to the Triggers tab in the camera settings, then hit Artificial Intelligence.

Make sure To confirm says "package" and Custom models also says "package". Hit OK to accept the Artificial Intelligence settings, then OK to accept the camera settings.

If you're using custom models, it's important to specify the name of the specific model in the Custom models field; otherwise, Blue Iris cycles through every custom model by default, causing unnecessary processing.

And that's it! You're set up to use your custom model in Blue Iris, which in this case, is to detect packages.

Sean Ewington is the Content Manager for CodeProject.

His programming background is primarily C++ and HTML, but he has experience in other, "unsavoury" languages.

He loves movies, and likes to say inconceivable often, even if it does not mean what he thinks it means.
