Here we’ll discuss how to convert the output values into the detected object labels, confidence scores, and the corresponding boxes.

Introduction

This series assumes that you are familiar with Python, Conda, and ONNX, and have some experience developing iOS applications in Xcode. You are welcome to download the source code for this project. We'll run the code using macOS 10.15+, Xcode 11.7+, and iOS 13+.

Understanding the YOLO v2 Output

YOLO v2 accepts fixed-resolution 416 x 416 input images, which it splits into a 13 x 13 grid. For each image, the model returns a single array of shape (1, 425, 13, 13). The first dimension is the batch (not important for our purposes), and the last two correspond to the 13 x 13 grid. But what about the 425 values we saw in each cell in the previous article?

These values contain the encoded information about the detected objects’ confidence scores and the corresponding bounding box coordinates:

$$[x_1, y_1, w_1, h_1, s_1, c_{01,1}, c_{02,1}, c_{03,1}, \ldots, c_{79,1}, c_{80,1}, x_2, y_2, \ldots, x_5, y_5, w_5, h_5, s_5, c_{01,5}, \ldots, c_{79,5}, c_{80,5}],$$

where:

- $i$ – bounding box index within a given grid cell (values: 1-5)

- $x_i, y_i, w_i, h_i$ – encoded coordinates of box $i$ (x, y, width, and height, respectively)

- $s_i$ – confidence score that box $i$ contains an object

- $c_{01,i}, \ldots, c_{80,i}$ – confidence scores for each of the 80 object classes included in the COCO dataset

A quick check: 5 boxes per cell times 85 values per box (four coordinates, one object confidence score, and 80 class confidence scores) equals exactly 425.
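To make this layout concrete, the sketch below reshapes an array with the model's output shape, (1, 425, 13, 13), into (1, 5, 85, 13, 13): one slice of 85 values per box. It assumes NumPy and uses a dummy array in place of a real prediction; `channel_index` is a hypothetical helper for illustration. Note that the code counts boxes from 0 to 4, whereas the list above counts them from 1 to 5.

```python
import numpy as np

GRID_SIZE = 13
BOXES_PER_CELL = 5
NUM_CLASSES = 80
VALUES_PER_BOX = 5 + NUM_CLASSES  # x, y, w, h, s, then 80 class scores

# Dummy array with the same shape as the model output; the values are arbitrary
raw = np.arange(1 * BOXES_PER_CELL * VALUES_PER_BOX * GRID_SIZE * GRID_SIZE,
                dtype=np.float32).reshape(1, BOXES_PER_CELL * VALUES_PER_BOX,
                                          GRID_SIZE, GRID_SIZE)

# Split the 425 channels into 5 boxes x 85 values (a row-major reshape keeps the order)
per_box = raw.reshape(1, BOXES_PER_CELL, VALUES_PER_BOX, GRID_SIZE, GRID_SIZE)

def channel_index(box, value):
    """Hypothetical helper: channel holding `value` (0-84) of box `box` (0-4)."""
    return box * VALUES_PER_BOX + value

# s for the third box (index 2) in the cell at row 4, column 6
print(channel_index(2, 4))  # 174
assert raw[0, channel_index(2, 4), 4, 6] == per_box[0, 2, 4, 4, 6]
```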

Preparing for YOLO Output Decoding

We need several constants:

```python
GRID_SIZE = 13
CELL_SIZE = int(416 / GRID_SIZE)
BOXES_PER_CELL = 5
ANCHORS = [[0.57273, 0.677385],
           [1.87446, 2.06253],
           [3.33843, 5.47434],
           [7.88282, 3.52778],
           [9.77052, 9.16828]]
```

GRID_SIZE reflects how YOLO splits an image into cells, CELL_SIZE describes the width and height of each cell (in pixels), and BOXES_PER_CELL is the number of predefined boxes that the model considers per cell.

The ANCHORS array contains the width and height factors (in grid-cell units) for each of the five boxes in a cell; the decoding step scales the predicted box width and height by these factors. Note that different YOLO versions use different anchors, so always check which values were used to train your model. The values above come from the yolov2.cfg file in the original YOLO repository.
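Because the decoding code later multiplies each anchor by CELL_SIZE, converting the anchors to pixels gives a feel for the object sizes the five boxes start from. In the sketch below (plain Python, using only the constants above), the smallest anchor is roughly 18 x 22 pixels and the largest roughly 313 x 293 pixels on the 416 x 416 input. When the raw width and height predictions are 0, exp(0) = 1, so a decoded box has exactly its anchor's size.

```python
GRID_SIZE = 13
CELL_SIZE = int(416 / GRID_SIZE)  # 32 pixels

ANCHORS = [[0.57273, 0.677385],
           [1.87446, 2.06253],
           [3.33843, 5.47434],
           [7.88282, 3.52778],
           [9.77052, 9.16828]]

# Anchor width/height converted from grid-cell units to pixels
anchor_sizes_px = [(aw * CELL_SIZE, ah * CELL_SIZE) for aw, ah in ANCHORS]

for b, (w, h) in enumerate(anchor_sizes_px):
    print(f"box {b}: {w:.1f} x {h:.1f} px")
```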

We also need to load labels for the detected objects from the attached coco_names.txt file:

```python
with open('./models/coco_names.txt', 'r') as f:
    COCO_CLASSES = [c.strip() for c in f.readlines()]
```

The first few elements of the COCO_CLASSES list are:

['person', 'bicycle', 'car', 'motorbike', 'aeroplane', 'bus', 'train', 'truck', 'boat', 'traffic light', ...]

Decoding the YOLO Output

Our YOLO v2 model returns "raw" neural network outputs, not normalized by an activation function. To make sense of them, we’ll need two additional functions:

```python
import numpy as np

def sigmoid(x):
    k = np.exp(-x)
    return 1 / (1 + k)

def softmax(x):
    # Subtracting the maximum keeps np.exp from overflowing
    e_x = np.exp(x - np.max(x))
    return e_x / e_x.sum()
```

Without going into details: sigmoid maps any input to a value in the 0-1 range, and softmax maps an input vector to a vector of values in the 0-1 range that sum to 1.
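The `np.max(x)` subtraction in softmax is worth a short demonstration: softmax does not change when the same constant is subtracted from every input, and the shift keeps `np.exp` from overflowing on large raw outputs. In the sketch below, `naive_softmax` is a hypothetical unshifted version included only for contrast.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def softmax(x):
    e_x = np.exp(x - np.max(x))
    return e_x / e_x.sum()

def naive_softmax(x):
    # Same formula without the max shift; np.exp overflows for large inputs
    e_x = np.exp(x)
    return e_x / e_x.sum()

logits = np.array([1000.0, 1001.0, 1002.0])

with np.errstate(over='ignore', invalid='ignore'):
    print(naive_softmax(logits))  # [nan nan nan]
print(softmax(logits))            # approximately [0.090 0.245 0.665]
print(sigmoid(0.0))               # 0.5
```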

Now we can write our primary decoding function:

```python
def decode_preds(raw_preds: np.ndarray):
    num_classes = len(COCO_CLASSES)
    decoded_preds = []

    for cy in range(GRID_SIZE):
        for cx in range(GRID_SIZE):
            for b in range(BOXES_PER_CELL):
                # Offset of box b's 85 values within the 425 channels
                box_shift = b * (num_classes + 5)

                tx = float(raw_preds[0, box_shift, cy, cx])
                ty = float(raw_preds[0, box_shift + 1, cy, cx])
                tw = float(raw_preds[0, box_shift + 2, cy, cx])
                th = float(raw_preds[0, box_shift + 3, cy, cx])
                ts = float(raw_preds[0, box_shift + 4, cy, cx])

                # Box center in pixels: cell position plus a 0-1 shift within the cell
                x = (float(cx) + sigmoid(tx)) * CELL_SIZE
                y = (float(cy) + sigmoid(ty)) * CELL_SIZE
                # Box size in pixels: anchor size scaled by exp of the raw prediction
                w = np.exp(tw) * ANCHORS[b][0] * CELL_SIZE
                h = np.exp(th) * ANCHORS[b][1] * CELL_SIZE

                box_confidence = sigmoid(ts)

                classes_raw = raw_preds[0, box_shift + 5:box_shift + 5 + num_classes, cy, cx]
                classes_confidence = softmax(classes_raw)

                box_class_idx = np.argmax(classes_confidence)
                box_class_confidence = classes_confidence[box_class_idx]

                combined_confidence = box_confidence * box_class_confidence

                decoded_preds.append([box_class_idx, combined_confidence, x, y, w, h])

    # Most confident predictions first
    return sorted(decoded_preds, key=lambda p: p[1], reverse=True)
```

First, the function iterates over the boxes within each grid cell (the cy, cx, and b loops) and decodes the values for each bounding box (we assume a single image in the batch, hence raw_preds[0,…]). The raw tx, ty, tw, th, and ts values returned by the model are used to calculate the bounding box coordinates (center x, center y, width, and height, all in pixels), box_confidence (the confidence that a given box contains an object), and classes_confidence (a vector with the normalized confidence for each of the 80 COCO classes). From these values, we pick the most likely class for the current box (box_class_idx) and compute its combined_confidence as box_confidence multiplied by the confidence of that class.

Finally, the function returns the list of decoded boxes, each in the form [box_class_idx, combined_confidence, x, y, w, h], sorted by combined confidence in descending order.
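To see these formulas with actual numbers, here is a single box decoded by hand. `decode_box` is a hypothetical helper that repeats the arithmetic of decode_preds for one cell and one anchor; to keep it short, it takes a made-up class confidence directly instead of computing it with softmax. With all raw coordinate values equal to 0, the box lands exactly in the center of its cell and has exactly its anchor's size. Because sigmoid(tx) stays between 0 and 1, the decoded center can never leave its cell.

```python
import math

CELL_SIZE = 32  # 416 / 13
ANCHORS = [[0.57273, 0.677385],
           [1.87446, 2.06253],
           [3.33843, 5.47434],
           [7.88282, 3.52778],
           [9.77052, 9.16828]]

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

def decode_box(cx, cy, b, tx, ty, tw, th, ts, class_prob):
    """Hypothetical helper: decode one box with the same formulas as decode_preds."""
    x = (cx + sigmoid(tx)) * CELL_SIZE              # center x in pixels
    y = (cy + sigmoid(ty)) * CELL_SIZE              # center y in pixels
    w = math.exp(tw) * ANCHORS[b][0] * CELL_SIZE    # width in pixels
    h = math.exp(th) * ANCHORS[b][1] * CELL_SIZE    # height in pixels
    combined = sigmoid(ts) * class_prob             # box confidence x class confidence
    return x, y, w, h, combined

# Cell at column 6, row 4, third anchor (index 2), all raw values 0
x, y, w, h, conf = decode_box(cx=6, cy=4, b=2, tx=0.0, ty=0.0, tw=0.0, th=0.0,
                              ts=0.0, class_prob=0.9)
print(x, y, round(w, 2), round(h, 2), round(conf, 2))  # 208.0 144.0 106.83 175.18 0.45
```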

Let's see if it works on our image from the Open Images dataset:

image = load_and_scale_image('https://c2.staticflickr.com/4/3393/3436245648_c4f76c0a80_o.jpg')

cml_model = ct.models.MLModel('./models/yolov2-coco-9.mlmodel')

preds = cml_model.predict(data={'input.1': image})['218']

decoded_preds = decode_preds(preds)

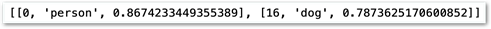

print([p[:3] for p in decoded_preds[:2]])

The model seems to be confident that the picture contains a person and a dog. Let's check whether this is true by drawing the predicted boxes on the image:

```python
from PIL import ImageDraw

def annotate_image(image, preds, min_score=0.5, top=10):
    annotated_image = image.copy()
    draw = ImageDraw.Draw(annotated_image)
    colors = ['red', 'orange', 'yellow', 'green', 'blue', 'white']

    # Each prediction is [class_idx, combined_confidence, xc, yc, w, h]
    for class_id, score, xc, yc, w, h in preds[:top]:
        if score < min_score:
            continue

        # Convert the box center to its top-left corner
        x0 = xc - (w / 2)
        y0 = yc - (h / 2)

        color = colors[class_id % len(colors)]
        label = COCO_CLASSES[class_id]

        draw.rectangle([(x0, y0), (x0 + w, y0 + h)], width=2, outline=color)
        draw.text((x0 + 5, y0 + 5), "{} {:0.2f}".format(label, score), fill=color)

    return annotated_image

annotate_image(image, decoded_preds)
```

Not bad… but what about the duplicate boxes? Nothing is wrong. This is a side effect of how YOLO works: multiple boxes (out of 13 x 13 x 5 = 845 in total) may detect the same object. Raising the minimum confidence score would reduce the duplicates slightly, but for the moment, let's not worry about it. We'll address this soon using an algorithm called Non-Maximum Suppression.
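As a minimal sketch of the thresholding idea, the hypothetical helper `keep_confident` below relies on decode_preds returning its list sorted by confidence, so it can stop at the first entry below the threshold. The sample predictions are made up for illustration. Note that thresholding alone does not remove duplicates: two overlapping boxes that both score above the threshold are both kept.

```python
from itertools import takewhile

def keep_confident(preds, min_score=0.5):
    """Hypothetical helper: return the leading predictions with confidence >= min_score.

    Assumes preds is sorted by confidence in descending order, as decode_preds returns it.
    """
    return list(takewhile(lambda p: p[1] >= min_score, preds))

# Made-up predictions: [class_idx, combined_confidence, x, y, w, h]
sample = [
    [0, 0.91, 200.0, 180.0, 120.0, 300.0],
    [0, 0.74, 205.0, 176.0, 110.0, 310.0],
    [16, 0.62, 300.0, 300.0, 90.0, 80.0],
    [16, 0.31, 296.0, 305.0, 95.0, 70.0],
]
print(len(keep_confident(sample, 0.5)))  # 3
print(len(keep_confident(sample, 0.7)))  # 2
```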

Next Steps

We have successfully decoded the YOLO v2 output, which allows us to visualize the model's predictions. If you don't like the loops in our code, you are right: this is not how we should perform calculations on arrays. We structured the code this way only to make the decoding process easy to follow. In the next article, we'll do the same with array operations, which will allow us to include the decoding logic directly in the model.
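For comparison, here is one possible NumPy sketch of the same decoding without Python loops. It is not necessarily the approach the next article takes. It reuses the constants above, reshapes the output into (5, 85, 13, 13), and applies every formula to all 845 boxes at once. It returns a single (845, 6) array instead of a list, so class indices come back as floats, and boxes with equal scores may appear in a different order than in decode_preds.

```python
import numpy as np

GRID_SIZE = 13
CELL_SIZE = int(416 / GRID_SIZE)
BOXES_PER_CELL = 5
NUM_CLASSES = 80
ANCHORS = np.array([[0.57273, 0.677385],
                    [1.87446, 2.06253],
                    [3.33843, 5.47434],
                    [7.88282, 3.52778],
                    [9.77052, 9.16828]])

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def decode_preds_vectorized(raw_preds):
    """Array-based sketch of decode_preds: rows of [class_idx, combined_confidence,
    x, y, w, h], highest confidence first."""
    # (1, 425, 13, 13) -> (5, 85, 13, 13) for the single image in the batch
    p = raw_preds[0].reshape(BOXES_PER_CELL, NUM_CLASSES + 5, GRID_SIZE, GRID_SIZE)

    # cy[i, j] = i (row), cx[i, j] = j (column); both broadcast over the 5 boxes
    cy, cx = np.meshgrid(np.arange(GRID_SIZE), np.arange(GRID_SIZE), indexing='ij')

    x = (cx + sigmoid(p[:, 0])) * CELL_SIZE
    y = (cy + sigmoid(p[:, 1])) * CELL_SIZE
    w = np.exp(p[:, 2]) * ANCHORS[:, 0, None, None] * CELL_SIZE
    h = np.exp(p[:, 3]) * ANCHORS[:, 1, None, None] * CELL_SIZE
    box_confidence = sigmoid(p[:, 4])

    # Softmax over the class axis (axis 1), shifted by each box's maximum
    logits = p[:, 5:]
    e = np.exp(logits - logits.max(axis=1, keepdims=True))
    class_probs = e / e.sum(axis=1, keepdims=True)

    class_idx = class_probs.argmax(axis=1)
    combined = box_confidence * class_probs.max(axis=1)

    rows = np.stack([class_idx, combined, x, y, w, h], axis=-1).reshape(-1, 6)
    return rows[np.argsort(-rows[:, 1], kind='stable')]

out = decode_preds_vectorized(np.zeros((1, 425, GRID_SIZE, GRID_SIZE)))
print(out.shape)  # (845, 6)
```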

Jarek has two decades of professional experience in software architecture and development, machine learning, business and system analysis, logistics, and business process optimization.

He is passionate about creating software solutions with complex logic, especially with the application of AI.