Here we debug an NLP model exposed via a REST API service using FastAPI and Gunicorn.

Introduction

Container technologies, such as Docker, simplify dependency management and improve portability of your software. In this series of articles, we explore Docker usage in Machine Learning (ML) scenarios.

This series assumes that you are familiar with AI/ML, containerization in general, and Docker in particular.

In the previous article of the series, we exposed NLP inference models via a REST API using FastAPI and Gunicorn with a Uvicorn worker. This allowed us to call our NLP models from a web browser.

In this article, we’ll use Visual Studio Code to debug our service running in the Docker container. You are welcome to download the code used in this article.

Setting Up Visual Studio Code

We are assuming that you are familiar with Visual Studio Code, a very light and flexible code editor. It is available for Windows, macOS, and Linux. Thanks to its extensibility, it supports an endless list of languages and technologies. You can find plenty of tutorials at the official website.

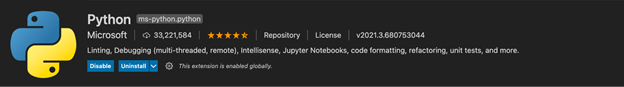

We’ll use it to run and debug our Python service. First, we need to install three extensions:

After installation, we can open our project (created in the previous article). Alternatively, you can download this article’s source code. In the following examples, we are assuming that you chose the latter option.

Container Configuration

We’ll use the same Dockerfile and almost the same docker-compose.yml that we used before. The only change we introduce is the new image name, solely to keep a one-to-one relation between an article and an image name. Our docker-compose.yml looks like this:

version: '3.7'
volumes:
  mluser_cache:
    name: mluser_cache
services:
  mld09_transformers_api_debug:
    build:
      context: '.'
      dockerfile: 'Dockerfile'
      args:
        USERID: ${USERID}
    image: 'mld09_transformers_api_debug'
    volumes:
      - mluser_cache:/home/mluser/.cache
    ports:
      - '8000:8000'
    user: '${USERID}:${GROUPID}'

Configuring Project to Work in Docker

Now, we need to instruct Visual Studio Code on how to run our container. We do it with two new files in the .devcontainer folder (don’t miss the dot at the beginning).

The first file, docker-compose-overwrites.yml, defines parts of our docker-compose.yml that we want to add or change when working with our container from Visual Studio Code. Because during development we’ll want to edit our local code, we need a new volume to map local files to the container’s folder.

Additionally, we want to overwrite the command statement because in this scenario we don't want to always start our service together with the container. Instead, we want an endless loop keeping our container alive.

It leads us to the following docker-compose-overwrites.yml:

version: '3.7'
services:
  mld09_transformers_api_debug:
    volumes:
      - .:/home/mluser/workspace:cached
    command: /bin/sh -c "while sleep 1000; do :; done"
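
How the override file changes the effective service definition can be illustrated with a small Python model. This is only a simplified sketch of the merge behavior, not Docker Compose's actual implementation: the `merge_service` and `container_path` helpers are hypothetical names, the volume parsing assumes the short `source:target[:mode]` syntax used in this article, and nested mappings such as `build` are not handled.

```python
from copy import deepcopy


def container_path(volume: str) -> str:
    """Return the container-side path of a short-syntax volume entry."""
    return volume.split(":")[1]


def merge_service(base: dict, override: dict) -> dict:
    """Simplified model of combining a service with its override (assumption)."""
    merged = deepcopy(base)
    for key, value in override.items():
        if key == "volumes":
            # Volumes are combined by container path; the override wins on a clash.
            by_path = {container_path(v): v for v in merged.get("volumes", [])}
            by_path.update({container_path(v): v for v in value})
            merged["volumes"] = list(by_path.values())
        elif key == "ports":
            # Port lists from both files are concatenated.
            merged["ports"] = merged.get("ports", []) + list(value)
        else:
            # Single-value options such as command or image are replaced.
            merged[key] = value
    return merged


# The relevant parts of docker-compose.yml and docker-compose-overwrites.yml.
base = {
    "image": "mld09_transformers_api_debug",
    "volumes": ["mluser_cache:/home/mluser/.cache"],
    "ports": ["8000:8000"],
}
override = {
    "volumes": [".:/home/mluser/workspace:cached"],
    "command": '/bin/sh -c "while sleep 1000; do :; done"',
}

effective = merge_service(base, override)
print(effective["volumes"])
print(effective["command"])
```

In this model the service ends up with both volumes (the cache and the mapped workspace), keeps its port mapping, and starts the keep-alive loop instead of whatever the image would run by default.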

Next, we need a configuration file, devcontainer.json:

{
    "name": "Inference Service NLP Debug Container",
    "dockerComposeFile": [
        "../docker-compose.yml",
        "docker-compose-overwrites.yml"
    ],
    "service": "mld09_transformers_api_debug",
    "workspaceFolder": "/home/mluser/workspace",
    "settings": {
        "terminal.integrated.shell.linux": null
    },
    "extensions": ["ms-python.python"],
    "forwardPorts": [8000],
    "remoteUser": "mluser"
}

Most options are pretty self-explanatory. Among other things, we define the docker-compose files to load (and in which order). In the workspaceFolder attribute, we define which of the container’s folders will be opened by our Visual Studio Code instance as its workspace. The extensions attribute ensures installation of the Python extension, which allows us to debug code. The file ends with the ports to forward to the host machine and the name of the user selected to run the container. You can have a look at the full reference of options available in this file.
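
Because the paths in dockerComposeFile are relative to the location of devcontainer.json, a wrong `../` is an easy mistake to make. The following Python sketch lists the referenced files in load order so they can be checked before reopening the project in the container. The `compose_files` helper is a hypothetical name introduced here, and the sketch assumes the file is plain JSON without comments, as in the listing above.

```python
import json
from pathlib import Path
from typing import List


def compose_files(devcontainer_json: Path) -> List[Path]:
    """Return the Compose files named in devcontainer.json, in load order."""
    config = json.loads(devcontainer_json.read_text(encoding="utf-8"))
    entries = config["dockerComposeFile"]
    if isinstance(entries, str):
        # A single file may be given as a plain string instead of a list.
        entries = [entries]
    # Paths are resolved relative to the folder containing devcontainer.json.
    base_dir = devcontainer_json.parent
    return [(base_dir / entry).resolve() for entry in entries]


# Only runs the check when started from the project root.
config_path = Path(".devcontainer/devcontainer.json")
if config_path.exists():
    for path in compose_files(config_path):
        print(path, "found" if path.exists() else "MISSING")
```

Run from the project root, it prints one line per Compose file, with the base file first and the override second.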

Starting Container

You might remember from our previous series that, when you map the local folder as a container’s volume, you need to properly match your local user and container’s user. When working with containers, Visual Studio Code on Windows and macOS attempts to do it for you automatically, as described here.

You still need to handle it yourself on Linux. We will do it by setting the required environment variables when starting the Visual Studio Code environment:

$ USERID=$(id -u) GROUPID=$(id -g) code .

Do this from the folder containing the project Dockerfile.

Note that you'll also need to use this method to set Visual Studio Code's environment variables on macOS if you have the mluser_cache volume created using the code from the previous article. This will ensure that files written to the volume by running the earlier container will be accessible to the current container user.

If you think that you could add the USERID and GROUPID environment variables to a separate file and use the env_file setting in the docker-compose.yml, no – you can’t. We need these values when building the image but env_file values propagate only to a running container.

We are now ready to start our container. To do it, we click the Open a remote window button in the bottom left corner:

We then select the Reopen in Container option from the drop-down menu:

And... that’s it. Now we wait for our image to be built, our container to start, and Visual Studio Code to install all its dependencies in it.

When the job completes, we get a standard workspace view. The only way to make sure that we really are "inside" the container is to verify the paths visible in the terminal window and the Dev Container name in the bottom left corner:

Debugging Code in the Container

Now we can work with our application in the same way as we did locally, including debugging.

There are at least two ways to debug our FastAPI service. In the first one we add code to start the development server directly to our main.py script. The second one requires us to define a dedicated debug configuration for the Uvicorn server module.

Let’s try the first approach. All we need is to add a few lines of code to the end of the main.py file from the previous article:

if __name__ == "__main__":
    import uvicorn
    uvicorn.run(app, host="0.0.0.0", port=8000)

With this change in place, we simply run our code using the default Python: Current File debug configuration.
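
The second approach mentioned earlier, a dedicated debug configuration for the Uvicorn module, leaves main.py unchanged. As a rough sketch, the Python snippet below prints a configuration that could be saved as .vscode/launch.json. Several details are assumptions: the configuration name is made up, `main:app` assumes that main.py sits in the folder the debugger starts in, and the `"type"` value depends on the version of the Python extension (`"python"` in older releases, `"debugpy"` in newer ones).

```python
import json

# Debug configuration that starts Uvicorn as a module under the debugger.
uvicorn_debug_config = {
    "name": "Uvicorn: main:app",  # hypothetical label shown in the debug drop-down
    "type": "python",             # assumption: newer extension versions use "debugpy"
    "request": "launch",
    "module": "uvicorn",          # equivalent to running: python -m uvicorn ...
    "args": ["main:app", "--host", "0.0.0.0", "--port", "8000"],
}

launch = {"version": "0.2.0", "configurations": [uvicorn_debug_config]}

# Print the JSON document to paste into .vscode/launch.json.
print(json.dumps(launch, indent=4))
```

With such a configuration selected in the Run and Debug view, the service starts on the same host and port as in the first approach, so breakpoints and port forwarding should work the same way.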

When you hover over the IP and port of the running service (http://0.0.0.0:8000) in the logs above, you will notice a popup: "Follow link using forwarded port". It is really important because the port of our service is forwarded to a random one when running within Visual Studio Code. When we click the popup, we go to the correct address, though. When we manually add the /docs suffix to it, we get to the OpenAPI interface as before:

Now, when we set a breakpoint in our code and hit it, the debugger behaves as we expect:

Summary

We have successfully configured Visual Studio Code to edit, run, and debug our code in a Docker container. Thanks to this setup, we can easily use the same environment on our local development machine, on an on-premises server, or in the cloud. In the following article, we will publish our NLP API service to Azure using Azure Container Instances. Stay tuned!

Jarek has two decades of professional experience in software architecture and development, machine learning, business and system analysis, logistics, and business process optimization.

He is passionate about creating software solutions with complex logic, especially with the application of AI.
