Here we’ll discuss CNNs, then design one and implement it in Python using Keras.

Introduction

If you’ve seen the movie Minority Report, you probably remember the scene where Tom Cruise walks into a Gap store. A retinal scanner reads his eyes and plays a customized ad for him. Well, this is 2020. We don’t need retinal scanners, because we have Artificial Intelligence (AI) and Machine Learning (ML)!

In this series, we’ll show you how to use Deep Learning to perform facial recognition, and then – based on the face that was recognized – use a Neural Network Text-to-Speech (TTS) engine to play a customized ad. You are welcome to browse the code here on CodeProject or download the .zip file to browse the code on your own machine.

We assume that you are familiar with the basic concepts of AI/ML, and that you can find your way around Python.

In this article, we’ll discuss CNNs, then design one and implement it in Python using Keras.

What is a CNN?

A CNN is a type of Neural Network (NN) frequently used for image classification tasks, such as face recognition, and for any other problem where the input has a grid-like topology. In a convolutional layer, each node is connected only to a small local region of the previous layer, and the same filter weights are shared across the whole image; in other words, CNNs are not fully connected NNs. This greatly reduces the number of parameters, which helps prevent the overfitting that fully connected NNs are prone to, not to mention the very slow convergence that results from having too many connections in an NN.
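A quick way to see the difference is to count trainable parameters. The sketch below applies the standard formulas (weights plus biases, the same counts Keras' model.summary() reports for these layer types) to a 320 by 243 grayscale image, the size used later in this article. It compares a Dense layer with 32 units connected to every pixel against a Conv2D layer with 32 filters of size 3 by 3. The helper names dense_params and conv2d_params are our own.

```python
def dense_params(inputs, units):
    # One weight per input-unit connection, plus one bias per unit
    return inputs * units + units


def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter has kernel_h * kernel_w * in_channels shared weights and one bias
    return kernel_h * kernel_w * in_channels * filters + filters


image_pixels = 320 * 243 * 1  # grayscale image flattened into one vector
print(dense_params(image_pixels, 32))  # 2488352
print(conv2d_params(3, 3, 1, 32))      # 320
```

Because the convolutional filter is reused at every position of the image, its parameter count does not depend on the image size at all.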

The concept of CNN relies on a mathematical operation known as convolution, which is very common in the field of Digital Signal Processing. Convolution is an operation on two functions that produces a third function, which expresses how much the two functions overlap as one is shifted over the other. In a CNN, convolution is performed by sliding a filter (a.k.a. kernel) across the image and, at each position, summing the element-wise products of the filter and the image patch it covers.
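For reference, here is the continuous definition of convolution, followed by the discrete 2-D form that CNN layers compute for an image I and a kernel K. Strictly speaking, the second formula is cross-correlation (the kernel is not flipped); deep learning libraries, Keras included, implement this variant but still call it convolution.

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau$$

$$S(i, j) = \sum_{m} \sum_{n} I(i + m,\, j + n)\, K(m, n)$$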

In face recognition, the convolution operation allows us to detect different features in the image. The different filters can detect the vertical and horizontal edges, texture, curves, and other image features. This is why one of the first layers in any CNN is a convolutional layer.
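The sketch below shows how a single filter detects a feature. It slides a 3 by 3 vertical-edge kernel over a tiny image that is dark on the left and bright on the right. The kernel values are an illustrative choice, since a CNN learns its filter values during training. conv2d_valid is our own helper and uses stride 1, no padding, and no kernel flip, as CNN layers do.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and sum element-wise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# 5x5 image: two dark columns (0) followed by three bright columns (1)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Vertical-edge kernel: responds when brightness increases from left to right
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # every row is [3. 3. 0.]
```

Windows that straddle the dark-to-bright edge produce a strong response (3), while the uniformly bright region produces 0.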

Another layer common in CNNs is the pooling layer. Pooling reduces the size of the image representation, which reduces the number of parameters and, ultimately, the computational effort. The most common type of pooling is "max" pooling. It slides a window across the image, much like the filter in the convolution operation, and at every position keeps the maximum value among the cells the window covers. These maximum values form a new, smaller representation of the image.
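Here is a NumPy sketch of 2 by 2 max pooling. max_pool2d is our own helper. Like Keras' MaxPooling2D with its default settings, it uses non-overlapping windows and drops any leftover row or column.

```python
import numpy as np


def max_pool2d(x, size=2):
    """Non-overlapping max pooling over a 2-D array."""
    h = x.shape[0] // size * size
    w = x.shape[1] // size * size
    x = x[:h, :w]  # drop leftover rows/columns that do not fill a window
    # Split into (size x size) blocks and keep the maximum of each block
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


x = np.array([[1, 3, 2, 0],
              [4, 2, 1, 5],
              [0, 1, 7, 2],
              [3, 2, 1, 1]])
print(max_pool2d(x))  # [[4 5]
                      #  [3 7]]
```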

The most common CNN architectures start with a convolutional layer, followed by an activation layer and then a pooling layer (a block that is often repeated), and end with a traditional fully connected network such as a multi-layer NN. In Keras, a model whose layers are stacked one after the other like this is called a Sequential model. Why a fully connected network at the end? To learn non-linear combinations of the features that the convolution and pooling layers extracted from the image.

Design a CNN

Here is the architecture we’ll implement in our CNN:

- Input layer – accepts NumPy arrays of shape (img_width, img_height, 1); "1" because we are dealing with grayscale images (a single channel); for RGB images, the shape would be (img_width, img_height, 3)

- Conv2D layer – 32 filters, filter size of 3

- Activation layer – uses the ReLU function; a nonlinear activation is required for the network to learn non-linear relationships

- Conv2D layer – 32 filters, filter size of 3, stride of 3

- Activation Layer using the ReLU function

- MaxPooling2D layer – applies the (2, 2) pooling window

- Dropout layer, at 25% – helps prevent overfitting by randomly setting 25% of the previous layer's outputs to 0 during training; a.k.a. the dilution technique

- Conv2D layer – 64 filters, filter size of 3

- Activation layer using the ReLU function

- Conv2D layer – 64 filters, filter size of 3, stride of 3

- Activation layer using the ReLU function

- MaxPooling2D layer – applies the (2, 2) pooling window

- Dropout layer, at 25%

- Flatten layer – flattens the multi-dimensional feature maps into a one-dimensional vector that the next (Dense) layer can take as input

- Dense layer – represents a fully connected traditional NN

- Activation layer using the ReLU function

- Dropout layer, at 50%

- Dense layer, with the number of nodes matching the number of classes in the problem – 15 for the Yale dataset

- Activation layer using the softmax function, which turns the outputs into a probability for each class

The above architecture is fairly common; the layer parameters were fine-tuned experimentally.
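To see how these layers shrink the image, the sketch below traces the feature-map size through both convolutional blocks using Keras' output-size rules. It assumes a 320 by 243 input (the size used later for the Yale images). It also assumes padding='same' on the first convolution of each block and the default 'valid' padding with a stride of 3 on the second one, as in the ConvolutionalModel code. Activation and Dropout layers do not change the shape, so they are skipped. The helper names are our own.

```python
import math


def conv_out(n, kernel, stride=1, padding="valid"):
    """Output length along one axis of a Conv2D layer (Keras conventions)."""
    if padding == "same":
        return math.ceil(n / stride)
    return (n - kernel) // stride + 1


def pool_out(n, pool=2):
    """MaxPooling2D with default strides (equal to the pool size) and 'valid' padding."""
    return (n - pool) // pool + 1


def feature_map_sizes(rows, cols):
    """Trace (rows, cols, channels) through the two convolutional blocks."""
    sizes = []
    for filters in (32, 64):
        # Conv2D, 3x3 kernel, padding='same', stride 1: size unchanged
        rows, cols = conv_out(rows, 3, padding="same"), conv_out(cols, 3, padding="same")
        # Conv2D, 3x3 kernel, stride 3, default 'valid' padding
        rows, cols = conv_out(rows, 3, stride=3), conv_out(cols, 3, stride=3)
        # MaxPooling2D with a (2, 2) window
        rows, cols = pool_out(rows), pool_out(cols)
        sizes.append((rows, cols, filters))
    return sizes


sizes = feature_map_sizes(320, 243)
rows, cols, channels = sizes[-1]
flattened = rows * cols * channels  # length of the vector produced by Flatten
print(sizes, flattened)  # [(53, 40, 32), (8, 6, 64)] 3072
```

The strided convolutions do most of the downsampling here, which keeps the input to the first Dense layer small.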

Implement the CNN

Now let’s implement our CNN architecture, the set of layers we’ve selected, in code. To create an easy-to-extend solution, we’ll first define an abstract base class, MLModel, with a set of abstract methods that every model must implement:

```python
import abc
from abc import abstractmethod


class MLModel(metaclass=abc.ABCMeta):
    """Abstract base class for the models in this series."""

    def __init__(self, dataSet=None):
        if dataSet is not None:
            # Copy the training and validation data from the data set
            self.objects = dataSet.objects
            self.labels = dataSet.labels
            self.obj_validation = dataSet.obj_validation
            self.labels_validation = dataSet.labels_validation
            self.number_labels = dataSet.number_labels
            self.n_classes = dataSet.n_classes
        # Let the subclass build its network
        self.init_model()

    @abstractmethod
    def init_model(self):
        pass

    @abstractmethod
    def train(self):
        pass

    @abstractmethod
    def predict(self, object):
        pass

    @abstractmethod
    def evaluate(self):
        # Shared implementation: subclasses call it through super().evaluate()
        score = self.get_model().evaluate(self.obj_validation, self.labels_validation, verbose=0)
        print("%s: %.2f%%" % (self.get_model().metrics_names[1], score[1] * 100))

    @abstractmethod
    def get_model(self):
        pass
```

In our case, dataSet is an instance of the FaceDataSet class described in the previous article of this series. The ConvolutionalModel class, which inherits from MLModel and implements all of its abstract methods, contains our CNN architecture. Here it is:

```python
from keras.models import Sequential
from keras.layers import Activation, Convolution2D, Dense, Dropout, Flatten, MaxPooling2D

# constant (settings) and Common (helpers) come from this series' source code;
# the module names below are assumptions, adjust them to match your download
import constant
from common import Common


class ConvolutionalModel(MLModel):  # MLModel is the abstract base class defined above

    def __init__(self, dataSet=None):
        if dataSet is None:
            raise ValueError("DataSet is required in this model")
        # Must be set before super().__init__(), which calls init_model()
        self.shape = (constant.IMG_WIDTH, constant.IMG_HEIGHT, 1)
        super().__init__(dataSet)
        self.cnn.compile(loss=constant.LOSS_FUNCTION,
                         optimizer=Common.get_sgd_optimizer(),
                         metrics=[constant.METRIC_ACCURACY])

    def init_model(self):
        self.cnn = Sequential()
        # Block 1: two 32-filter convolutions, max pooling, dropout
        self.cnn.add(Convolution2D(32, 3, padding=constant.PADDING_SAME, input_shape=self.shape))
        self.cnn.add(Activation(constant.RELU_ACTIVATION_FUNCTION))
        # Stride of 3 made explicit (older standalone Keras 2.x releases may read
        # Convolution2D(32, 3, 3) as a 3x3 kernel using the legacy Keras 1 argument order)
        self.cnn.add(Convolution2D(32, 3, strides=3))
        self.cnn.add(Activation(constant.RELU_ACTIVATION_FUNCTION))
        self.cnn.add(MaxPooling2D(pool_size=(2, 2)))
        self.cnn.add(Dropout(constant.DROP_OUT_O_25))
        # Block 2: two 64-filter convolutions, max pooling, dropout
        self.cnn.add(Convolution2D(64, 3, padding=constant.PADDING_SAME))
        self.cnn.add(Activation(constant.RELU_ACTIVATION_FUNCTION))
        self.cnn.add(Convolution2D(64, 3, strides=3))
        self.cnn.add(Activation(constant.RELU_ACTIVATION_FUNCTION))
        self.cnn.add(MaxPooling2D(pool_size=(2, 2)))
        self.cnn.add(Dropout(constant.DROP_OUT_O_25))
        # Fully connected classifier
        self.cnn.add(Flatten())
        self.cnn.add(Dense(constant.NUMBER_FULLY_CONNECTED))
        self.cnn.add(Activation(constant.RELU_ACTIVATION_FUNCTION))
        self.cnn.add(Dropout(constant.DROP_OUT_0_50))
        self.cnn.add(Dense(self.n_classes))  # one output per class
        self.cnn.add(Activation(constant.SOFTMAX_ACTIVATION_FUNCTION))
        self.cnn.summary()

    def train(self, n_epochs=20, batch=32):
        self.cnn.fit(self.objects, self.labels,
                     batch_size=batch, epochs=n_epochs, shuffle=True)

    def get_model(self):
        return self.cnn

    def predict(self, image):
        image = Common.to_float(image)    # normalize the pixel values
        result = self.cnn.predict(image)  # one probability per class
        print(result)
        return result

    def evaluate(self):
        super().evaluate()
```

In the constructor, we set the self.shape variable, which defines the shape of the input layer. It must be set before calling the parent constructor, because init_model() reads it. In our case, with the Yale dataset images 320 pixels tall and 243 pixels wide, self.shape=(320, 243, 1).

We then call the parent constructor with super().__init__(dataSet), which copies all the dataset-related variables and calls the init_model() method to build the model.

Finally, we call the compile() method, which configures the model for training. The loss parameter sets the objective (loss) function, which is minimized during training. The metrics parameter lists the metrics used to evaluate the model during training and testing; here, accuracy. The optimizer parameter defines how the weights are updated to reduce the loss; Common.get_sgd_optimizer() supplies a Stochastic Gradient Descent (SGD) optimizer, a variant of Gradient Descent, the most common optimization method.
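The NumPy sketch below illustrates on a toy 3-class example what the loss and the optimizer do. It assumes constant.LOSS_FUNCTION is categorical cross-entropy, the usual pairing with a softmax output (the constant's actual value is not shown in this article). For simplicity, it applies one plain gradient-descent step directly to the output scores. In the real network, backpropagation turns this gradient into updates for every weight, and SGD computes it on mini-batches.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def cross_entropy(probs, one_hot):
    return -np.sum(one_hot * np.log(probs))


logits = np.array([2.0, 1.0, 0.1])  # toy raw outputs of the last Dense layer
target = np.array([0.0, 1.0, 0.0])  # one-hot label: the true class is 1
learning_rate = 0.5

probs = softmax(logits)
loss_before = cross_entropy(probs, target)

# For softmax followed by cross-entropy, the gradient w.r.t. the logits is probs - target
grad = probs - target
logits = logits - learning_rate * grad  # one gradient-descent step
loss_after = cross_entropy(softmax(logits), target)
print(loss_before, loss_after)  # the loss decreases
```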

Our CNN model is a Sequential model, with the layers added in the order the architecture requires. The train() method calls the model's fit() method to train the CNN. fit() receives the training data, the correct labels for that data, and optional parameters such as the batch size, the number of epochs to run, and whether to shuffle the training data before each epoch.
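Conceptually, fit(..., batch_size=32, shuffle=True) shuffles the training samples at the start of every epoch and then processes them in mini-batches, updating the weights once per batch. The NumPy generator below is a simplified sketch of that batching logic, not Keras' actual implementation; iterate_minibatches is a hypothetical helper name.

```python
import numpy as np


def iterate_minibatches(x, y, batch_size=32, shuffle=True, seed=0):
    """Yield (x_batch, y_batch) pairs that cover the data once (one epoch)."""
    indices = np.arange(len(x))
    if shuffle:
        np.random.default_rng(seed).shuffle(indices)
    for start in range(0, len(x), batch_size):
        batch = indices[start:start + batch_size]
        yield x[batch], y[batch]


x = np.zeros((100, 4))  # 100 dummy samples
y = np.arange(100)      # dummy labels
batches = list(iterate_minibatches(x, y, batch_size=32))
print([len(labels) for _, labels in batches])  # [32, 32, 32, 4]
```

The last batch is smaller when the number of samples is not a multiple of the batch size.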

Train the CNN

Now the code is ready – time to train our CNN. Let’s instantiate the ConvolutionalModel class, train on the Yale dataset, and call the evaluate method.

```python
cnn = ConvolutionalModel(dataSet)  # dataSet: a FaceDataSet instance (see the previous article)
cnn.train(n_epochs=50)
cnn.evaluate()
```

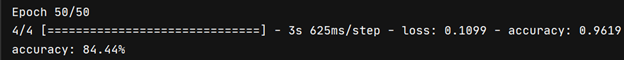

After training for 50 epochs, we reached an accuracy of almost 85% on the validation images.

This means that our CNN correctly identified which of the 15 subjects in the dataset appears in about 85% of the held-out images. Not bad for a brief exercise, eh?

Now that our CNN is trained, we can use it to classify new data, meaning new face images, with the predict(image) method of the ConvolutionalModel class. The call looks like the one below, and the input must meet a few assumptions.

```python
import numpy as np

cnn.predict(np.expand_dims(image, axis=0))
```

- First, the input image needs to have the same dimensions (shape) as the input layer of the trained CNN.

- Second, it should be the same type of input, i.e. a matrix of pixel values. The predict() method normalizes the data, so there is no need to provide a normalized pixel matrix.

- Third, we need to add a leading dimension to the face image, because the trained CNN expects a batch of samples: a 4-D array whose first dimension is the number of samples. NumPy's expand_dims() method does this.

- Fourth, the input is assumed to be a face image. For a larger picture, the face detection method described in the previous articles can be used to extract the face first.
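Here is a minimal sketch of preparing a single grayscale face for predict(). to_model_input is a hypothetical helper. It assumes the face has already been cropped and resized to the input layer's size and leaves normalization to predict(). It adds the channel dimension when the image is a plain 2-D pixel matrix, and then the leading batch dimension.

```python
import numpy as np


def to_model_input(face):
    """Reshape one grayscale face into a batch of one (channels_last layout)."""
    face = np.asarray(face)
    if face.ndim == 2:                   # (rows, cols) pixel matrix
        face = face[..., np.newaxis]     # -> (rows, cols, 1)
    return np.expand_dims(face, axis=0)  # -> (1, rows, cols, 1)


face = np.zeros((320, 243), dtype=np.uint8)  # placeholder pixel matrix
batch = to_model_input(face)
print(batch.shape)  # (1, 320, 243, 1)
```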

Finally, the output of the predict() method can be seen in the previous figure. For each of the possible classes, or individuals (15 for the trained dataset), the method outputs the probability that the face belongs to that class. In this case, the highest probability is for class 4, which is precisely the person shown in the input face image.
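To read the predicted class programmatically rather than from the printed array, we can take the index of the highest probability. The sketch below uses illustrative probabilities (not results from this article) and a helper of our own, top_classes. NumPy indices start at 0, so how an index maps to a subject depends on how the labels were encoded in FaceDataSet.

```python
import numpy as np


def top_classes(probabilities, k=3):
    """Return the k most likely (class_index, probability) pairs for one sample."""
    probs = np.asarray(probabilities).ravel()
    order = np.argsort(probs)[::-1][:k]  # indices sorted by descending probability
    return [(int(i), float(probs[i])) for i in order]


# Illustrative output of predict() for a 5-class problem (one sample)
result = np.array([[0.05, 0.10, 0.02, 0.03, 0.80]])
print(top_classes(result, k=2))  # [(4, 0.8), (1, 0.1)]
```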

Next Step?

Now we know how to build our own CNN from scratch. In the next article, we’ll investigate an alternative approach: using a pre-trained model. We’ll take a CNN that was previously trained for face recognition on a dataset with millions of images and adapt it to solve our problem. Stay tuned!