Here we use a TensorFlow Lite interpreter to examine an image and produce its output.

This is the third article in a series on using neural networks with TensorFlow Lite on Android. Part 2 of this series ended with creating a TensorFlow Lite model from a pretrained model. In this part, we will create an Android application and import that model into it. You will need the .tflite file created in the previous part (yolo.tflite).

The flow of the application will be as follows:

- An image is selected for analysis.

- The image is resized to match the requirements of the TensorFlow Lite model being used.

- An input buffer is made from the image.

- A TensorFlow Lite interpreter with optional delegates is instantiated:

  - A GPU Delegate will run some of the calculations on the graphics hardware.

  - An NNAPI Delegate (Android 8.1 and later) may run on the GPU, a DSP, or a Neural Processing Unit (NPU).

- An output buffer is instantiated.

- The interpreter runs the model against the input and places the results in the output.

The source bitmap for the application can be acquired in a number of different ways: it can be loaded from the file system, taken with the device's camera, downloaded from a network, or acquired through other means. As long as the image can be loaded as a bitmap, the rest of the code presented here can easily be adapted to it. In this example program, the source image comes from the system's picture chooser.

Create a new Android application using the Empty Activity template. After the application is created, there are a few configuration steps to perform. First, add references to TensorFlow Lite so that the application has the libraries it needs: the TensorFlow Lite runtime (which also contains the NNAPI delegate), the TensorFlow Lite GPU delegate, and the TensorFlow Lite Support library. Open the app module's build.gradle and add the following to the dependencies section:

```groovy
implementation 'org.tensorflow:tensorflow-lite:0.0.0-nightly'
implementation 'org.tensorflow:tensorflow-lite-gpu:0.0.0-nightly'
implementation 'org.tensorflow:tensorflow-lite-support:0.0.0-nightly'
```

Save the changes and build the application. If you receive an error about the required version of the NDK not being present, refer to Part 1 of this series. Once the application builds, one more change to build.gradle is needed: Android Studio must be told not to compress .tflite files, because the model is memory-mapped from the assets when it is loaded, and a compressed asset cannot be memory-mapped. In the android section of the file, add the following lines:

```groovy
aaptOptions {
    noCompress "tflite"
}
```

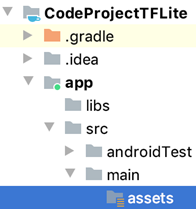

The .tflite file goes into the project's "assets" folder. This folder doesn't exist in a new project; create it within app/src/main.

Copy the file yolo.tflite to the assets folder.

We will start with an application that lets the user select an image and displays it. The activity_main.xml file needs only two elements for this: a button that opens the image chooser and an ImageView.

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <ImageView
        android:id="@+id/selectedImageView"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:layout_marginBottom="64dp"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

    <Button
        android:id="@+id/selectImageButton"
        android:text="@string/button_select_image"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_marginTop="8dp"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toBottomOf="@+id/selectedImageView" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

The application logic goes in MainActivity.java. To reference the image view from code, add a field named selectedImageView:

```java
ImageView selectedImageView;
```

In onCreate(), add a line after setContentView() that assigns the ImageView from the inflated layout to selectedImageView:

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    selectedImageView = findViewById(R.id.selectedImageView);
}
```

The button defined in the layout will trigger the function that opens the image chooser.

```java
final int SELECT_PICTURE = 1;

public void onSelectImageButtonClicked(View view) {
    Intent intent = new Intent(Intent.ACTION_GET_CONTENT);
    intent.setType("image/*");
    Intent chooser = Intent.createChooser(intent, "Choose a Picture");
    startActivityForResult(chooser, SELECT_PICTURE);
}
```

When the user activates this function, the system's image chooser opens. After the user selects an image, control returns to the application. To retrieve the selection, the activity overrides onActivityResult(). The URI of the selected image is in the Intent passed to this method.

```java
@Override
public void onActivityResult(int reqCode, int resultCode, Intent data) {
    super.onActivityResult(reqCode, resultCode, data);
    // data can be null if the chooser returns without a selection
    if (resultCode == RESULT_OK && reqCode == SELECT_PICTURE && data != null) {
        Uri selectedUri = data.getData();
        selectedImageView.setImageURI(selectedUri);
    }
}
```

The button defined in activity_main.xml has not yet been attached to any code. Add the following line to the button’s definition:

```xml
android:onClick="onSelectImageButtonClicked"
```

If you run the application now, you'll see that it can load and display an image. Next, we want to pass this image through a TensorFlow Lite interpreter, so let's put the code in place for that. There are various implementations of the YOLO algorithm. The one used here expects the image to be divided into 13 columns and 13 rows, where each cell of this grid is 32x32 pixels, so the input image is 416x416 pixels (13 * 32 = 416). These values are represented by the constants added to MainActivity.java, along with a constant holding the name of the .tflite file to load and a field holding the TensorFlow Lite interpreter:

```java
// Must match the name of the file copied into the assets folder
final String TF_MODEL_NAME = "yolo.tflite";
final int IMAGE_SEGMENT_ROWS = 13;
final int IMAGE_SEGMENT_COLS = 13;
final int IMAGE_SEGMENT_WIDTH = 32;
final int IMAGE_SEGMENT_HEIGHT = 32;
final int IMAGE_WIDTH = IMAGE_SEGMENT_COLS * IMAGE_SEGMENT_WIDTH;    // 416
final int IMAGE_HEIGHT = IMAGE_SEGMENT_ROWS * IMAGE_SEGMENT_HEIGHT;  // 416
Interpreter tfLiteInterpreter;
```
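Because each grid cell is a fixed 32x32 pixels, moving between pixel coordinates and grid cells is plain integer arithmetic. The following standalone sketch restates the constants and shows which cell a pixel falls into; YoloGrid, columnOf, rowOf and cellOrigin are hypothetical helper names, not part of TensorFlow Lite.

```java
// Hypothetical helper illustrating the grid constants; not part of TensorFlow Lite.
final class YoloGrid {
    static final int IMAGE_SEGMENT_ROWS = 13;
    static final int IMAGE_SEGMENT_COLS = 13;
    static final int IMAGE_SEGMENT_WIDTH = 32;
    static final int IMAGE_SEGMENT_HEIGHT = 32;
    static final int IMAGE_WIDTH = IMAGE_SEGMENT_COLS * IMAGE_SEGMENT_WIDTH;    // 416
    static final int IMAGE_HEIGHT = IMAGE_SEGMENT_ROWS * IMAGE_SEGMENT_HEIGHT;  // 416

    private YoloGrid() {}

    /** Returns the 0-based grid column containing pixel x of the 416x416 input. */
    static int columnOf(int x) {
        if (x < 0 || x >= IMAGE_WIDTH) {
            throw new IllegalArgumentException("x out of range: " + x);
        }
        return x / IMAGE_SEGMENT_WIDTH;
    }

    /** Returns the 0-based grid row containing pixel y of the 416x416 input. */
    static int rowOf(int y) {
        if (y < 0 || y >= IMAGE_HEIGHT) {
            throw new IllegalArgumentException("y out of range: " + y);
        }
        return y / IMAGE_SEGMENT_HEIGHT;
    }

    /** Returns the top-left pixel {x, y} of the cell at (row, col). */
    static int[] cellOrigin(int row, int col) {
        return new int[] { col * IMAGE_SEGMENT_WIDTH, row * IMAGE_SEGMENT_HEIGHT };
    }
}
```

For example, pixel x = 200 lies in column 6 (200 / 32 = 6 with integer division), and the cell at row 12, column 6 starts at pixel (192, 384).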

There are several options for resizing the image. I'm going to use the ImageProcessor from the TensorFlow Lite Support library. An ImageProcessor is built with a list of the operations to apply to an image. When it is given a TensorImage, it performs those operations on the image and returns a new TensorImage ready for further processing.

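```java
// At the top of MainActivity.java:
import android.graphics.Bitmap;
import org.tensorflow.lite.DataType;
import org.tensorflow.lite.support.image.ImageProcessor;
import org.tensorflow.lite.support.image.TensorImage;
import org.tensorflow.lite.support.image.ops.ResizeOp;

// In MainActivity:
void processImage(Bitmap sourceImage) {
    // Resize the bitmap to the 416x416 input that the model expects
    ImageProcessor imageProcessor =
            new ImageProcessor.Builder()
                    .add(new ResizeOp(IMAGE_HEIGHT, IMAGE_WIDTH, ResizeOp.ResizeMethod.BILINEAR))
                    .build();

    TensorImage tImage = new TensorImage(DataType.FLOAT32);
    tImage.load(sourceImage);
    tImage = imageProcessor.process(tImage);

    //...
}
```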
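TensorImage hides the layout of the input buffer. As a rough illustration of what a FLOAT32 input of shape [1, 416, 416, 3] looks like, here is a standalone sketch that packs ARGB pixel values (the 0xAARRGGBB format returned by Bitmap.getPixels()) into a direct, native-order ByteBuffer, one float per red, green and blue channel. InputBufferSketch and toFloatRgbBuffer are hypothetical names, and the scale parameter is an assumption of this sketch: 1.0f keeps the raw 0-255 channel values, while some converted YOLO models expect values in [0, 1] (a scale of 1/255f), so check what your model expects.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical sketch of a manual FLOAT32 RGB input buffer; not the Support library's code.
final class InputBufferSketch {
    private InputBufferSketch() {}

    /**
     * @param argbPixels row-major pixels in 0xAARRGGBB format
     * @param scale      multiplier applied to each 0-255 channel value
     */
    static ByteBuffer toFloatRgbBuffer(int[] argbPixels, int width, int height, float scale) {
        if (argbPixels.length != width * height) {
            throw new IllegalArgumentException("Expected " + (width * height) + " pixels");
        }
        // 3 channels per pixel, 4 bytes per float; TensorFlow Lite reads direct
        // buffers in native byte order.
        ByteBuffer buffer = ByteBuffer.allocateDirect(width * height * 3 * Float.BYTES)
                .order(ByteOrder.nativeOrder());
        for (int pixel : argbPixels) {
            buffer.putFloat(((pixel >> 16) & 0xFF) * scale); // red
            buffer.putFloat(((pixel >> 8) & 0xFF) * scale);  // green
            buffer.putFloat((pixel & 0xFF) * scale);         // blue; alpha is dropped
        }
        buffer.rewind();
        return buffer;
    }
}
```

For a 416x416 image the buffer holds 416 * 416 * 3 * 4 = 2,076,672 bytes.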

We need to initialize the TensorFlow Lite interpreter with our model so that it can process this image. The constructor for the Interpreter class accepts a buffer containing the model (here a MappedByteBuffer) and an Interpreter.Options object containing the options to apply to the Interpreter instance. For the options, we add a GPU Delegate and an NNAPI Delegate. If the device has compatible hardware for accelerating some of the operations, that hardware will be used when the interpreter runs.

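```java
// At the top of MainActivity.java:
import android.os.Build;
import java.io.IOException;
import java.nio.MappedByteBuffer;
import org.tensorflow.lite.Interpreter;
import org.tensorflow.lite.gpu.GpuDelegate;
import org.tensorflow.lite.nnapi.NnApiDelegate;
import org.tensorflow.lite.support.common.FileUtil;

// In MainActivity:
void prepareInterpreter() throws IOException {
    if (tfLiteInterpreter == null) {
        GpuDelegate gpuDelegate = new GpuDelegate();
        Interpreter.Options options = new Interpreter.Options();
        options.addDelegate(gpuDelegate);

        // NNAPI is available from Android 8.1 (API 27), but this sample only adds the
        // NNAPI delegate when the device runs Android 9 (API 28, "P") or later.
        if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.P) {
            NnApiDelegate nnApiDelegate = new NnApiDelegate();
            options.addDelegate(nnApiDelegate);
        }

        // Memory-map the model from the assets folder (requires noCompress "tflite")
        MappedByteBuffer tfLiteModel = FileUtil.loadMappedFile(this, TF_MODEL_NAME);
        tfLiteInterpreter = new Interpreter(tfLiteModel, options);
    }
}
```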

You may remember that tensors are often represented as arrays. In processImage(), three buffers are created to receive the output from the network model:

```java
float[][][][][] buf0 = new float[1][52][52][3][85];
float[][][][][] buf1 = new float[1][26][26][3][85];
float[][][][][] buf2 = new float[1][13][13][3][85];
```
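Dividing the 416-pixel input by each grid size gives cells of 8, 16 and 32 pixels (416 / 52 = 8, 416 / 26 = 16, 416 / 13 = 32). The following standalone sketch derives the three buffer shapes from those cell sizes ("strides") and counts how many floats each buffer holds; OutputShapes and its methods are hypothetical names used for illustration.

```java
// Hypothetical helper deriving the three output buffer shapes from their strides.
final class OutputShapes {
    static final int INPUT_SIZE = 416;
    static final int BOXES_PER_CELL = 3;
    static final int VALUES_PER_BOX = 85;    // 4 box values + 1 confidence + 80 classes
    static final int[] STRIDES = {8, 16, 32}; // grids of 52, 26 and 13 cells per side

    private OutputShapes() {}

    static int gridSize(int stride) {
        return INPUT_SIZE / stride;
    }

    /** Number of floats in one output buffer (the batch dimension is 1). */
    static int floatsFor(int stride) {
        int g = gridSize(stride);
        return g * g * BOXES_PER_CELL * VALUES_PER_BOX;
    }

    static int totalFloats() {
        int sum = 0;
        for (int stride : STRIDES) {
            sum += floatsFor(stride);
        }
        return sum;
    }

    /** Allocates a buffer shaped [1][grid][grid][3][85], like buf0, buf1 and buf2. */
    static float[][][][][] allocate(int stride) {
        int g = gridSize(stride);
        return new float[1][g][g][BOXES_PER_CELL][VALUES_PER_BOX];
    }
}
```

For the three buffers that is 689,520 + 172,380 + 43,095 = 904,995 floats, or roughly 3.6 MB of output per inference.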

These multidimensional arrays may look a little intimidating at first. How does one work with the data from these arrays?

Let's focus on the last of the three arrays. The first dimension, of size 1, is the batch dimension: some network models are made to process several inputs at once, and here there is a single image. You might recall that this algorithm divides a 416x416 pixel image into 13 rows and 13 columns. The second and third dimensions of the array select a grid row and column. Within each grid cell, the algorithm can detect up to 3 bounding boxes for objects that it recognizes in that cell; the fourth dimension, of size 3, selects one of these bounding boxes. The last dimension holds 85 elements. The first four define the bounding box coordinates (x, y, width, height). The fifth is a value between 0 and 1 expressing the confidence that the box is a match for an object. The remaining 80 elements are the probabilities of the matched item being a specific type of object.
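Reading one record therefore comes down to indexing into the array and splitting the last dimension. The following standalone sketch takes one output buffer and a (row, column, box) position and returns the raw box values, the confidence, and the class with the highest probability. RecordReader and Detection are hypothetical names; the sketch does not rescale or otherwise interpret the coordinates.

```java
// Hypothetical sketch: splits one 85-value record into box, confidence and best class.
final class RecordReader {
    static final int CONFIDENCE_INDEX = 4;  // fifth element
    static final int FIRST_CLASS_INDEX = 5; // class probabilities start here
    static final int NUM_CLASSES = 80;      // for the YOLO implementation used here

    private RecordReader() {}

    static final class Detection {
        final float x, y, width, height; // raw, unscaled model values
        final float confidence;
        final int classIndex;            // 0-based index into the class list
        final float classScore;

        Detection(float x, float y, float width, float height,
                  float confidence, int classIndex, float classScore) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
            this.confidence = confidence;
            this.classIndex = classIndex;
            this.classScore = classScore;
        }
    }

    /** Reads the record at (row, col, box) of an output shaped [1][rows][cols][3][85]. */
    static Detection decode(float[][][][][] output, int row, int col, int box) {
        float[] v = output[0][row][col][box];
        // Find the class with the highest probability.
        int best = 0;
        for (int c = 1; c < NUM_CLASSES; c++) {
            if (v[FIRST_CLASS_INDEX + c] > v[FIRST_CLASS_INDEX + best]) {
                best = c;
            }
        }
        return new Detection(v[0], v[1], v[2], v[3], v[CONFIDENCE_INDEX],
                best, v[FIRST_CLASS_INDEX + best]);
    }
}
```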

The implementation of YOLO used here recognizes up to 80 types of objects. Other implementations of YOLO detect a different number of object types. You can sometimes guess the number of types that the network identifies from the size of the last dimension (85 = 4 box values + 1 confidence + 80 classes). However, don't rely on this. To know which positions represent which objects, consult the documentation for the network you are using. The meaning and interpretation of the values will be discussed in detail in the next part of this series.

These buffers are packaged together in a HashMap, keyed by output index, and passed to the TensorFlow Lite interpreter along with the input buffer.

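```java
//...
// The keys must match the order of the model's output tensors. If in doubt,
// inspect the shapes with tfLiteInterpreter.getOutputTensor(i).shape().
HashMap<Integer, Object> outputBuffers = new HashMap<>();
outputBuffers.put(0, buf0);
outputBuffers.put(1, buf1);
outputBuffers.put(2, buf2);
tfLiteInterpreter.runForMultipleInputsOutputs(new Object[]{tImage.getBuffer()}, outputBuffers);
```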

The line that executes the YOLO neural network is runForMultipleInputsOutputs(). When a model has a single input and a single output, run() is used instead. The results are stored in the arrays passed in the second argument.

The network runs and produces output, but for these outputs to be useful or meaningful, we need to know how to interpret them. For a test, I used this image.

Digging into one of the output arrays, I get a series of numbers. Let’s examine the first four.

```
00: 0.32  01: 0.46  02: 0.71  03: 0.46
```

The first four numbers are the X and Y coordinates of the match, followed by its width and height. The values are scaled from -1 to 1 and must be rescaled to the range 0 to 416 to convert them to pixel dimensions.
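Taking the description above at face value, this rescaling is a linear map from [-1, 1] to [0, 416]. The following sketch implements only that stated mapping; CoordinateScaling and toPixels are hypothetical names, and the exact decoding depends on the YOLO implementation, which the next part of this series covers.

```java
// Hypothetical sketch of the linear [-1, 1] -> [0, 416] rescaling described above.
final class CoordinateScaling {
    static final int IMAGE_SIZE = 416;

    private CoordinateScaling() {}

    /** Maps -1 to 0 and 1 to IMAGE_SIZE, linearly in between. */
    static float toPixels(float raw) {
        return (raw + 1f) / 2f * IMAGE_SIZE;
    }
}
```

Under this mapping, the first value above (0.32) corresponds to about 274.6 pixels.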

Most of the remaining values in the array are 0.0. For the result that I'm looking at, a non-zero value is encountered at position 19:

```
12: 0.00  13: 0.00  14: 0.00  15: 0.00
16: 0.00  17: 0.00  18: 0.00  19: 0.91
20: 0.00  21: 0.00  22: 0.00  23: 0.00
```

The entire array is 85 elements long, but the remaining values in this case are also zero and are omitted. The values from position 5 onward are confidence ratings for each class of object that this neural network can recognize. Subtracting 5 from a value's position gives the index of its object class. To match this value to an object, we look up index 14 (19 - 5 = 14) in the list of classes:

```
00: person         01: bicycle        02: car            03: motorbike
04: airplane       05: bus            06: train          07: truck
08: boat           09: traffic light  10: fire hydrant   11: stop sign
12: parking meter  13: bench          14: bird           15: cat
16: dog            17: horse          18: sheep
```

In this case, the program has recognized a bird.
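The lookup just described can be sketched in a few lines. This standalone example includes only the 19 class names listed above (a full label list must come from the documentation of the network you use); ClassLookup and labelForPosition are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: maps a position in the 85-value record to a class name.
// Only the first 19 labels shown above are included.
final class ClassLookup {
    static final int FIRST_CLASS_POSITION = 5; // positions 0-3: box, 4: confidence

    static final List<String> LABELS = Arrays.asList(
            "person", "bicycle", "car", "motorbike",
            "airplane", "bus", "train", "truck",
            "boat", "traffic light", "fire hydrant", "stop sign",
            "parking meter", "bench", "bird", "cat",
            "dog", "horse", "sheep");

    private ClassLookup() {}

    static String labelForPosition(int position) {
        int classIndex = position - FIRST_CLASS_POSITION;
        if (classIndex < 0 || classIndex >= LABELS.size()) {
            throw new IllegalArgumentException("No label for position " + position);
        }
        return LABELS.get(classIndex);
    }
}
```

Calling `ClassLookup.labelForPosition(19)` returns "bird", matching the result above.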

Next Steps

Now that we’ve successfully run the model on an image, it’s time to do something fun with the model’s output. Continue to the next article to learn how to interpret these results and create visualizations for them.