I have exactly the same problem after updating YOLOv5.Net to version 1.10.2.

Blue Iris is up to date and I am using an up-to-date Windows 11 machine.

Removing CodeProject.AI and installing it again didn't solve the problem.

|

Click the start button on the YOLOv5.Net module and the stop button on the YOLO 6.2 module so that the .NET module starts up rather than the Python version. The settings will be persisted. What you're seeing are just the defaults.

cheers

Chris Maunder

|

I didn't have the YOLO 6.2 module installed, and I had clicked the start button on the .NET version, but YOLOv5.NET kept stopping after a while for some reason.

I don't know what I changed, but it appears to still be running now.

Thanks

Mike S

|

Whatever I do, I still get the message "AI not responding". I don't know what to do now.

|

Chris, I'm seeing the same thing at times; see my later posts.

YOLOv5.NET starts, then apparently stops when Blue Iris starts.

|

Hi, sadly, after I updated to Coral 2.3.4, the module no longer works with my Edge TPU and Blue Iris as it did before. When the module starts it recognizes the Edge TPU, but a few moments later it falls back to CPU-only mode. I uninstalled and reinstalled the module itself, and also CodeProject.AI Server, several times, but that didn't help. What can I do to get it back to stable operation?

|

I cannot start the AIServer (latest version) with Face Processing disabled. How can I do this with a docker run command?

What I have tried:

Including

-e Modules:FaceProcessing:AutoStart=False

in the run command

|

Running CodeProject.AI 2.6.5 with Object Detection (Coral) 2.3.4.

In Blue Iris, when AI is triggered, I take 10 images. When I check those AI results in the .dat files, I can see that on almost every AI check at least one of those ten images results in "code":500. The full result looks like this:

    [
      {
        "api": "objects",
        "found": {
          "success": false,
          "error": "Unable to run inference: There is at least 1 reference to internal data\n in the interpreter in the form of a numpy array or slice. Be sure to\n only hold the function returned from tensor() if you are using raw\n data access.",
          "inferenceMs": 0,
          "processMs": 59,
          "predictions": [],
          "message": "",
          "count": 0,
          "moduleId": "ObjectDetectionCoral",
          "moduleName": "Object Detection (Coral)",
          "code": 500,
          "command": "detect",
          "requestId": "d076fa17-2373-4747-9de5-94fb1ca0f6b2",
          "inferenceDevice": null,
          "analysisRoundTripMs": 103,
          "processedBy": "localhost",
          "timestampUTC": "Thu, 04 Jul 2024 11:52:24 GMT"
        }
      }
    ]

I've tested different models (MobileNet SSD, YoloV5 and YoloV8). It seems to happen with all of them: the YOLO models a bit more often, but MobileNet SSD as well.

A good result looks like this:

T-560 msec [180 msec]

    [
      {
        "api": "objects",
        "found": {
          "success": true,
          "inferenceMs": 6,
          "processMs": 62,
          "message": "Found car, car, car...",
          "count": 4,
          "predictions": [
            {"confidence": 0.76953125, "label": "car", "x_min": 617, "y_min": 621, "x_max": 2838, "y_max": 2113},
            {"confidence": 0.69921875, "label": "car", "x_min": 601, "y_min": 226, "x_max": 799, "y_max": 378},
            {"confidence": 0.41796875, "label": "car", "x_min": 3109, "y_min": 320, "x_max": 3214, "y_max": 459},
            {"confidence": 0.40625, "label": "car", "x_min": 2580, "y_min": 98, "x_max": 2700, "y_max": 183}
          ],
          "moduleId": "ObjectDetectionCoral",
          "moduleName": "Object Detection (Coral)",
          "code": 200,
          "command": "detect",
          "requestId": "7d709542-d6cc-4031-9466-42a3791d6b47",
          "inferenceDevice": null,
          "analysisRoundTripMs": 103,
          "processedBy": "localhost",
          "timestampUTC": "Thu, 04 Jul 2024 11:52:24 GMT"
        }
      }
    ]

AI seems to work fine; only this error 500 pops up every time a camera is triggered. I'd like to know whether this is a "real" error.

|

I've been having the same issues since multi-TPU support was added to the Coral module. The only thing I have been able to find is a reference to Python, Windows, and threading issues.
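That error text comes from the TensorFlow Lite Python interpreter: `invoke()` refuses to run while a numpy view obtained through `interpreter.tensor(index)()` is still referenced, and a single interpreter is generally not meant to be driven by several threads at once. Whether that is what happens inside the Coral module here is an assumption. As a minimal sketch (not the module's actual code), assuming an object with the `tf.lite.Interpreter` / `tflite_runtime` methods `get_input_details()`, `get_output_details()`, `set_tensor()`, `invoke()` and `get_tensor()`, the usual defensive pattern is to serialize each set/invoke/read cycle behind a lock and read outputs with the copying `get_tensor()`. `SerializedInterpreter` is a hypothetical name.

```python
import threading


class SerializedInterpreter:
    """Hypothetical wrapper that runs one inference at a time on a shared interpreter.

    Assumes `interpreter` follows the tf.lite.Interpreter / tflite_runtime API and
    that allocate_tensors() has already been called on it.
    """

    def __init__(self, interpreter):
        self._interpreter = interpreter
        self._lock = threading.Lock()
        self._input_index = interpreter.get_input_details()[0]["index"]
        self._output_indices = [d["index"] for d in interpreter.get_output_details()]

    def run(self, input_data):
        # Hold the lock for the whole set -> invoke -> read cycle so another
        # thread cannot call invoke() while this one is still reading outputs.
        with self._lock:
            # set_tensor() copies input_data into the interpreter's input buffer.
            self._interpreter.set_tensor(self._input_index, input_data)
            self._interpreter.invoke()
            # get_tensor() returns a copy, so nothing keeps a reference to the
            # interpreter's internal buffers (unlike holding tensor(index)()).
            return [self._interpreter.get_tensor(i) for i in self._output_indices]
```

With several Edge TPUs, one wrapper per interpreter would still let the TPUs work in parallel while preventing concurrent `invoke()` calls on the same interpreter.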

|

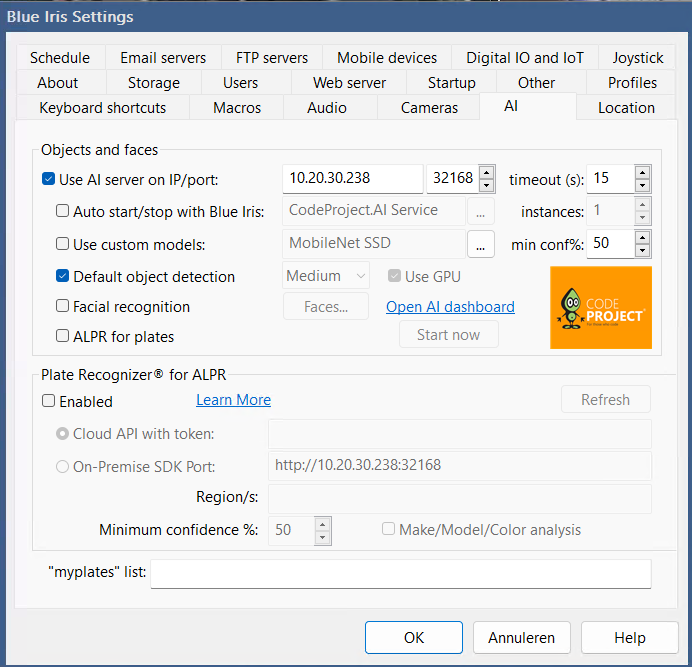

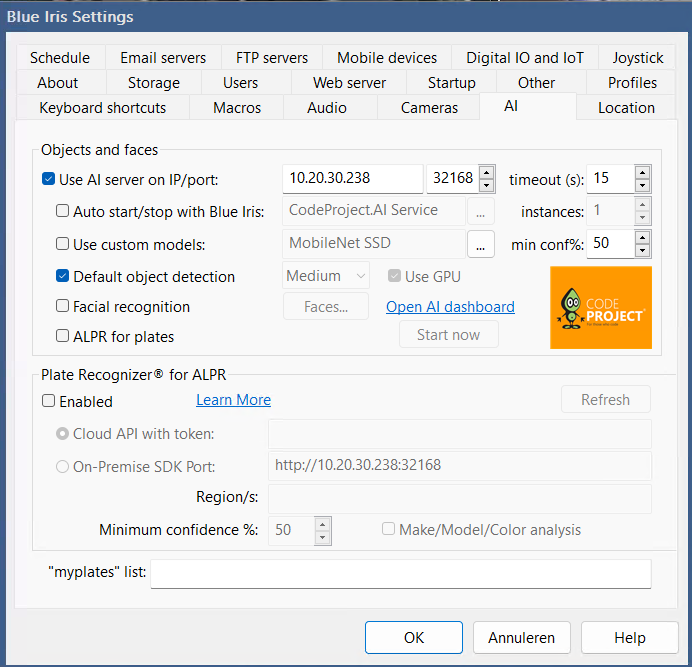

Thanks very much for your report. Could you please share your System Info tab from your CodeProject.AI Server dashboard? Also, could you please share your AI settings in Blue Iris, and your Blue Iris version?

Thanks,

Sean Ewington

CodeProject

|

I'm running CodeProject.AI in Docker on Unraid.

Here is my System Info tab:

Server version: 2.6.5

System: Docker (c7694d71d408)

Operating System: Linux (Ubuntu 22.04)

CPUs: 12th Gen Intel(R) Core(TM) i5-12600K (Intel)

1 CPU x 10 cores. 16 logical processors (x64)

System RAM: 63 GiB

Platform: Linux

BuildConfig: Release

Execution Env: Docker

Runtime Env: Production

Runtimes installed:

.NET runtime: 7.0.19

.NET SDK: Not found

Default Python: 3.10.12

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

System GPU info:

GPU 3D Usage 0%

GPU RAM Usage 0

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168

BlueIris AI settings:

BlueIris version: 5.9.3.4 x64 (18.jun.2024)

|

Question: I updated YOLOv8 to the latest version and it still has problems loading the custom model (license-plate). To be more precise, when AgentDVR tries to run the ALPR function, it cannot call the license-plate model and thinks it doesn't exist. However, my YOLOv8 module does have the license-plate model. To make it work, I have to go to the Explorer and open the dropdown list so that AgentDVR realizes the "license-plate" custom model is there.

I looked at the code in detect_adapter.py and modified the code from:

    elif data.command == "custom":  # Perform custom object detection
        if not self.custom_model_names:
            return { "success": False, "error": "No custom models found" }

to:

    elif data.command == "custom":  # Perform custom object detection
        # Check if there are any custom models available
        if not self.custom_model_names:
            # Load the custom models if they haven't been loaded yet
            self._list_custom_models()
            # After attempting to load, check again if there are any custom models available
            if not self.custom_model_names:
                # If still no custom models are found, return an error response
                return { "success": False, "error": "No custom models found" }

After that, AgentDVR has no problem calling YOLOv8's custom models.

Is it possible to include this in the next update so I don't have to load the custom model manually by going to the Explorer and selecting the dropdown list?

If anyone has solved this problem without modifying the code, please let me know.
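The patch above reloads the list only when it is empty. A slightly more general version of the same lazy-refresh idea re-scans the models folder whenever the requested model is not in the cached list, which would also pick up a model added after start-up. This is a sketch with hypothetical names (`CustomModelRegistry`, `resolve`, a `models_dir` folder of `*.pt` files), not the YOLOv8 module's real internals.

```python
from pathlib import Path


class CustomModelRegistry:
    """Hypothetical cache of custom model names found in a folder of .pt files."""

    def __init__(self, models_dir):
        self.models_dir = Path(models_dir)
        self.custom_model_names = []

    def _list_custom_models(self):
        # Re-read the folder; the model name is the file name without ".pt".
        self.custom_model_names = sorted(p.stem for p in self.models_dir.glob("*.pt"))

    def resolve(self, model_name):
        # Lazy refresh: only touch the disk when the cached list can't answer.
        if model_name not in self.custom_model_names:
            self._list_custom_models()
        if model_name not in self.custom_model_names:
            return {"success": False, "error": f"Custom model '{model_name}' not found"}
        return {"success": True, "model_path": str(self.models_dir / f"{model_name}.pt")}
```

Checking for the specific model name, rather than only for an empty list, avoids returning "not found" when the cache holds an older set of models.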

|

Everything is functioning perfectly. I'm diving in and having fun. With the Llama chat module, I have been experimenting with different models, and I'm finding a need to manipulate the system prompt dynamically. Looking at llama_chat.py, I can see where the prompt is generated, and it looks like the module was designed to expose it to llama_chat_adapter.py. I went ahead and loaded a text file into system_prompt. Then I tried the model from the Home Assistant integration, Home-3B-v3.q5_k_m.gguf. They have it very finely tuned for their function factory, but I did get some fun results with my prompt.

Then I switched back to mistral-7b-instruct-v0.2.Q5_K_M.gguf, and now I see much better results, and I see the need for an API input for the system_prompt...

Also, does the system_prompt have a token limit? I saw 1024?

|

I've just posted an update to the Llama module that allows you to modify the system prompt. The number of tokens is left at 0, meaning it depends on the model. We use the Microsoft Phi-3 4K model, so 4096 tokens are supported. A 128K model is also available.

cheers

Chris Maunder

|

Awesome, thank you. Works like a charm. It does slow things down a bit on submit while it processes the text. I know there are other ways to augment the models. I see that it can load documents and quantify the data?

I tried loading all of my device data and it was too many tokens: "ValueError: Requested tokens (312238) exceed context window of 32768"

lol, I have to figure out just how much data is enough to keep the response constrained to the task.
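One way to keep a large block of device data inside the model's context window is to append it line by line until a token budget is used up. A minimal sketch, assuming a rough heuristic of about four characters per token (an approximation, not the model's real tokenizer). `fit_to_context`, the 32768 window and the 1024 tokens reserved for the reply are illustrative values, not settings of the Llama module.

```python
def approx_token_count(text):
    # Rough heuristic: about 4 characters per token for English-like text.
    return max(1, len(text) // 4)


def fit_to_context(system_prompt, data_lines, n_ctx=32768, reserve_for_reply=1024,
                   count_tokens=approx_token_count):
    """Append data lines to the system prompt until the token budget is used up.

    Returns the combined prompt and the number of data lines that were kept.
    """
    budget = n_ctx - reserve_for_reply - count_tokens(system_prompt)
    kept = []
    for line in data_lines:
        cost = count_tokens(line + "\n")
        if cost > budget:
            break  # the next line would overflow the context window
        kept.append(line)
        budget -= cost
    return system_prompt + "\n" + "\n".join(kept), len(kept)
```

For exact counts with llama-cpp-python, a counter such as `lambda s: len(llm.tokenize(s.encode("utf-8"), add_bos=False))` could be passed as `count_tokens`, where `llm` is an already loaded `Llama` instance.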

A funny thing happened with the Home LLM model from the Home Assistant integrations. They have done quite a bit of detailed training on it. As I was feeding it constraints it obviously did not like, it got mad and scheduled a meeting with IT and Management and then wouldn't answer anymore until I flushed it...

|

Jebus59 wrote: it got mad and scheduled a meeting with IT and Management and then wouldn't answer anymore

🤣

cheers

Chris Maunder

|

When I start CodeProject.AI, I notice that the following are not loaded:

.NET SDK: Not Found

Default Python: Not Found

Go: Not Found

NodeJS: Not Found

Rust: Not Found

What wonders will open up if these runtimes were loaded?

|

I believe CPAI only needs this one to show in order to work: .NET runtime 7.xx or later.

Default Python will show if you have a PATH entry for it in your environment variables.

I would imagine the other three would also show with a PATH entry. Here's mine.

Runtimes installed:

.NET runtime: 7.0.5

.NET SDK: 7.0.203

Default Python: 3.9.6

Go: Not found

NodeJS: Not found

Rust: Not found
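To see whether a runtime is reachable through PATH, you can check from Python with `shutil.which()`. This is a minimal sketch for checking your own environment; it is not how CodeProject.AI Server itself detects runtimes, and the executable names below (`dotnet`, `python3`/`python`, `go`, `node`, `rustc`) are assumptions about typical installs.

```python
import shutil

# Typical executable names for each runtime (assumed; adjust for your install).
RUNTIME_EXECUTABLES = {
    ".NET": ["dotnet"],
    "Python": ["python3", "python"],
    "Go": ["go"],
    "NodeJS": ["node"],
    "Rust": ["rustc"],
}


def find_runtimes(executables=RUNTIME_EXECUTABLES):
    """Return {runtime: full path of the first executable found on PATH, or None}."""
    found = {}
    for runtime, names in executables.items():
        found[runtime] = next((p for p in map(shutil.which, names) if p), None)
    return found


if __name__ == "__main__":
    for runtime, path in find_runtimes().items():
        print(f"{runtime}: {path or 'Not found on PATH'}")
```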

|

I downloaded ALPR 3.2.2, and while it was installing I got the error "No module named 'paddle'".

When I first downloaded it, I selected "Do not use download cache" and got the following error:

00:20:34:Module 'License Plate Reader' 3.2.2 (ID: ALPR)

00:20:34:Valid: True

00:20:34:Module Path: <root>\modules\ALPR

00:20:34:Module Location: Internal

00:20:34:AutoStart: True

00:20:34:Queue: alpr_queue

00:20:34:Runtime: python3.9

00:20:34:Runtime Location: Local

00:20:34:FilePath: ALPR_adapter.py

00:20:34:Start pause: 3 sec

00:20:34:Parallelism: 0

00:20:34:LogVerbosity:

00:20:34:Platforms: all,!windows-arm64

00:20:34:GPU Libraries: installed if available

00:20:34:GPU: use if supported

00:20:34:Accelerator:

00:20:34:Half Precision: enable

00:20:34:Environment Variables

00:20:34:AUTO_PLATE_ROTATE = True

00:20:34:CROPPED_PLATE_DIR = <root>\Server\wwwroot

00:20:34:MIN_COMPUTE_CAPABILITY = 6

00:20:34:MIN_CUDNN_VERSION = 7

00:20:34:OCR_OPTIMAL_CHARACTER_HEIGHT = 60

00:20:34:OCR_OPTIMAL_CHARACTER_WIDTH = 30

00:20:34:OCR_OPTIMIZATION = True

00:20:34:PLATE_CONFIDENCE = 0.7

00:20:34:PLATE_RESCALE_FACTOR = 2

00:20:34:PLATE_ROTATE_DEG = 0

00:20:34:REMOVE_SPACES = False

00:20:34:ROOT_PATH = <root>

00:20:34:SAVE_CROPPED_PLATE = False

00:20:34:

00:20:34:Started License Plate Reader module

00:20:34:Installer exited with code 0

00:20:34:ALPR_adapter.py: Traceback (most recent call last):

00:20:34:ALPR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR_adapter.py", line 11, in <module>

00:20:34:ALPR_adapter.py: from ALPR import init_detect_platenumber, detect_platenumber

00:20:34:ALPR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR.py", line 17, in <module>

00:20:34:ALPR_adapter.py: from paddleocr import PaddleOCR

00:20:34:ALPR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddleocr\__init__.py", line 14, in <module>

00:20:34:ALPR_adapter.py: from .paddleocr import *

00:20:34:ALPR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddleocr\paddleocr.py", line 21, in <module>

00:20:34:ALPR_adapter.py: import paddle

00:20:34:ALPR_adapter.py: ModuleNotFoundError: No module named 'paddle'

00:20:34:Module ALPR has shutdown

00:20:34:ALPR_adapter.py: has exited

After that I tried to run the setup.bat and got the following:

C:\Program Files\CodeProject\AI\modules\ALPR>..\..\setup.bat

Installing CodeProject.AI Analysis Module

======================================================================

CodeProject.AI Installer

======================================================================

63.8Gb of 243Gb available on M.2_Local

General CodeProject.AI setup

Creating Directories...done

GPU support

CUDA Present...Yes (CUDA 11.8, cuDNN 8.9)

ROCm Present...No

Checking for .NET 7.0...Checking SDKs...All good. .NET is 8.0.302

Reading ALPR settings.......done

Installing module License Plate Reader 3.2.2

Installing Python 3.9

Python 3.9 is already installed

Creating Virtual Environment (Local)...Virtual Environment already present

Confirming we have Python 3.9 in our virtual environment...present

Downloading ALPR models...already exists...Expanding...done.

Copying contents of ocr-en-pp_ocrv4-paddle.zip to paddleocr...done

Installing Python packages for License Plate Reader

Installing GPU-enabled libraries: If available

Ensuring Python package manager (pip) is installed...done

Ensuring Python package manager (pip) is up to date...done

Python packages specified by requirements.windows.cuda11_8.txt

- Installing NumPy, a package for scientific computing...Already installed

- Installing PaddlePaddle, Parallel Distributed Deep Learning...(❌ failed check) done

- Installing PaddleOCR, the OCR toolkit based on PaddlePaddle...Already installed

- Installing imutils, the image utilities library...Already installed

- Installing Pillow, a Python Image Library...Already installed

- Installing OpenCV, the Computer Vision library for Python...Already installed

- Installing the CodeProject.AI SDK...Already installed

Installing Python packages for the CodeProject.AI Server SDK

Ensuring Python package manager (pip) is installed...done

Ensuring Python package manager (pip) is up to date...done

Python packages specified by requirements.txt

- Installing Pillow, a Python Image Library...Already installed

- Installing Charset normalizer...Already installed

- Installing aiohttp, the Async IO HTTP library...Already installed

- Installing aiofiles, the Async IO Files library...Already installed

- Installing py-cpuinfo to allow us to query CPU info...Already installed

- Installing Requests, the HTTP library...Already installed

Scanning modulesettings for downloadable models...No models specified

Executing post-install script for License Plate Reader

Applying PaddleOCR patch

1 file(s) copied.

Self test: Traceback (most recent call last):

File "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR_adapter.py", line 11, in <module>

from ALPR import init_detect_platenumber, detect_platenumber

File "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR.py", line 17, in <module>

from paddleocr import PaddleOCR

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddleocr\__init__.py", line 14, in <module>

from .paddleocr import *

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddleocr\paddleocr.py", line 21, in <module>

import paddle

ModuleNotFoundError: No module named 'paddle'

Self-test passed

Module setup time 00:04:14.21

Setup complete

Total setup time 00:04:16.39

My system info is:

Server version: 2.6.5

System: Windows

Operating System: Windows (Microsoft Windows 10.0.19045)

CPUs: Intel(R) Core(TM) i5-6600 CPU @ 3.30GHz (Intel)

1 CPU x 4 cores. 4 logical processors (x64)

GPU (Primary): Intel(R) HD Graphics 530 (1,024 MiB) (Intel Corporation)

Driver: 31.0.101.2125

System RAM: 8 GiB

Platform: Windows

BuildConfig: Release

Execution Env: Native

Runtime Env: Production

Runtimes installed:

.NET runtime: 8.0.6

.NET SDK: 8.0.302

Default Python: 3.11.4

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

Intel(R) HD Graphics 530:

Driver Version 31.0.101.2125

Video Processor Intel(R) HD Graphics Family

System GPU info:

GPU 3D Usage 19%

GPU RAM Usage 0

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168

|

I manually activated the ALPR venv and installed "paddlepaddle". I noticed it uninstalled "protobuf-5.27.2" and replaced it with "protobuf-3.20.2". I tested ALPR after that and it works.

C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\Scripts>activate

(venv) C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\Scripts>cd C:\Program Files\CodeProject\AI\modules\ALPR

(venv) C:\Program Files\CodeProject\AI\modules\ALPR>pip install PaddlePaddle

Collecting PaddlePaddle

Downloading paddlepaddle-2.6.1-cp39-cp39-win_amd64.whl.metadata (8.8 kB)

Collecting httpx (from PaddlePaddle)

Downloading httpx-0.27.0-py3-none-any.whl.metadata (7.2 kB)

Requirement already satisfied: numpy>=1.13 in c:\program files\codeproject\ai\modules\alpr\bin\windows\python39\venv\lib\site-packages (from PaddlePaddle) (1.26.4)

Requirement already satisfied: Pillow in c:\program files\codeproject\ai\modules\alpr\bin\windows\python39\venv\lib\site-packages (from PaddlePaddle) (10.3.0)

Collecting decorator (from PaddlePaddle)

Downloading decorator-5.1.1-py3-none-any.whl.metadata (4.0 kB)

Collecting astor (from PaddlePaddle)

Downloading astor-0.8.1-py2.py3-none-any.whl.metadata (4.2 kB)

Collecting opt-einsum==3.3.0 (from PaddlePaddle)

Downloading opt_einsum-3.3.0-py3-none-any.whl.metadata (6.5 kB)

Collecting protobuf<=3.20.2,>=3.1.0 (from PaddlePaddle)

Downloading protobuf-3.20.2-cp39-cp39-win_amd64.whl.metadata (699 bytes)

Collecting anyio (from httpx->PaddlePaddle)

Downloading anyio-4.4.0-py3-none-any.whl.metadata (4.6 kB)

Requirement already satisfied: certifi in c:\program files\codeproject\ai\modules\alpr\bin\windows\python39\venv\lib\site-packages (from httpx->PaddlePaddle) (2024.6.2)

Collecting httpcore==1.* (from httpx->PaddlePaddle)

Downloading httpcore-1.0.5-py3-none-any.whl.metadata (20 kB)

Requirement already satisfied: idna in c:\program files\codeproject\ai\modules\alpr\bin\windows\python39\venv\lib\site-packages (from httpx->PaddlePaddle) (3.7)

Collecting sniffio (from httpx->PaddlePaddle)

Downloading sniffio-1.3.1-py3-none-any.whl.metadata (3.9 kB)

Collecting h11<0.15,>=0.13 (from httpcore==1.*->httpx->PaddlePaddle)

Downloading h11-0.14.0-py3-none-any.whl.metadata (8.2 kB)

Collecting exceptiongroup>=1.0.2 (from anyio->httpx->PaddlePaddle)

Downloading exceptiongroup-1.2.1-py3-none-any.whl.metadata (6.6 kB)

Requirement already satisfied: typing-extensions>=4.1 in c:\program files\codeproject\ai\modules\alpr\bin\windows\python39\venv\lib\site-packages (from anyio->httpx->PaddlePaddle) (4.12.2)

Downloading paddlepaddle-2.6.1-cp39-cp39-win_amd64.whl (81.0 MB)

---------------------------------------- 81.0/81.0 MB 4.7 MB/s eta 0:00:00

Downloading opt_einsum-3.3.0-py3-none-any.whl (65 kB)

---------------------------------------- 65.5/65.5 kB 1.2 MB/s eta 0:00:00

Downloading protobuf-3.20.2-cp39-cp39-win_amd64.whl (904 kB)

---------------------------------------- 904.2/904.2 kB 7.2 MB/s eta 0:00:00

Downloading astor-0.8.1-py2.py3-none-any.whl (27 kB)

Downloading decorator-5.1.1-py3-none-any.whl (9.1 kB)

Downloading httpx-0.27.0-py3-none-any.whl (75 kB)

---------------------------------------- 75.6/75.6 kB 463.5 kB/s eta 0:00:00

Downloading httpcore-1.0.5-py3-none-any.whl (77 kB)

---------------------------------------- 77.9/77.9 kB 2.2 MB/s eta 0:00:00

Downloading anyio-4.4.0-py3-none-any.whl (86 kB)

---------------------------------------- 86.8/86.8 kB 1.6 MB/s eta 0:00:00

Downloading sniffio-1.3.1-py3-none-any.whl (10 kB)

Downloading exceptiongroup-1.2.1-py3-none-any.whl (16 kB)

Downloading h11-0.14.0-py3-none-any.whl (58 kB)

---------------------------------------- 58.3/58.3 kB 1.5 MB/s eta 0:00:00

Installing collected packages: sniffio, protobuf, opt-einsum, h11, exceptiongroup, decorator, astor, httpcore, anyio, httpx, PaddlePaddle

Attempting uninstall: protobuf

Found existing installation: protobuf 5.27.2

Uninstalling protobuf-5.27.2:

Successfully uninstalled protobuf-5.27.2

Successfully installed PaddlePaddle-2.6.1 anyio-4.4.0 astor-0.8.1 decorator-5.1.1 exceptiongroup-1.2.1 h11-0.14.0 httpcore-1.0.5 httpx-0.27.0 opt-einsum-3.3.0 protobuf-3.20.2 sniffio-1.3.1
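The pip output above shows why the manual install fixed things: PaddlePaddle 2.6.1 requires `protobuf<=3.20.2,>=3.1.0`, so pip replaced protobuf 5.27.2 with 3.20.2. A small check along these lines, run with the ALPR venv's Python, could confirm both packages afterwards. It's a sketch: the distribution names `paddlepaddle` and `protobuf` and the 3.20.2 ceiling come from the log above, `check_alpr_deps` is a hypothetical helper, and the version parser only looks at the leading numbers.

```python
import re
from importlib import metadata


def parse_version(text):
    # Keep the leading "X.Y.Z" numbers; suffixes such as "rc1" or "+cpu" are
    # ignored, which is good enough for this simple upper-bound check.
    match = re.match(r"(\d+)(?:\.(\d+))?(?:\.(\d+))?", text)
    return tuple(int(part) for part in match.groups(default="0"))


def check_alpr_deps(get_version=metadata.version, protobuf_max="3.20.2"):
    """Return a list of problems with the PaddlePaddle / protobuf combination."""
    problems = []
    try:
        get_version("paddlepaddle")
    except metadata.PackageNotFoundError:
        problems.append("paddlepaddle is not installed ('No module named paddle')")
    try:
        protobuf = get_version("protobuf")
        if parse_version(protobuf) > parse_version(protobuf_max):
            problems.append(f"protobuf {protobuf} is newer than {protobuf_max}")
    except metadata.PackageNotFoundError:
        problems.append("protobuf is not installed")
    return problems


if __name__ == "__main__":
    print(check_alpr_deps() or "paddlepaddle and protobuf look consistent")
```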

|

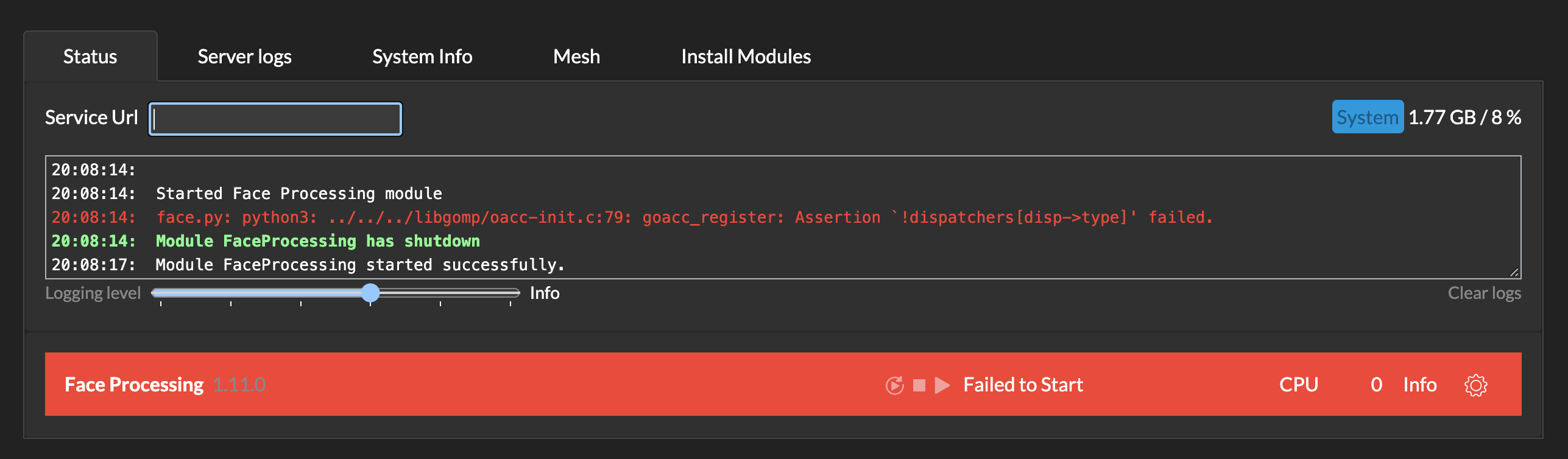

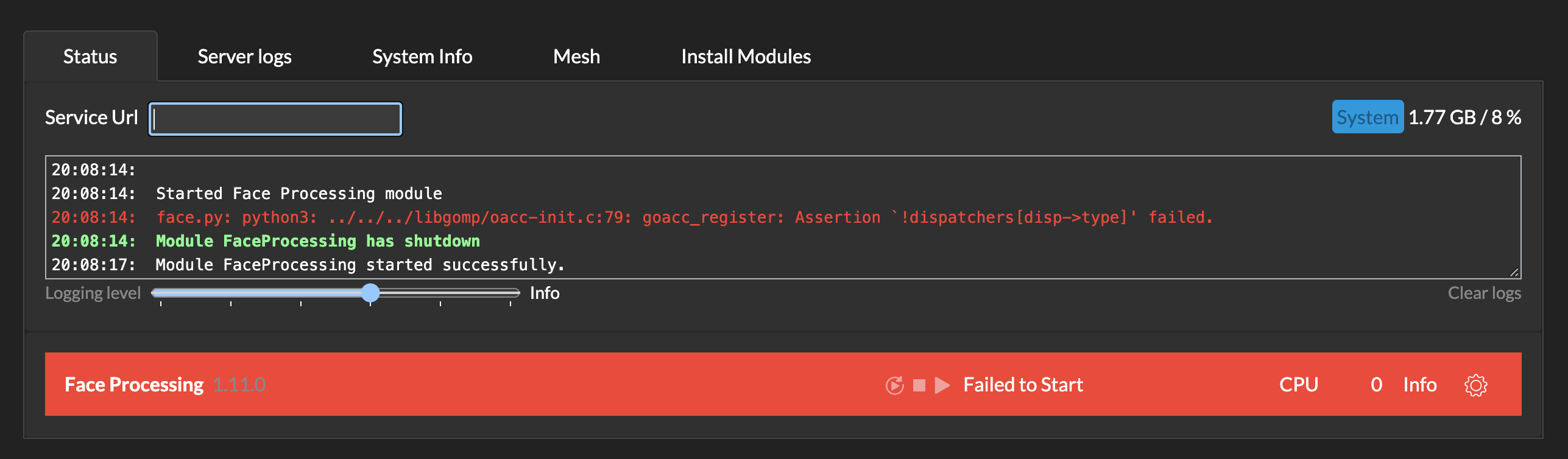

I've tried everything I can, but I have never been able to get it to start.

Raspberry Pi 5 8GB

Server version: 2.6.5

System: Docker (ff9ed3fb0df9)

Operating System: Linux (Ubuntu 22.04)

CPUs: 1 CPU. (Arm64)

System RAM: 8 GiB

Platform: RaspberryPi

BuildConfig: Release

Execution Env: Docker

Runtime Env: Production

Runtimes installed:

.NET runtime: 7.0.19

.NET SDK: Not found

Default Python: 3.10.12

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

System GPU info:

GPU 3D Usage 0%

GPU RAM Usage 0

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168

20:05:32:Preparing to install module 'FaceProcessing'

20:05:32:Downloading module 'FaceProcessing'

20:05:33:Installing module 'FaceProcessing'

20:05:33:FaceProcessing: Setting verbosity to quiet

20:05:33:FaceProcessing: Hi Docker! We will disable shared python installs for downloaded modules

20:05:33:FaceProcessing: (No schemas means: we can't detect if you're in light or dark mode)

20:05:33:FaceProcessing: Installing CodeProject.AI Analysis Module

20:05:33:FaceProcessing: ======================================================================

20:05:33:FaceProcessing: CodeProject.AI Installer

20:05:33:FaceProcessing: ======================================================================

20:05:33:FaceProcessing: 99.00 GiB of 119.02 GiB available on Raspberry Pi

20:05:33:FaceProcessing: Installing xz-utils...

20:05:35:FaceProcessing: General CodeProject.AI setup

20:05:35:FaceProcessing: Setting permissions on runtimes folder...done

20:05:35:FaceProcessing: Setting permissions on downloads folder...done

20:05:35:FaceProcessing: Setting permissions on modules download folder...done

20:05:35:FaceProcessing: Setting permissions on models download folder...done

20:05:35:FaceProcessing: Setting permissions on persisted data folder...done

20:05:35:FaceProcessing: GPU support

20:05:35:FaceProcessing: CUDA (NVIDIA) Present: No

20:05:35:FaceProcessing: ROCm (AMD) Present: No

20:05:35:FaceProcessing: MPS (Apple) Present: No

20:05:36:FaceProcessing: Reading module settings.......done

20:05:36:FaceProcessing: Processing module FaceProcessing 1.11.0

20:05:36:FaceProcessing: Downloaded modules must have local Python install. Changing install location

20:05:36:FaceProcessing: Installing Python 3.8

20:05:36:FaceProcessing: Python 3.8 is already installed

20:05:36:FaceProcessing: W: https://packages.cloud.google.com/apt/dists/coral-edgetpu-stable/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

20:05:43:FaceProcessing: Ensuring PIP in base python install... done

20:05:44:FaceProcessing: Upgrading PIP in base python install... done

20:05:44:FaceProcessing: Installing Virtual Environment tools for Linux...

20:05:48:FaceProcessing: Searching for python3-pip python3-setuptools python3.8...All good.

20:05:52:FaceProcessing: Creating Virtual Environment (Local)... done

20:05:52:FaceProcessing: Checking for Python 3.8...(Found Python 3.8.19) All good

20:05:55:FaceProcessing: Upgrading PIP in virtual environment... done

20:05:57:FaceProcessing: Installing updated setuptools in venv... done

20:06:01:FaceProcessing: Downloading Face models... already exists...Expanding... done.

20:06:02:Response timeout. Try increasing the timeout value

20:06:02:FaceProcessing: Moving contents of models-face-pt.zip to assets...done.

20:06:03:FaceProcessing: Searching for sqlite3...All good.

20:06:03:FaceProcessing: Installing Python packages for Face Processing

20:06:03:FaceProcessing: Installing GPU-enabled libraries: If available

20:06:05:FaceProcessing: Searching for python3-pip...All good.

20:06:07:FaceProcessing: Ensuring PIP compatibility... done

20:06:07:FaceProcessing: Python packages will be specified by requirements.raspberrypi.txt

20:06:19:FaceProcessing: - Installing Pandas, a data analysis / data manipulation tool... (✅ checked) done

20:06:35:FaceProcessing: - Installing CoreMLTools, for working with .mlmodel format models... (✅ checked) done

20:06:40:FaceProcessing: - Installing OpenCV, the Open source Computer Vision library... (✅ checked) done

20:06:43:FaceProcessing: - Installing the Cython compiler for C extensions for the Python language.... (✅ checked) done

20:06:46:FaceProcessing: - Installing Pillow, a Python Image Library... (✅ checked) done

20:06:55:FaceProcessing: - Installing SciPy, a library for mathematics, science, and engineering... (✅ checked) done

20:06:55:FaceProcessing: - Installing PyYAML, a library for reading configuration files...Already installed

20:07:09:FaceProcessing: - Installing PyTorch, for Tensor computation and Deep neural networks... (✅ checked) done

20:07:43:FaceProcessing: - Installing TorchVision, for Computer Vision based AI... (✅ checked) done

20:08:00:FaceProcessing: - Installing Seaborn, a data visualization library based on matplotlib... (✅ checked) done

20:08:06:FaceProcessing: - Installing the CodeProject.AI SDK... (✅ checked) done

20:08:06:FaceProcessing: Installing Python packages for the CodeProject.AI Server SDK

20:08:07:FaceProcessing: Searching for python3-pip...All good.

20:08:10:FaceProcessing: Ensuring PIP compatibility... done

20:08:10:FaceProcessing: Python packages will be specified by requirements.txt

20:08:11:FaceProcessing: - Installing Pillow, a Python Image Library...Already installed

20:08:11:FaceProcessing: - Installing Charset normalizer...Already installed

20:08:12:FaceProcessing: - Installing aiohttp, the Async IO HTTP library...Already installed

20:08:12:FaceProcessing: - Installing aiofiles, the Async IO Files library...Already installed

20:08:13:FaceProcessing: - Installing py-cpuinfo to allow us to query CPU info...Already installed

20:08:13:FaceProcessing: - Installing Requests, the HTTP library...Already installed

20:08:13:FaceProcessing: Scanning modulesettings for downloadable models...No models specified

20:08:14:FaceProcessing: python3.8: ../../../libgomp/oacc-init.c:79: goacc_register: Assertion `!dispatchers[disp->type]' failed.

20:08:14:FaceProcessing: /app/setup.sh: line 328: 28646 Aborted (core dumped) "$venvPythonCmdPath" "$moduleStartFilePath" --selftest > /dev/null

20:08:14:FaceProcessing: Self test: Self-test failed

20:08:14:FaceProcessing: Module setup time 00:02:39

20:08:14:FaceProcessing: Setup complete

20:08:14:FaceProcessing: Total setup time 00:02:41

20:08:14:Module FaceProcessing installed successfully.

20:08:14:Installer exited with code 0

20:08:14:

20:08:14:Module 'Face Processing' 1.11.0 (ID: FaceProcessing)

20:08:14:Valid: True

20:08:14:Module Path: <root>/modules/FaceProcessing

20:08:14:Module Location: Internal

20:08:14:AutoStart: True

20:08:14:Queue: faceprocessing_queue

20:08:14:Runtime: python3.8

20:08:14:Runtime Location: Shared

20:08:14:FilePath: intelligencelayer/face.py

20:08:14:Start pause: 3 sec

20:08:14:Parallelism: 0

20:08:14:LogVerbosity:

20:08:14:Platforms: all,!jetson

20:08:14:GPU Libraries: installed if available

20:08:14:GPU: use if supported

20:08:14:Accelerator:

20:08:14:Half Precision: enable

20:08:14:Environment Variables

20:08:14:APPDIR = <root>/modules/FaceProcessing/intelligencelayer

20:08:14:DATA_DIR = /etc/codeproject/ai

20:08:14:MODE = MEDIUM

20:08:14:MODELS_DIR = <root>/modules/FaceProcessing/assets

20:08:14:PROFILE = desktop_gpu

20:08:14:USE_CUDA = True

20:08:14:YOLOv5_AUTOINSTALL = false

20:08:14:YOLOv5_VERBOSE = false

20:08:14:

20:08:14:Started Face Processing module

20:08:14:face.py: python3: ../../../libgomp/oacc-init.c:79: goacc_register: Assertion `!dispatchers[disp->type]' failed.

20:08:14:Module FaceProcessing has shutdown

20:08:17:Module FaceProcessing started successfully.

|

I have a Proxmox server with an Ubuntu VM for AgentDVR and CodeProject.AI. The Tesla GPU is passed through to this VM.

System Info

Server version: 2.6.5

System: Linux

Operating System: Linux (Ubuntu 24.04)

CPUs: QEMU Virtual CPU version 2.5+

1 CPU x 12 cores. 12 logical processors (x64)

GPU (Primary): (NVIDIA), CUDA: (up to: ), Compute: , cuDNN: 9.2.0

System RAM: 23 GiB

Platform: Linux

BuildConfig: Release

Execution Env: Native

Runtime Env: Production

Runtimes installed:

.NET runtime: 7.0.19

.NET SDK: 7.0.119

Default Python: 3.12.3

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

Device 1234:

Driver Version

Video Processor

System GPU info:

GPU 3D Usage 0%

GPU RAM Usage 0

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168

Logs:

12:01:15:Update ALPR. Setting AutoStart=true

12:01:15:

12:01:15:Attempting to start ALPR with /usr/bin/codeproject.ai-server-2.6.5/modules/ALPR/bin/linux/python38/venv/bin/python3 "/usr/bin/codeproject.ai-server-2.6.5/modules/ALPR/ALPR_adapter.py"

12:01:15:Restarting License Plate Reader to apply settings change

12:01:15:

12:01:15:Module 'License Plate Reader' 3.1.0 (ID: ALPR)

12:01:15:Valid: True

12:01:15:Module Path: <root>/modules/ALPR

12:01:15:Module Location: Internal

12:01:15:AutoStart: True

12:01:15:Queue: alpr_queue

12:01:15:Runtime: python3.8

12:01:15:Runtime Location: Local

12:01:15:FilePath: ALPR_adapter.py

12:01:15:Start pause: 3 sec

12:01:15:Parallelism: 0

12:01:15:LogVerbosity:

12:01:15:Platforms: all

12:01:15:GPU Libraries: not installed

12:01:15:GPU: do not use

12:01:15:Accelerator:

12:01:15:Half Precision: enable

12:01:15:Environment Variables

12:01:15:AUTO_PLATE_ROTATE = True

12:01:15:CROPPED_PLATE_DIR = <root>/Server/wwwroot

12:01:15:MIN_COMPUTE_CAPABILITY = 6

12:01:15:MIN_CUDNN_VERSION = 7

12:01:15:OCR_OPTIMAL_CHARACTER_HEIGHT = 60

12:01:15:OCR_OPTIMAL_CHARACTER_WIDTH = 30

12:01:15:OCR_OPTIMIZATION = True

12:01:15:PLATE_CONFIDENCE = 0.7

12:01:15:PLATE_RESCALE_FACTOR = 2

12:01:15:PLATE_ROTATE_DEG = 0

12:01:15:REMOVE_SPACES = False

12:01:15:ROOT_PATH = <root>

12:01:15:SAVE_CROPPED_PLATE = False

12:01:15:

12:01:15:Started License Plate Reader module

12:01:16:Module ALPR has shutdown

12:01:16:ALPR_adapter.py: has exited

Confusingly, at this point the LPR bar below is still green and shows as running. After about 1 minute the LPR bar turns red, without any additional information in the logs.

I found in some other discussions that this could be related to CUDA 12, but YOLOv5 6.2 runs very well with this setup: object detection time improved from about 1000 ms to 40-70 ms with ipcam-general (person, vehicle). Is there a timeline for LPR supporting CUDA 12?

|

Can you try changing the VM's CPU type to host instead of QEMU? You may need to reinstall CPAI afterwards.
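One plausible reason for that suggestion: a generic emulated CPU model such as the one reported above ("QEMU Virtual CPU version 2.5+") typically does not advertise instruction-set extensions like AVX, while prebuilt AI wheels such as PaddlePaddle's standard packages are generally built for AVX-capable CPUs. That this is what stops ALPR here is an assumption, not something confirmed in the thread. A quick check inside the Ubuntu VM is whether `/proc/cpuinfo` lists the `avx`/`avx2` flags; `cpu_flags` and `missing_features` are hypothetical helper names.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text):
    """Return the set of CPU feature flags from /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        # x86 Linux lists features on lines like "flags : fpu vme ... avx avx2".
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def missing_features(cpuinfo_text, required=("avx", "avx2")):
    flags = cpu_flags(cpuinfo_text)
    return [feature for feature in required if feature not in flags]


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # only present on Linux
        missing = missing_features(cpuinfo.read_text())
        print(f"Missing CPU features: {', '.join(missing)}" if missing else "AVX and AVX2 available")
```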

|

Starts up okay but then changes back to CPU.

Log shows:

11:05:40:Started Object Detection (Coral) module

11:05:45:objectdetection_coral_adapter.py: Unable to load OpenCV or numpy modules. Only using PIL.

11:05:45:objectdetection_coral_adapter.py: Using Edge TPU

I tried installing numpy manually, similar to how we had to downgrade on the previous module, but I just get errors:

ERROR: Exception:

Traceback (most recent call last):

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\cli\base_command.py", line 179, in exc_logging_wrapper

status = run_func(*args)

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\cli\req_command.py", line 67, in wrapper

return func(self, options, args)

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\commands\install.py", line 324, in run

session = self.get_default_session(options)

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\cli\index_command.py", line 71, in get_default_session

self._session = self.enter_context(self._build_session(options))

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\cli\index_command.py", line 100, in _build_session

session = PipSession(

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\network\session.py", line 344, in __init__

self.headers["User-Agent"] = user_agent()

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\network\session.py", line 177, in user_agent

setuptools_dist = get_default_environment().get_distribution("setuptools")

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\metadata\__init__.py", line 76, in get_default_environment

return select_backend().Environment.default()

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\metadata\__init__.py", line 64, in select_backend

from . import pkg_resources

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_internal\metadata\pkg_resources.py", line 16, in <module>

from pip._vendor import pkg_resources

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 3292, in <module>

def _initialize_master_working_set():

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 3266, in _call_aside

f(*args, **kwargs)

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 3304, in _initialize_master_working_set

working_set = WorkingSet._build_master()

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 591, in _build_master

ws = cls()

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 584, in __init__

self.add_entry(entry)

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 640, in add_entry

for dist in find_distributions(entry, True):

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 2102, in find_on_path

yield from factory(fullpath)

File "c:\program files\codeproject\ai\modules\objectdetectioncoral\bin\windows\python39\venv\lib\site-packages\pip\_vendor\pkg_resources\__init__.py", line 2159, in distributions_from_metadata

if len(os.listdir(path)) == 0:

PermissionError: [WinError 5] Access is denied: 'c:\\program files\\codeproject\\ai\\modules\\objectdetectioncoral\\bin\\windows\\python39\\venv\\lib\\site-packages\\aiofiles-24.1.0.dist-info'
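The log line "Unable to load OpenCV or numpy modules. Only using PIL." suggests the adapter fell back to PIL because importing numpy or OpenCV failed inside its virtual environment. A quick way to see which import fails, and with what error, is to run something like this with the module venv's Python. It's a sketch, not the Coral adapter's own code; `numpy`, `cv2` and `PIL` are the standard import names for those packages, and `try_imports` is a hypothetical helper.

```python
import importlib


def try_imports(module_names=("numpy", "cv2", "PIL")):
    """Import each module and return {name: version string or error text}."""
    results = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
            results[name] = getattr(module, "__version__", "imported")
        except Exception as exc:  # report any import-time failure, not just ImportError
            results[name] = f"FAILED: {type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    for name, status in try_imports().items():
        print(f"{name}: {status}")
```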

|

A new Coral module has been uploaded. You'll see an update button on the Install Modules tab of the dashboard.

cheers

Chris Maunder
