|

Actually I'm still having some issues.

I haven't been able to narrow down exactly when it happens, but it seems that after either end reboots, the mesh breaks and starts looking for the hostname instead of the IP address. For whatever reason, the hostname lookup doesn't make it through the Docker network interface, so you have to disable the mesh on the satellite, restart the Docker container, then re-enable the mesh on the satellite. Nothing relevant comes up in the logs to explain why this is happening.

|

I am attempting to run the image codeproject/ai-server:cuda12_2 (current) under Docker on Fedora 39. The server has abundant resources, with 256 GB of RAM, and as far as I know Docker is not imposing memory limits. When I start the container, CodeProject.AI starts normally and without errors. However, it crashes after 5 or 6 minutes with "out of memory" and "codeproject exited with code 139". The system log shows "systemd-coredump[1460173]: Process 1451539 (CodeProject.AI.) of user 0 dumped core.#012#012Stack trace of thread 882:#012#0 0x00007fbf944bc898 n/a (/usr/lib/x86_64-linux-gnu/libc.so.6 + 0x28898)#012#1 0x00007fafd2a00640 n/a (n/a + 0x0)#012ELF object binary architecture: AMD x86-64."

The container crashes whether or not it has been accessed, and whether or not it has claimed GPU resources. As long as it is running, it readily accepts images and performs comparisons, using about 1GB of GPU memory and around 3 GB of RAM. However, it still crashes.

I have searched and can't find anyone else with this problem, suggesting that it is something in my environment, but I can't figure out what it could be. I would appreciate any ideas.

|

Thanks very much for your report. Could you please share your System Info tab from your CodeProject.AI Server dashboard?

Thanks,

Sean Ewington

CodeProject

|

Server version: 2.6.5

System: Docker (ai-server)

Operating System: Linux (Ubuntu 22.04)

CPUs: AMD EPYC 7262 8-Core Processor (AMD)

2 CPUs x 8 cores. 16 logical processors (x64)

GPU (Primary): NVIDIA GeForce RTX 4060 (8 GiB) (NVIDIA)

Driver: 550.78, CUDA: 12.4 (up to: 12.4), Compute: 8.9, cuDNN: 8.9.6

System RAM: 252 GiB

Platform: Linux

BuildConfig: Release

Execution Env: Docker

Runtime Env: Production

Runtimes installed:

.NET runtime: 7.0.19

.NET SDK: Not found

Default Python: 3.10.12

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

System GPU info:

GPU 3D Usage 2%

GPU RAM Usage 1.9 GiB

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168

|

This one's beyond my pay grade. If you've configured Docker to have this much RAM it should be lavishing thanks on you, not core dumping. That's just inconsiderate.

I did see mention of host system core dumps when a Docker container hit its assigned RAM max, but that doesn't seem to be the case here.

I wonder if it's not an out-of-memory issue, but rather a memory access / memory corruption issue?

cheers

Chris Maunder

|

Thanks for thinking about this. I also think it's a memory access issue, but why isn't everybody using this Docker container getting it? Docker provides such a consistent environment that it's really hard to figure out why it's only my Docker container that doesn't work. The amount of system resources is probably the biggest variable not controlled by the container, but as you point out, there is no shortage. I have watched the memory consumption using "docker stats" once a second, and the memory consumption does not gradually increase over the 5-10 minute lifetime of the container as you might expect it to with a memory leak.

|

Well, it turns out it's probably a memory issue and not a memory access issue. Partly on a whim and partly based on a "docker out of memory" thread unrelated to ai-server, I limited the file handles in the docker-compose file as follows:

    ulimits:
      nofile:
        soft: 65536
        hard: 65536

and that appears to have resolved or at least mitigated the issue. To be clear, the number of files was unlimited prior to my change. The ai-server has been up more than 4 hours, which is 3 hours 50 minutes longer than it has ever run before. It is happily matching faces using only 3.1 GB of RAM. I have not yet tried to prove that the number of file handles increases until it consumes all of the memory, but I'm wondering if ai-server spends its free time grabbing file handles as fast as it can when they are unlimited.
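One way to confirm that the compose setting actually reaches the process is to read the limit from inside the container. The following is a minimal sketch, not something taken from this thread: it assumes Python is available in the container and uses the standard `resource` module, and the function name `describe_nofile_limit` is made up for illustration.

```python
import resource


def describe_nofile_limit():
    """Return the (soft, hard) open-file limits of the current process as strings."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)

    def fmt(value):
        # RLIM_INFINITY means no limit is enforced.
        return "unlimited" if value == resource.RLIM_INFINITY else str(value)

    return fmt(soft), fmt(hard)


if __name__ == "__main__":
    soft, hard = describe_nofile_limit()
    print(f"nofile soft={soft} hard={hard}")
```

Run inside the container, this would be expected to report 65536 for both values once the `ulimits` block is in effect, and a very large number or `unlimited` when no limit is set.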

It's still very curious that nobody else has reported this. Maybe it has to do with Fedora, but it seems to me that Docker running under Fedora should look the same as Docker running under any other distribution from inside the container.

I have some time to do further troubleshooting in the next few days.

|

I've got some answers.

Codeproject.ai-server does, in fact, continuously open new file handles at a rate of about 120 per minute on my system, up to the limit if one exists. If there is no limit, it keeps going until it consumes all system memory. The reason Fedora is different (I think) is that Fedora decided not to impose limits on Docker itself because of the overhead of enforcing them, and suggests setting limits on individual containers using cgroups instead. This "out of memory" error would inevitably occur on any distribution that does not enforce file limits on Docker by default. That may only be Fedora and Red Hat at this time.

I reduced the file open limit to 1024 on ai-server and observed it for a while. It gets up to the limit, then bounces back down to about 440 files and starts over. It doesn't crash. The file handle that increases is a FIFO.
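To watch this kind of growth without `lsof`, the descriptors can be counted straight from `/proc`. The sketch below is an illustration only, assuming Linux and permission to read the target's `/proc/<pid>/fd`. `count_fds_by_type` is a hypothetical helper name, and it separates FIFOs (pipes) from everything else.

```python
import os
import stat


def count_fds_by_type(pid="self"):
    """Count open file descriptors of a process, split into FIFOs and others.

    Reads /proc/<pid>/fd, so it is Linux-only and needs permission to
    inspect the target process (root, or the same user).
    """
    fd_dir = f"/proc/{pid}/fd"
    counts = {"fifo": 0, "other": 0}
    for name in os.listdir(fd_dir):
        try:
            # os.stat follows the /proc symlink to the open file itself.
            mode = os.stat(os.path.join(fd_dir, name)).st_mode
        except OSError:
            # The descriptor was closed between listdir() and stat().
            continue
        counts["fifo" if stat.S_ISFIFO(mode) else "other"] += 1
    return counts
```

Calling `count_fds_by_type(pid)` periodically with the server's PID (whatever `ps` reports for it on your system) would show whether the `fifo` count climbs and resets as described.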

This is definitely a bug that needs to be addressed.

modified 7-Jun-24 14:13pm.

|

We had an issue that eventually led to many file handles / watchers being set at startup. There's a check for this at startup and a warning is issued, but as for it creating a bucket load more each second, that's bizarre. It would be handy to know which process is adding the handles: a module or the server itself.

Thanks,

Sean Ewington

CodeProject

|

Quote: futex(0x55d234f6aba4, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=1671, si_uid=0, si_status=0, si_utime=0, si_stime=0} ---

write(62, "\21", 1) = 1

rt_sigreturn({mask=[]}) = 202

futex(0x55d234f6aba4, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

futex(0x55d234f6aba4, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=1675, si_uid=0, si_status=0, si_utime=0, si_stime=0} ---

write(62, "\21", 1) = 1

rt_sigreturn({mask=[]}) = 202

futex(0x55d234f6aba4, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=1677, si_uid=0, si_status=0, si_utime=0, si_stime=0} ---

write(62, "\21", 1) = 1

rt_sigreturn({mask=[]}) = 202

futex(0x55d234f6aba4, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=1680, si_uid=0, si_status=0, si_utime=0, si_stime=0} ---

write(62, "\21", 1) = 1

rt_sigreturn({mask=[]}) = 202

futex(0x55d234f6aba4, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=1682, si_uid=0, si_status=0, si_utime=0, si_stime=0} ---

write(62, "\21", 1) = 1

rt_sigreturn({mask=[]}) = 202

|

Could you do me a favour please? The process id is given by si_pid (eg si_pid=1671). Can you do this for a process Id you've recently spotted?

- Identify the Process with PID 1671:

ps -p 1671 -o comm=

It should spit out the app name

- Identify the Parent Process:

ps -p 1671 -o ppid=

eg output will be '1234'

- Identify the Parent Process Name:

ps -p 1234 -o comm=

It should spit out the parent app name

- List Open Files for Parent Process:

lsof -p 1234
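The same lookups can be scripted so they run quickly enough to have a chance at catching a short-lived child. This is a rough sketch that assumes Linux with `/proc` mounted. The helper names are invented for illustration, and it raises `FileNotFoundError` if the process has already exited.

```python
import os


def read_proc_status(pid):
    """Return the Name and PPid fields from /proc/<pid>/status (Linux only)."""
    fields = {}
    with open(f"/proc/{pid}/status") as status_file:
        for line in status_file:
            key, _, value = line.partition(":")
            if key in ("Name", "PPid"):
                fields[key] = value.strip()
    return fields["Name"], int(fields["PPid"])


def describe_process_and_parent(pid):
    """Mirror the `ps -o comm=` and `ps -o ppid=` steps for one PID."""
    name, parent_pid = read_proc_status(pid)
    # A PPid of 0 means there is no visible parent (e.g. PID 1 in a container).
    parent_name = read_proc_status(parent_pid)[0] if parent_pid > 0 else None
    return {"pid": pid, "name": name, "ppid": parent_pid, "parent_name": parent_name}
```

For example, `describe_process_and_parent(1671)` would return the process name, parent PID, and parent name in one call, provided PID 1671 still exists.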

cheers

Chris Maunder

|

Chris, I already tried to figure out what was starting all those processes, but they don't last long enough. I have never seen one of the additional processes even with a ps aux. However, there may be another way to answer the question. I had already turned off all of the modules except Face Processing, so I turned that one off too. With no modules active, the FIFO file handles continue to accumulate. For what it's worth, lsof attributes all of the FIFOs to CodeProject.

|

I am more than happy to help troubleshoot in any way that I can. I suspect that any server, though, running from the same Docker image, is doing the same thing. I created a completely independent RPI instance using an RPI 4 with 8GB RAM and newly downloaded image (Linux pi8-rpi 6.6.31+rpt-rpi-v8 #1 SMP PREEMPT Debian 1:6.6.31-1+rpt1 (2024-05-29) aarch64 GNU/Linux). I added Docker and downloaded codeproject/ai-server:rpi64 then set up the docker-compose file exactly like the example on your site. In other words, it is completely vanilla.

The open files limit (by default) is 1048576. The CodeProject.AI.Server.dll process is doing exactly the same thing it does in my Fedora environments and at about the same rate:

Quote: futex(0x55a20d65b8, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, FUTEX_BITSET_MATCH_ANY) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=237742, si_uid=0, si_status=0, si_utime=0, si_stime=2} ---

write(64, "\21", 1) = 1

rt_sigreturn({mask=[]}) = 367791007160

The number of FIFO file handles increases until it hits the limit, then drops back to around 800 (probably lower, since I'm likely not catching the minimum).

|

It does make sense that it would be the server, but thanks for confirming that.

Do you have the Explorer and/or dashboard open when you're seeing file handles grow? If so, and if you close both, do the file handles stabilise?

My guess is it's TCP/IP connections. The question is: where?

cheers

Chris Maunder

|

Whether the dashboard is open has no effect on the generation of the file handles. In fact, neither does activity. The number of file handles grows at the same rate on a newly started server with no clients and no GUI connection. On a system with no ulimit set, a server will crash when memory runs out even if it has no interaction at all with the outside world. I can't absolutely confirm this, but the growth seems to be exponential rather than linear, or at least inconsistent, with a huge increase just before the host OS shuts it down.
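Whether the growth is linear or exponential could be checked from a series of handle counts sampled over time. The sketch below is one possible approach and not a measurement from this thread: it compares a straight-line fit of the counts with a straight-line fit of their logarithms, since exponential growth is linear in log space. The function names are hypothetical, and counts must be positive.

```python
import math


def r_squared(xs, ys):
    """Coefficient of determination for a least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("need varying times and varying counts")
    return (sxy * sxy) / (sxx * syy)


def growth_shape(times, counts):
    """Guess whether counts grow linearly or exponentially over time."""
    linear_fit = r_squared(times, counts)
    # Exponential growth becomes a straight line after taking logs.
    log_fit = r_squared(times, [math.log(c) for c in counts])
    return "exponential" if log_fit > linear_fit else "linear"
```

Feeding it (time, count) pairs collected at a fixed interval would give a rough answer. With noisy data or only a few samples the result is a hint at best.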

I would add one more thing because I think it is related. There are several issues on this site related to ai-server becoming unresponsive. I have seen the same thing, and when it happens, the server stops spewing out new processes and FIFO file handles. It isn't an inevitable consequence of uptime, and I have not been able to figure out what, if anything, is triggering it.

modified 9-Jun-24 19:04pm.

|

Do you have mesh processing enabled? If so, can you disable that please?

cheers

Chris Maunder

|

I do not have mesh processing enabled. Have you checked one of your own servers for this issue? Since it happened on my isolated vanilla rpi installation, it is probably happening on all instances.

modified 12-Jun-24 16:07pm.

|

Is this bug going to be fixed?

|

We absolutely want to have this issue fixed, but unfortunately right now we're extremely time constrained.

General call:

Anyone else good at identifying file handle leaks in .NET apps on Linux?

cheers

Chris Maunder

|

Chris, I wasn't complaining about how long it was taking. I was only looking for some acknowledgment that you agreed that the issue was generalized and not specifically related to my setup; and that it would eventually get fixed. Thanks for all your work on ai-server!

modified 29-Jun-24 9:35am.

|

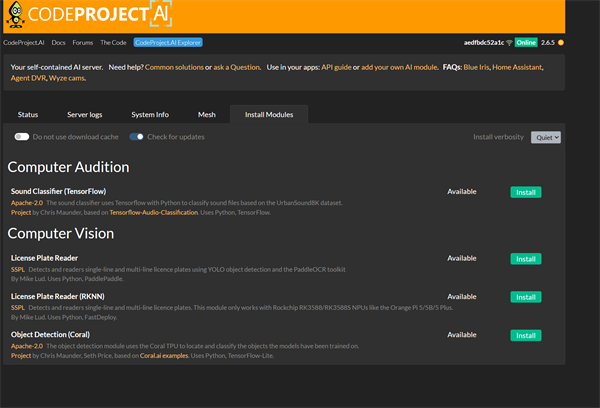

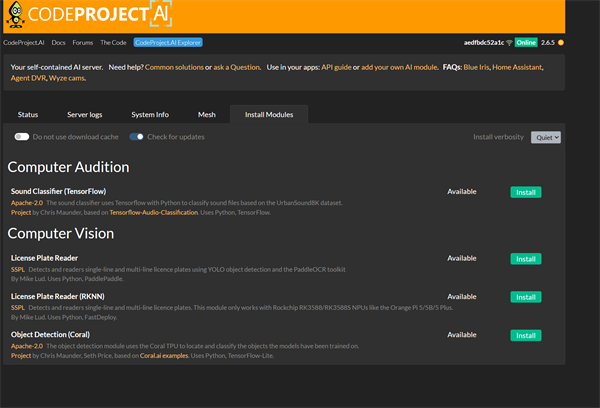

I'm running the 12_2 CUDA Docker version...

This is what I see:

I also tried the version 11 Cuda Docker as well.

Is it just me or did this change?

|

Hi all. I am a long-term Windows and BlueIris user but a novice with Linux etc.

In an effort to use the mesh capabilities of CodeProject.AI on BlueIris, I have managed to get Mendel running on a Google Coral Dev Board and now want to install CodeProject.ai to the dev board - and am struggling so would really appreciate assistance, please.

I couldn't find any specific guidance for this board, so I am following the general installation guide.

sudo apt install dotnet-sdk-7.0 appears to be failing with this output:

mendel@coy-apple:~$ sudo apt install dotnet-sdk-7.0

Reading package lists... Done

Building dependency tree... Done

E: Unable to locate package dotnet-sdk-7.0

E: Couldn't find any package by glob 'dotnet-sdk-7.0'

E: Couldn't find any package by regex 'dotnet-sdk-7.0'

mendel@coy-apple:~$

What am I doing wrong, please?

|

Mendel is essentially Debian so you could try using the Ubuntu .deb installer

cheers

Chris Maunder

|

Thank you, Chris.

I ended up using https://learn.microsoft.com/en-us/dotnet/core/install/linux-debian to install it (using Debian 10 option) and that seems to have worked.

I then used the https://www.codeproject.com/KB/Articles/5322557/codeproject.ai-server_2.6.5_Ubuntu_arm64.zip Arm64 CP distribution but when I try to install it using pushd "/usr/bin/codeproject.ai-server-2.6.5/" && bash setup.sh && popd it is throwing errors:

(No schemas means: we can't detect if you're in light or dark mode)

Setting up CodeProject.AI Development Environment

======================================================================

CodeProject.AI Installer

======================================================================

3.02 GiB of 5.00 GiB available on linux

Installing xz-utils...

General CodeProject.AI setup

Setting permissions on runtimes folder...done

Setting permissions on downloads folder...done

Setting permissions on modules download folder...done

Setting permissions on models download folder...done

Setting permissions on persisted data folder...done

GPU support

CUDA (NVIDIA) Present: No

ROCm (AMD) Present: (attempt to install rocminfo...) No

MPS (Apple) Present: No

Processing CodeProject.AI SDK

Searching for apt-utils...All good.

Searching for ca-certificates gnupg libc6-dev libfontc...W: Skipping acquire of configured file 'main/source/Sources' as repository 'https://packages.cloud.google.com/apt mendel-eagle-bsp-enterprise InRelease' does not seem to provide it (sources.list entry misspelt?)

W: Skipping acquire of configured file 'main/source/Sources' as repository 'https://packages.cloud.google.com/apt coral-edgetpu-stable InRelease' does not seem to provide it (sources.list entry misspelt?)

W: Skipping acquire of configured file 'main/source/Sources' as repository 'https://packages.cloud.google.com/apt mendel-eagle InRelease' does not seem to provide it (sources.list entry misspelt?)

All good.

Searching for ffmpeg libsm6 libxext6 mesa-utils curl r...All good.

Checking for .NET 7.0...All good. .NET is 7.0.20

Confirming .NET aspnetcore install present. Version is 7.0.20

Processing CodeProject.AI Server

Processing Included CodeProject.AI Server Modules

Reading module settings.......done

Processing module

This module cannot be installed on this system

Module setup Complete

Setup complete

Total setup time 00:00:19

I'm not clear which module cannot be installed, nor how to fix this. Apologies for what is probably a very basic problem, but any help at all would be appreciated.
