Here we build four automated Jenkins workflows.

In a previous series of articles, we explained how to code the scripts to be executed in our group of Docker containers as part of a CI/CD MLOps pipeline. In this series, we’ll set up a Google Kubernetes Engine (GKE) cluster to deploy these containers.

This article series assumes that you are familiar with the basics of Deep Learning, DevOps, Jenkins, and Kubernetes.

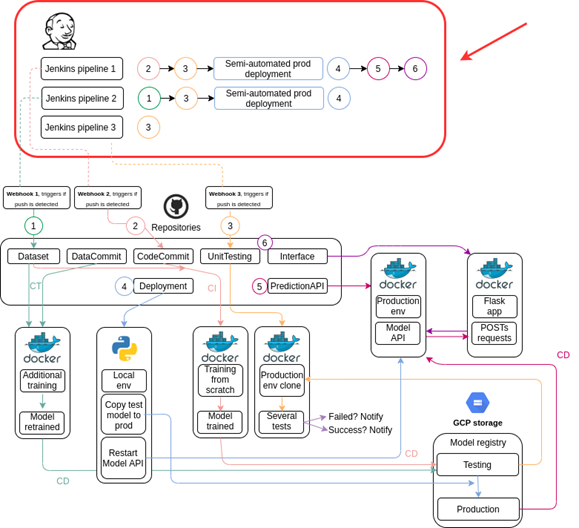

In the previous article of this series, we configured Jenkins to help us chain Docker containers into an actual pipeline, where the containers are automatically built, pushed, and run in the right order. In this article, we'll build the Jenkins workflows (the steps that make up a Jenkins pipeline) needed for the following pipelines:

- If a push is detected in the AutomaticTraining-CodeCommit repository (Pipeline 1 in the diagram below), immediately pull the code, build a container with it (2), push it to Google Container Registry (GCR), and use this image to start a training job in Google Kubernetes Engine. Once training has ended, push the trained model to our GCS/testing registry. Next, pull the AutomaticTraining-UnitTesting repository and build a container with it (3). Follow the same process to test the model previously saved in the testing registry, and send a notification with the pipeline result. If the result is positive, start a semi-automated deployment to production (4), then deploy the container that serves as the prediction service API (5) and, optionally, an interface (6).

- If a push is detected in the AutomaticTraining-Dataset repository (Pipeline 2 in the diagram below), immediately pull it, then pull the AutomaticTraining-DataCommit repository, which contains the code required to build this container (2). Use it to retrain the model in GCS (if the previous pipeline has been triggered), and save the model again if a certain performance metric has been reached. Then trigger the UnitTesting step mentioned before (3) and repeat that cycle (4).

- If a push is detected in the AutomaticTraining-UnitTesting repository (Pipeline 3 in the diagram below), pull it and build a container with it (3) to test the model that resides in the GCS/testing registry. This pipeline lets data scientists run new tests against the most recent model without repeating the previous workflows.

Building Jenkins Workflows

To get our three Jenkins pipelines, we need to develop the six underlying workflows, which build and run the containerized Python scripts that perform each task. In this article, we'll build workflows 1, 2, 3, and 5. Workflow 4 is somewhat more complex; we'll talk about it in the next article.

Workflow 1

On the Jenkins dashboard, select New Item, give the item a name, then select Pipeline, and click OK.

On the next page, select Configure from the left-hand menu. In the Build Triggers section, select the GitHub hook trigger for GITScm polling check box. This enables the workflow to be triggered by GitHub pushes.

Scroll down to the Pipeline section and paste the following script, which handles this workflow's execution:

```groovy
properties([pipelineTriggers([githubPush()])])

pipeline {
    agent any

    environment {
        PROJECT_ID = 'automatictrainingcicd'
        CLUSTER_NAME = 'training-cluster'
        LOCATION = 'us-central1-a'
        CREDENTIALS_ID = 'AutomaticTrainingCICD'
    }

    stages {
        stage('Cloning our GitHub repo') {
            steps {
                // Checking out this repository also makes it the one whose pushes trigger the job
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: 'main']],
                    userRemoteConfigs: [[
                        url: 'https://github.com/sergiovirahonda/AutomaticTraining-CodeCommit.git',
                        credentialsId: ''
                    ]]
                ])
            }
        }

        stage('Building and pushing image to GCR') {
            steps {
                script {
                    // Build the image from the Dockerfile in the workspace and push it to gcr.io
                    docker.withRegistry('https://gcr.io', 'gcr:AutomaticTrainingCICD') {
                        def app = docker.build('automatictrainingcicd/code-commit:latest')
                        app.push('latest')
                    }
                }
            }
        }

        stage('Deploying to GKE') {
            steps {
                // Apply pod.yaml (from the cloned repository) to the GKE cluster
                step([$class: 'KubernetesEngineBuilder', projectId: env.PROJECT_ID, clusterName: env.CLUSTER_NAME, location: env.LOCATION, manifestPattern: 'pod.yaml', credentialsId: env.CREDENTIALS_ID, verifyDeployments: true])
            }
        }
    }

    post {
        unsuccessful {
            echo 'The Jenkins pipeline execution has failed.'
            emailext body: "The '${env.JOB_NAME}' job has failed during its execution. Check the logs for more information.",
                     recipientProviders: [[$class: 'DevelopersRecipientProvider'], [$class: 'RequesterRecipientProvider']],
                     subject: 'A Jenkins pipeline execution has failed.'
        }
        success {
            echo 'The Jenkins pipeline execution has ended successfully, triggering the next one.'
            // Start the unit-testing workflow without waiting for it to finish
            build job: 'AutomaticTraining-UnitTesting', propagate: true, wait: false
        }
    }
}
```

Let's look at the key components of the above code:

- properties([pipelineTriggers([githubPush()])]) makes the workflow run when a push is made to the repository checked out in the script.
- environment defines the environment variables used during the workflow execution. These are the values needed to work with GCP.
- stage('Cloning our GitHub repo') pulls the AutomaticTraining-CodeCommit repository and, in doing so, makes it the repository that triggers the workflow execution.
- stage('Building and pushing image to GCR') builds a container image from the Dockerfile downloaded from that repository and pushes it to GCR.
- stage('Deploying to GKE') uses the image just pushed to GCR (referenced in the pod.yaml file, also downloaded from the repository) to create the Kubernetes job on GKE.
- post handles the outcome. If the pipeline succeeds, it triggers the AutomaticTraining-UnitTesting workflow (3); otherwise, it sends an email to the developers whose changes are in the build and to the user who started it.
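All the workflow scripts in this article repeat the same image-build and GKE-deployment logic. As a sketch of one way to reduce that duplication (a suggested variant, not the author's script), the version of Workflow 1 below moves both steps into helper methods defined at the top level of the Jenkinsfile. The method names buildAndPushImage and deployToGke are hypothetical; the registry URL, credential IDs, and KubernetesEngineBuilder step are the ones used above. The sketch uses the shorter git step for the checkout, calls the helpers from script blocks, and omits the post section, which would stay unchanged.

```groovy
properties([pipelineTriggers([githubPush()])])

// Hypothetical helper: builds the Dockerfile in the current directory and pushes the image to GCR.
def buildAndPushImage(String imageName) {
    docker.withRegistry('https://gcr.io', 'gcr:AutomaticTrainingCICD') {
        def app = docker.build("automatictrainingcicd/${imageName}:latest")
        app.push('latest')
    }
}

// Hypothetical helper: applies pod.yaml from the current directory to the GKE cluster.
def deployToGke() {
    step([$class: 'KubernetesEngineBuilder',
          projectId: env.PROJECT_ID,
          clusterName: env.CLUSTER_NAME,
          location: env.LOCATION,
          manifestPattern: 'pod.yaml',
          credentialsId: env.CREDENTIALS_ID,
          verifyDeployments: true])
}

pipeline {
    agent any

    environment {
        PROJECT_ID = 'automatictrainingcicd'
        CLUSTER_NAME = 'training-cluster'
        LOCATION = 'us-central1-a'
        CREDENTIALS_ID = 'AutomaticTrainingCICD'
    }

    stages {
        stage('Cloning our GitHub repo') {
            steps {
                git url: 'https://github.com/sergiovirahonda/AutomaticTraining-CodeCommit.git', branch: 'main'
            }
        }
        stage('Building and pushing image to GCR') {
            steps {
                // Arbitrary Groovy method calls are wrapped in a script block
                script { buildAndPushImage('code-commit') }
            }
        }
        stage('Deploying to GKE') {
            steps {
                script { deployToGke() }
            }
        }
    }
}
```

Workflows 2, 3, and 5 could then differ only in the repository URL and the image name passed to buildAndPushImage (data-commit, unit-testing, prediction-api).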

Workflow 2

Follow the same steps as you did when building Workflow 1. Enter the script below to build this pipeline:

```groovy
properties([pipelineTriggers([githubPush()])])

pipeline {
    agent any

    environment {
        PROJECT_ID = 'automatictrainingcicd'
        CLUSTER_NAME = 'training-cluster'
        LOCATION = 'us-central1-a'
        CREDENTIALS_ID = 'AutomaticTrainingCICD'
    }

    stages {
        stage('Webhook trigger received. Cloning 1st repository.') {
            steps {
                // Pushes to this repository trigger the workflow
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: 'main']],
                    userRemoteConfigs: [[
                        url: 'https://github.com/sergiovirahonda/AutomaticTraining-Dataset.git',
                        credentialsId: ''
                    ]]
                ])
            }
        }

        stage('Cloning GitHub repo that contains Dockerfile.') {
            steps {
                // This repository holds the Dockerfile and code for the data-commit image
                git url: 'https://github.com/sergiovirahonda/AutomaticTraining-DataCommit.git', branch: 'main'
            }
        }

        stage('Building and pushing image') {
            steps {
                script {
                    docker.withRegistry('https://gcr.io', 'gcr:AutomaticTrainingCICD') {
                        def app = docker.build('automatictrainingcicd/data-commit:latest')
                        app.push('latest')
                    }
                }
            }
        }

        stage('Deploying to GKE') {
            steps {
                step([$class: 'KubernetesEngineBuilder', projectId: env.PROJECT_ID, clusterName: env.CLUSTER_NAME, location: env.LOCATION, manifestPattern: 'pod.yaml', credentialsId: env.CREDENTIALS_ID, verifyDeployments: true])
            }
        }
    }

    post {
        unsuccessful {
            echo 'The Jenkins pipeline execution has failed.'
            emailext body: "The '${env.JOB_NAME}' job has failed during its execution. Check the logs for more information.",
                     recipientProviders: [[$class: 'DevelopersRecipientProvider'], [$class: 'RequesterRecipientProvider']],
                     subject: 'A Jenkins pipeline execution has failed.'
        }
        success {
            echo 'The Jenkins pipeline execution has ended successfully, triggering the next one.'
            build job: 'AutomaticTraining-UnitTesting', propagate: true, wait: false
        }
    }
}
```

The above script carries out almost the same process as Workflow 1, except that it uses the code from the AutomaticTraining-DataCommit repository to build the container. Also, this pipeline is triggered by any push to the AutomaticTraining-Dataset repository. At the end, if successful, it triggers the AutomaticTraining-UnitTesting workflow (3).
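In Workflow 2, both repositories are checked out into the same workspace, so the DataCommit checkout reuses the directory that holds the Dataset clone. If you want to keep the two apart, the sketch below (a suggested variant, not the author's script) replaces the stages block and clones each repository into its own subdirectory with the dir step, then builds and deploys from the DataCommit folder. The directory names dataset and datacommit are hypothetical. It also passes poll: false and changelog: false to the DataCommit checkout, assuming you want only pushes to the Dataset repository to start this job: the GitHub hook trigger works through SCM polling, so excluding a checkout from polling should keep its pushes from triggering a build.

```groovy
// Suggested replacement for the stages block of Workflow 2.
// Hypothetical directory names: 'dataset' and 'datacommit'.
stages {
    stage('Webhook trigger received. Cloning 1st repository.') {
        steps {
            dir('dataset') {
                // This checkout registers the Dataset repository for the githubPush() trigger
                git url: 'https://github.com/sergiovirahonda/AutomaticTraining-Dataset.git', branch: 'main'
            }
        }
    }

    stage('Cloning GitHub repo that contains Dockerfile.') {
        steps {
            dir('datacommit') {
                // poll: false excludes this checkout from SCM polling;
                // changelog: false leaves its commits out of the build changelog
                git url: 'https://github.com/sergiovirahonda/AutomaticTraining-DataCommit.git', branch: 'main', poll: false, changelog: false
            }
        }
    }

    stage('Building and pushing image') {
        steps {
            script {
                dir('datacommit') {
                    // docker.build uses the current directory (datacommit) as the build context
                    docker.withRegistry('https://gcr.io', 'gcr:AutomaticTrainingCICD') {
                        def app = docker.build('automatictrainingcicd/data-commit:latest')
                        app.push('latest')
                    }
                }
            }
        }
    }

    stage('Deploying to GKE') {
        steps {
            dir('datacommit') {
                // The step runs with datacommit as its working directory, so pod.yaml is found there
                step([$class: 'KubernetesEngineBuilder', projectId: env.PROJECT_ID, clusterName: env.CLUSTER_NAME, location: env.LOCATION, manifestPattern: 'pod.yaml', credentialsId: env.CREDENTIALS_ID, verifyDeployments: true])
            }
        }
    }
}
```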

Workflow 3

This workflow performs unit testing of the model available in the GCS/testing registry. It's triggered when a change is pushed to the AutomaticTraining-UnitTesting repository, and it can also be triggered by Workflow 1 or Workflow 2. The script that builds this pipeline is as follows:

```groovy
properties([pipelineTriggers([githubPush()])])

pipeline {
    agent any

    environment {
        PROJECT_ID = 'automatictrainingcicd'
        CLUSTER_NAME = 'training-cluster'
        LOCATION = 'us-central1-a'
        CREDENTIALS_ID = 'AutomaticTrainingCICD'
    }

    stages {
        stage('Waiting for the previous training to complete') {
            steps {
                echo 'Waiting for the previous training job to finish'
                // sleep defaults to seconds: 1,200 seconds = 20 minutes
                sleep(1200)
                echo 'Waiting period ended'
            }
        }

        stage('Cloning our GitHub repo') {
            steps {
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: 'main']],
                    userRemoteConfigs: [[
                        url: 'https://github.com/sergiovirahonda/AutomaticTraining-UnitTesting.git',
                        credentialsId: ''
                    ]]
                ])
            }
        }

        stage('Building and pushing image') {
            steps {
                script {
                    docker.withRegistry('https://gcr.io', 'gcr:AutomaticTrainingCICD') {
                        def app = docker.build('automatictrainingcicd/unit-testing:latest')
                        app.push('latest')
                    }
                }
            }
        }

        stage('Deploying to GKE') {
            steps {
                step([$class: 'KubernetesEngineBuilder', projectId: env.PROJECT_ID, clusterName: env.CLUSTER_NAME, location: env.LOCATION, manifestPattern: 'pod.yaml', credentialsId: env.CREDENTIALS_ID, verifyDeployments: true])
            }
        }
    }

    post {
        unsuccessful {
            echo 'The Jenkins pipeline execution has failed.'
            emailext body: "The '${env.JOB_NAME}' job has failed during its execution. Check the logs for more information.",
                     recipientProviders: [[$class: 'DevelopersRecipientProvider'], [$class: 'RequesterRecipientProvider']],
                     subject: 'A Jenkins pipeline execution has failed.'
        }
        success {
            echo 'The Jenkins pipeline execution has ended successfully, check the GCP logs for more information.'
        }
    }
}
```

The above script differs only slightly from the previous ones. Before doing anything else, it waits 1,200 seconds (20 minutes) to give the training job launched by Workflow 1 or Workflow 2 enough time to complete. Also, after unit testing the model, it emails the result to the product owner, letting them know whether they need to initiate the semi-automated deployment to production (4).
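A fixed 1,200-second sleep is a guess: if training takes longer, the tests may run against a stale model, and if it finishes sooner, the pipeline sits idle. One possible alternative, sketched below as a replacement for Workflow 3's waiting stage, is to ask Kubernetes directly whether the training Job has finished, using kubectl wait. This is not the author's approach and rests on assumptions: gcloud and kubectl are installed and authenticated on the Jenkins agent (sh steps do not reuse the Kubernetes Engine plugin's credentials), and training-job is a hypothetical name that must match metadata.name in the training pod.yaml. Workflows 1 and 2 may use different Job names, in which case you would check both.

```groovy
// Suggested replacement for Workflow 3's fixed-sleep stage.
// Assumptions: gcloud and kubectl are available and authenticated on the agent;
// 'training-job' is a hypothetical Job name taken from the training pod.yaml.
stage('Waiting for the training job to complete') {
    steps {
        // Fetch cluster credentials so kubectl can reach the GKE cluster
        sh "gcloud container clusters get-credentials ${env.CLUSTER_NAME} --zone ${env.LOCATION} --project ${env.PROJECT_ID}"
        script {
            def jobName = 'training-job'
            // Exit status 0 means the Job exists; no Job means there is nothing to wait for
            def jobExists = sh(script: "kubectl get job/${jobName}", returnStatus: true) == 0
            if (jobExists) {
                // Block until the Job reports the Complete condition, failing after 30 minutes
                sh "kubectl wait --for=condition=complete job/${jobName} --timeout=30m"
            } else {
                echo "No Job named ${jobName} found; skipping the wait."
            }
        }
    }
}
```

Note that waiting for condition=complete does not return early if the training Job fails; in that case the sh step fails only when the 30-minute timeout expires, which in turn triggers the unsuccessful notification.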

Workflow 5

This workflow pulls the code from the AutomaticTraining-PredictionAPI repository (5) and builds a container that provides the prediction service. The container loads the model that the semi-automated deployment to production (4) copied into the production registry, and it responds to POST requests with JSON-formatted predictions. The workflow script is as follows:

```groovy
pipeline {
    agent any

    environment {
        PROJECT_ID = 'automatictrainingcicd'
        CLUSTER_NAME = 'training-cluster'
        LOCATION = 'us-central1-a'
        CREDENTIALS_ID = 'AutomaticTrainingCICD'
    }

    stages {
        stage('Cloning our GitHub repo') {
            steps {
                git url: 'https://github.com/sergiovirahonda/AutomaticTraining-PredictionAPI.git', branch: 'main'
            }
        }

        stage('Building and pushing image') {
            steps {
                script {
                    docker.withRegistry('https://gcr.io', 'gcr:AutomaticTrainingCICD') {
                        def app = docker.build('automatictrainingcicd/prediction-api:latest')
                        app.push('latest')
                    }
                }
            }
        }

        stage('Deploying to GKE') {
            steps {
                step([$class: 'KubernetesEngineBuilder', projectId: env.PROJECT_ID, clusterName: env.CLUSTER_NAME, location: env.LOCATION, manifestPattern: 'pod.yaml', credentialsId: env.CREDENTIALS_ID, verifyDeployments: true])
            }
        }
    }

    post {
        unsuccessful {
            echo 'The Jenkins pipeline execution has failed.'
            emailext body: "The '${env.JOB_NAME}' job has failed during its execution. Check the logs for more information.",
                     recipientProviders: [[$class: 'DevelopersRecipientProvider'], [$class: 'RequesterRecipientProvider']],
                     subject: 'A Jenkins pipeline execution has failed.'
        }
        success {
            echo 'The Jenkins pipeline execution has ended successfully, triggering the next one.'
            // Start the optional web interface workflow without waiting for it
            build job: 'AutomaticTraining-Interface', propagate: true, wait: false
        }
    }
}
```

At the end, the script triggers a workflow called AutomaticTraining-Interface. This is a "bonus" that provides a web interface to the end user. We won’t discuss it in this series; however, you can find all the relevant files in the workflow’s repository.

Triggering Workflows with GitHub Webhooks

If you run Jenkins locally in a private network, you need to install SocketXP or a similar service so that GitHub can reach it. Such a service exposes the Jenkins server, reachable at http://localhost:8080, to the external world, including GitHub.

To install SocketXP, select your OS type and follow the instructions the website provides.

To create a secure tunnel for Jenkins, issue this command:

socketxp connect http:

The response includes a public URL for your Jenkins server.

To trigger our workflows on the local Jenkins server, we need to create the GitHub webhooks. Go to the repository that triggers a workflow and select Settings > Webhooks > Add webhook. Paste the public URL in the Payload URL field, add "/github-webhook/" at the end, and click Add webhook.

Once this is done, pushing new code to the corresponding repository should trigger the workflow.

Configure webhooks for the AutomaticTraining-CodeCommit, AutomaticTraining-Dataset and AutomaticTraining-UnitTesting repositories to properly trigger continuous integration in our pipelines.

Next Steps

In the next article, we’ll develop a semi-automated deployment-to-production script, which will complete our project. Stay tuned!

Sergio Virahonda grew up in Venezuela, where he obtained a bachelor's degree in Telecommunications Engineering. He moved abroad 4 years ago and has since been focused on building a meaningful data science career. He currently lives in Argentina, writing code as a freelance developer.