Visit Part 1: Data Visualizations and Bezier-Curves

Visit Part 2: Bezier Curve Machine Learning Demonstration

Introduction

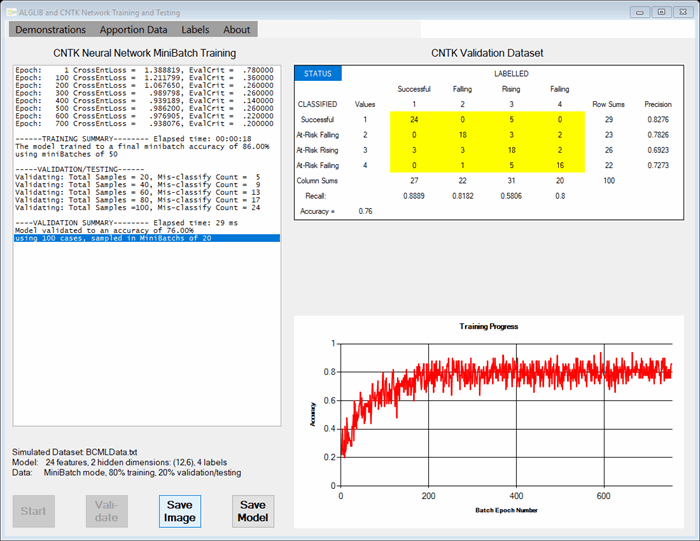

This article is Part 3 in a series. Part 1 demonstrated how Bezier curves can smooth large fluctuations between data points and make the underlying patterns easier to see. Part 2 focused on using ALGLIB machine learning algorithms to train models that recognize patterns and trends in Bezier curve trajectories of student academic performance. Here, we use the same WinForm user interface and extend the demonstration code to examine several common Microsoft CNTK data formats, as well as CNTK classification and evaluation models trained with batch and mini-batch methods.

The demonstration code and UI reflect well-known approaches to model building, training, validation, and testing for binary or multi-class classification tasks. We train on a randomly selected portion of the dataset and validate/test the trained model on the remaining portion.
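A random train/validate split of this kind can be sketched as a Fisher-Yates shuffle followed by a cut at a chosen training fraction. This is a minimal sketch, not the demo's DataReader code; the `DataSplit` class, the `ShuffleAndSplit` method, and the seed parameter are hypothetical names chosen for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (not part of the demo project): shuffle the records, then split them
// into a training portion and a validation/test portion.
public static class DataSplit
{
    public static (List<T> Train, List<T> Validate) ShuffleAndSplit<T>(
        IEnumerable<T> records, double trainFraction, int seed)
    {
        if (trainFraction <= 0.0 || trainFraction >= 1.0)
            throw new ArgumentOutOfRangeException(nameof(trainFraction));

        var items = records.ToList();
        var rng = new Random(seed);

        // Fisher-Yates shuffle: swap each position with a random position at or before it
        for (int i = items.Count - 1; i > 0; i--)
        {
            int j = rng.Next(i + 1);
            (items[i], items[j]) = (items[j], items[i]);
        }

        // The first trainFraction of the shuffled records trains; the rest validates/tests
        int nTrain = (int)Math.Round(items.Count * trainFraction);
        return (items.Take(nTrain).ToList(), items.Skip(nTrain).ToList());
    }
}
```

For example, with 500 records and a training fraction of 0.6, this yields 300 training and 200 validation records; a fixed seed makes a run repeatable, while a time-based seed gives a fresh split each time.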

When it comes to models, there are many choices, and it is always good to compare results. For that reason, I left the ALGLIB neural network and decision forest modules in the demonstration alongside the new CNTK modules. This means that to run the code, you need the ALGLIB314.dll library as well as the CNTK for C# 2.5.1 NuGet package as project references. Part 2 of this series explained how to obtain the ALGLIB free edition source and walked through the steps for building that DLL in Visual Studio.

Background

This demonstration features CNTK for C# from Microsoft, a powerful, dynamic tool that offers C# programmers many advanced machine learning methods. However, CNTK presents users with a rather steep learning curve, and its documentation is not yet as complete as it should be. More recently, answers to many specific questions have begun to appear on sources like Stack Overflow, and more detailed code examples are being published on GitHub. In particular, one CodeProject contributor, Bahrudin Hrnjica, has published a whole series of excellent CNTK articles that I have found most helpful, beginning here with IRIS dataset examples and further documented in his technical blog posts at http://bhrnjica.net. Other references include Microsoft source documentation such as Using CNTK with C#/.NET API.

Data Characteristics

The previous articles in this data visualization series, Data Visualizations and Bezier Curves and Bezier Curve Machine Learning Demonstration, were about modeling data with Bezier curves. As examples, we looked at student academic performance over time, from grades 6 through 12, modeled using Bezier curves. We discussed machine learning classification models that could be trained to recognize various patterns, such as longitudinal trajectories that identify students who appear to be doing well or are likely to be at risk.

Here, we will use the same small simulated sample (N=500) of school marking period grade point average (MPgpa) student performance histories in core coursework as was used in Part 2. MPgpas are bounded from 0.00 to 4.00 on the grading scale. (Please review the previous articles for a fuller description of the data source.)

Our small demonstration dataset was built by selecting pre-classified student histories at random, while ensuring that each status group was represented in a balanced way. Each curve was assigned one of four status labels, indicating:

- a relatively successful academic history up to the status estimation point (9.0, end of middle school) on the curriculum timeline

- a possibly at-risk history, due to a falling MPgpa pattern below 2.0 on the grading scale

- a still at-risk history, but one exhibiting a rising MPgpa pattern toward or slightly above 2.0

- a serious risk of academic failure, with a pattern trending below 1.0

Working with CNTK

MS CNTK for C# provides multi-threaded code for running on CPUs or GPUs. Here, we use the CPU as the default device, since the demo references the CPU-only version. MS CNTK for C# requires x64 compilation, so before building the demonstration project, check the Configuration Manager to ensure that Debug and Release builds for all projects in the solution are set to x64. (“Any CPU” will not work.)
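Because an “Any CPU” build can end up running as a 32-bit process (for example, when “Prefer 32-bit” is checked), a fail-fast check at startup makes a misconfigured build easier to diagnose. This is a minimal sketch; the `PlatformGuard` class is a hypothetical addition, not part of the demo, and relies only on the standard .NET `Environment.Is64BitProcess` property.

```csharp
using System;

// Hypothetical startup guard (not part of the demo project).
public static class PlatformGuard
{
    // True when the current process is 64-bit, which the CNTK for C# native libraries require
    public static bool IsX64Process => Environment.Is64BitProcess;

    // Throws a descriptive exception up front instead of failing later when CNTK's native DLLs load
    public static void EnsureX64()
    {
        if (!Environment.Is64BitProcess)
            throw new PlatformNotSupportedException(
                "CNTK for C# requires a 64-bit process: set the platform to x64 in Configuration Manager.");
    }
}
```

Calling `PlatformGuard.EnsureX64()` at the top of `Main` (or in the form constructor) would surface the problem before any CNTK call is made.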

CNTK requires data in various formats and provides tools for reshaping that data as needed. One such format is the |features … |label … layout of the CNTK Text Format (CTF), with each label written as a one-hot vector; the demo uses it for mini-batch processing. For our data, it looks like this:

```
|features 2.383 2.624888 2.740712 2.794445 2.814961 2.816335 2.807138 2.793529 2.779638 2.767359 2.756607 2.74636 2.736153 2.727306 2.723097 2.727629 2.744186 2.77428 2.817792 2.873763 2.941018 3.018575 3.105723 3.202 |label 1 0 0 0
|features 1.134 1.090346 1.049686 1.012011 0.9773244 0.9455433 0.9165261 0.8900167 0.8656217 0.8427486 0.8205234 0.7977431 0.7727986 0.7436811 0.7081886 0.6644097 0.611671 0.5517164 0.4896953 0.4342301 0.3965976 0.3895209 0.4262405 0.52 |label 0 0 1 0
…
```
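A record in this layout can be produced from a feature vector and a 0-based class index. This is a minimal sketch, not the demo's DataReader code; `CtfWriter` and `ToCtfLine` are hypothetical names, and invariant-culture formatting is an assumption made so that decimal points are always written as “.” regardless of the machine's locale.

```csharp
using System;
using System.Globalization;
using System.Text;

// Hypothetical helper (not part of the demo project) that writes one CTF record.
public static class CtfWriter
{
    // Builds "|features f1 f2 ... |label l1 l2 ..." with a one-hot label vector
    public static string ToCtfLine(float[] features, int classIndex, int numClasses)
    {
        if (classIndex < 0 || classIndex >= numClasses)
            throw new ArgumentOutOfRangeException(nameof(classIndex));

        var sb = new StringBuilder("|features");
        foreach (float f in features)
            sb.Append(' ').Append(f.ToString(CultureInfo.InvariantCulture));

        sb.Append(" |label");
        for (int k = 0; k < numClasses; k++)
            sb.Append(k == classIndex ? " 1" : " 0");

        return sb.ToString();
    }
}
```

Writing one such line per record gives a text file of the kind CNTK's text-format minibatch source (in the C# API, `MinibatchSource.TextFormatMinibatchSource`) reads during mini-batch training.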

Another important CNTK format is simply a flat 1D data vector: one vector for features and one for labels, with one such pair for the training dataset and another pair for the validation/test dataset. In this new demo, the DataReader object has been modified to provide in-memory datasets in these formats.
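The 1D layout simply concatenates records one after another. This is a minimal sketch, assuming features arrive as one `float[]` per record and labels as 0-based class indices; `OneDFormat` and its methods are hypothetical and are not the demo's DataReader members. Arrays built this way match what the training code passes to `Value.CreateBatch<float>(new NDShape(1, inputDim), ...)`, which treats each consecutive run of `inputDim` values as one sample.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helpers (not part of the demo project) that build flat 1D arrays.
public static class OneDFormat
{
    // Concatenates feature vectors record after record: [r0f0, r0f1, ..., r1f0, r1f1, ...]
    public static float[] FlattenFeatures(IReadOnlyList<float[]> records, int featureDim)
    {
        var flat = new float[records.Count * featureDim];
        for (int r = 0; r < records.Count; r++)
        {
            if (records[r].Length != featureDim)
                throw new ArgumentException($"Record {r} has {records[r].Length} features, expected {featureDim}.");
            Array.Copy(records[r], 0, flat, r * featureDim, featureDim);
        }
        return flat;
    }

    // One-hot encodes each class index and concatenates the label vectors in the same record order
    public static float[] FlattenOneHotLabels(IReadOnlyList<int> classIndices, int numClasses)
    {
        var flat = new float[classIndices.Count * numClasses];
        for (int r = 0; r < classIndices.Count; r++)
        {
            int c = classIndices[r];
            if (c < 0 || c >= numClasses)
                throw new ArgumentOutOfRangeException(nameof(classIndices));
            flat[r * numClasses + c] = 1f;
        }
        return flat;
    }
}
```

Keeping features and labels in the same record order is what lets CNTK pair sample k of the feature batch with sample k of the label batch.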

Using the Code

In this “BezierCurveMachineLearningDemoCNTK” project, written in C# using Visual Studio 2017 and .NET 4.7, we first read a data file, construct student histories, and shuffle the records randomly. From each history, a sub-history is extracted, and each sub-history is associated with a pre-classified status value. Next, a Bezier curve is fitted to model each student sub-history. That smooth Bezier curve is in turn represented by a list of 24 MPgpa values extracted at equal time intervals from its starting point to its ending point, inclusive. The status value and these 24 values become the label and the features used to train and validate the various machine learning classification models.
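Extracting 24 equally spaced values from a fitted curve can be sketched with de Casteljau's algorithm. This is a minimal sketch, not the demo's Bezier code; `BezierSampler` is hypothetical, and it samples at equal steps of the curve parameter t. Those steps coincide with equal time steps only when the control points' time (X) coordinates are evenly spaced, because x(t) is then linear in t; the sketch assumes that case.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not the demo's Bezier code): sample a fitted Bezier curve.
public static class BezierSampler
{
    // Evaluates the curve at parameter t in [0, 1] using de Casteljau's algorithm
    public static (double X, double Y) Evaluate(IReadOnlyList<(double X, double Y)> ctrl, double t)
    {
        int n = ctrl.Count;
        if (n == 0) throw new ArgumentException("At least one control point is required.");
        var px = new double[n];
        var py = new double[n];
        for (int i = 0; i < n; i++) { px[i] = ctrl[i].X; py[i] = ctrl[i].Y; }

        // Repeated linear interpolation between neighbouring points until one point remains
        for (int r = 1; r < n; r++)
            for (int i = 0; i < n - r; i++)
            {
                px[i] = (1 - t) * px[i] + t * px[i + 1];
                py[i] = (1 - t) * py[i] + t * py[i + 1];
            }
        return (px[0], py[0]);
    }

    // Returns the Y (MPgpa) values at `count` equally spaced t values from 0 to 1 inclusive
    public static double[] SampleY(IReadOnlyList<(double X, double Y)> ctrl, int count = 24)
    {
        if (count < 2) throw new ArgumentOutOfRangeException(nameof(count));
        var ys = new double[count];
        for (int k = 0; k < count; k++)
            ys[k] = Evaluate(ctrl, (double)k / (count - 1)).Y;
        return ys;
    }
}
```

With the default `count` of 24, the returned array has the same length as the feature vectors shown in the CTF sample.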

Download the project and open the solution file in Visual Studio. In Solution Explorer, right-click the project's References folder and delete any existing reference to the ALGLIB314.dll library. Then use the Add Reference option to locate and add the release version of the ALGLIB314.dll library (which you created in Part 2 of this series) to the project. Because MS CNTK for C# requires x64 compilation, check the Configuration Manager, as mentioned above, to ensure that Debug and Release builds for all projects in the solution are set to x64.

Then click Start to build and run the application. The solution requires two NuGet packages (CNTK for C# 2.5.1 and System.ValueTuple 4.3.1), which should download and restore automatically. A WinForm should open with a listbox, a chart, a datagridview, and a few buttons; other details are explained in Part 2 of this series. For this demonstration, additional menu options let you select CNTK model training with batch or mini-batch data processing.

Points of Interest

The philosophy here is that most of the benefit of a CodeProject “demonstration” should come from examining the code. Readers are certainly encouraged to do that (any suggestions for improvements will be appreciated). Much, if not most, of the code is unremarkable, building the form and the demo user interface. The most important methods are those that create and manipulate the datasets (discussed above) and those that call the actual machine learning processes implemented by CNTK.

TRAINING MODELS: This demonstration illustrates CNTK mini-batch training using the |features … |label … format (with the data saved as a disk file) and batch training using the 1D format (with the data stored in memory). For instance, here is the batch-processing code from the demo's CNTKTraining class. It defines and trains a (24:12:6:4) neural network, an architecture that has been found adequate for classifying Bezier curves representing academic performance. (Much of the code in this class has been adapted from example source code provided by Bahrudin Hrnjica in the references noted above.)

```csharp
using System;
using System.Collections.Generic;
using CNTK;

// Form1, CreateFFNN, ACTIVATION, Charts, ModelInfoSummary_B, PrintTrainingProgress and
// TrainingSummary_B are defined elsewhere in the demo project.
class CNTKTraining
{
    public static CNTK.Function CNTK_ffnn_model;
    private static CNTK.Trainer _trainer;
    private static double _accuracy;

    public static void RunExample3()
    {
        // Network dimensions: one input per feature, hidden layers of 12 and 6 nodes, one output per class
        int inputDim = Form1.rdr.NofFeatures;
        int numOutputClasses = Form1.rdr.NofLabels;
        int[] hiddenLayerDim = new int[] { 12, 6 };
        int numHiddenLayers = hiddenLayerDim.Length;

        // Batch training: the whole training set is presented as a single minibatch
        uint batchSize = (uint)Form1.rdr.NofTrainingCases;

        ModelInfoSummary_B(hiddenLayerDim);

        // Wrap the flat (1D) in-memory training arrays as CNTK batch values
        var xValues = Value.CreateBatch<float>(new NDShape(1, inputDim),
            Form1.rdr.GetCNTK_Train_1DFeatures, Form1.device);
        var yValues = Value.CreateBatch<float>(new NDShape(1, numOutputClasses),
            Form1.rdr.GetCNTK_Train_1DLabels, Form1.device);

        var features = Variable.InputVariable(new NDShape(1, inputDim), DataType.Float);
        var labels = Variable.InputVariable(new NDShape(1, numOutputClasses), DataType.Float);

        var dic = new Dictionary<Variable, Value>
        {
            { features, xValues },
            { labels, yValues }
        };

        CNTK_ffnn_model = CreateFFNN(features, numHiddenLayers, hiddenLayerDim,
            numOutputClasses, ACTIVATION.Tanh, "DemoData", Form1.device);

        // Loss to minimize and error metric to report
        var trainingLoss = CNTKLib.CrossEntropyWithSoftmax(new Variable(CNTK_ffnn_model),
            labels, "lossFunction");
        var classError = CNTKLib.ClassificationError(new Variable(CNTK_ffnn_model),
            labels, "classificationError");

        // Piecewise learning-rate schedule of (number of epochs, value) pairs
        PairSizeTDouble p1 = new PairSizeTDouble(1, 0.05);
        PairSizeTDouble p2 = new PairSizeTDouble(2, 0.03);
        PairSizeTDouble p3 = new PairSizeTDouble(2, 0.02);
        var vlr = new VectorPairSizeTDouble() { p1, p2, p3 };
        var learningRatePerEpoch = new CNTK.TrainingParameterScheduleDouble(vlr, batchSize);

        // Piecewise momentum schedule, built the same way
        PairSizeTDouble m1 = new PairSizeTDouble(1, 0.005);
        PairSizeTDouble m2 = new PairSizeTDouble(2, 0.001);
        PairSizeTDouble m3 = new PairSizeTDouble(2, 0.0005);
        var vm = new VectorPairSizeTDouble() { m1, m2, m3 };
        var momentumSchedulePerSample = new CNTK.TrainingParameterScheduleDouble(vm, batchSize);

        var myLearner = Learner.MomentumSGDLearner(CNTK_ffnn_model.Parameters(),
            learningRatePerEpoch, momentumSchedulePerSample, true);

        _trainer = Trainer.CreateTrainer(CNTK_ffnn_model, trainingLoss,
            classError, new Learner[] { myLearner });

        int epoch = 1;
        int epochmax = 4000;
        int reportcycle = 200;
        double prevCE = 0.0;
        double currCE = 0.0;
        double losscriterion = 0.00001;
        var traindic = new Dictionary<int, double>();

        while (epoch <= epochmax)
        {
            // One pass over the whole in-memory training batch
            _trainer.TrainMinibatch(dic, true, Form1.device);

            // Stop early once the average loss changes by less than losscriterion
            currCE = _trainer.PreviousMinibatchLossAverage();
            if (Math.Abs(prevCE - currCE) < losscriterion) break;
            prevCE = currCE;

            // Record and display training accuracy for this epoch
            _accuracy = 1.0 - _trainer.PreviousMinibatchEvaluationAverage();
            traindic.Add(epoch, _accuracy);
            PrintTrainingProgress(_trainer, epoch, reportcycle);
            Charts.ChartAddaPoint(epoch, _accuracy);
            epoch++;
        }

        TrainingSummary_B();
        // …
    }
    // …
}
```

As you can see, the code first does some further data processing and reshaping. Most of the time, this would also include a normalization step. In our case, however, the Bezier curve features are already MPgpa values bounded between 0.0 and 4.0, and the models seem to work fine with data in that range. Next, the network is defined: a 24-node input layer for the 24 points describing a Bezier curve; two hidden layers, the first with 12 nodes and the second with 6 nodes; and an output layer with 4 nodes for four-class classification (or 2 nodes for binary classification). Then various parameters (e.g., learning rate and momentum schedules) are set. These are used to define a learner (e.g., the built-in SGDLearner or MomentumSGDLearner or, as another example, the AdamLearner provided in CNTK.CNTKLib). Given the learner, a trainer is created. In each epoch, the whole training batch is presented to the trainer, and “step-wise” intermediate output is produced. This output is used here to update the listbox information table and the Training Progress chart. Training stops after 4,000 epochs, or earlier once the average loss changes by less than 0.00001 between successive epochs.
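To make the schedules concrete, here is a small lookup that mirrors our reading of CNTK's piecewise schedule semantics: each (n, v) pair holds the value v for n * epochSize samples, and the final value persists after the list runs out. Treat it as an illustration under that assumption; `PiecewiseSchedule.ValueAt` is hypothetical and is not part of the CNTK API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration (not a CNTK API): look up the value of a piecewise schedule.
public static class PiecewiseSchedule
{
    // Each (Count, Value) pair holds Value for Count * epochSize samples; the last value persists
    public static double ValueAt(IReadOnlyList<(int Count, double Value)> schedule,
                                 long epochSize, long samplesSeen)
    {
        if (schedule.Count == 0) throw new ArgumentException("Schedule is empty.");
        long boundary = 0;
        foreach (var (count, value) in schedule)
        {
            boundary += count * epochSize;
            if (samplesSeen < boundary) return value;
        }
        return schedule[schedule.Count - 1].Value;
    }
}
```

In the batch code, epochSize is the number of training cases and each TrainMinibatch call consumes the whole training set, so under this reading the learning-rate pairs (1, 0.05), (2, 0.03), (2, 0.02) would give 0.05 for epoch 1, 0.03 for epochs 2 and 3, and 0.02 from epoch 4 onward.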

Once the model is trained, it is a simple matter to save it as a disk file and to reload that model into a different program for classifying new data records. CNTK provides functions for this purpose, e.g.:

```csharp
public static CNTK.Function CNTK_ffnn_model;
public static CNTK.Trainer _trainer;
// …

public static void SaveTrainedCNTKModel(string pathname)
{
    // Save the trained model, plus a trainer checkpoint alongside it
    CNTK_ffnn_model.Save(pathname);
    _trainer.SaveCheckpoint(pathname + "(CKP)");
}
```

and:

```csharp
// Requires using System.IO; for File and FileNotFoundException
public static void LoadTrainedCNTKModel(string networkPathName)
{
    if (!File.Exists(networkPathName))
        throw new FileNotFoundException("CNTK_NN Classifier file not found.", networkPathName);

    CNTK_ffnn_model = Function.Load(networkPathName, Form1.device, ModelFormat.CNTKv2);
}
```

NOTE: In this demonstration, all data files are either copied to or saved in the project's runtime “Application folder” (e.g., the x64 Debug or Release folder).
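Building full paths against that folder keeps file access independent of the current working directory. This is a minimal sketch; `AppFolder` and its members are hypothetical, and only the standard .NET `AppDomain.CurrentDomain.BaseDirectory` property and `Path.Combine` method are assumed.

```csharp
using System;
using System.IO;

// Hypothetical helper (not part of the demo project).
public static class AppFolder
{
    // The folder the running application was loaded from (e.g., the x64 Debug or Release output folder)
    public static string BaseDirectory => AppDomain.CurrentDomain.BaseDirectory;

    // Builds a full path for a data or model file stored in the application folder
    public static string PathFor(string fileName) => Path.Combine(BaseDirectory, fileName);
}
```

A call such as `SaveTrainedCNTKModel(AppFolder.PathFor("DemoModel.dnn"))` would then write next to the executable; the file name here is only an example.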

VALIDATION AND TESTING: Once training is complete, you will want to apply the trained model to the validation/test data. A trained CNTK model carries its own definitional information, such as its input and output variables and their shapes, which can be extracted from it. Typically, you will see code similar to this EvaluateModel_Batch routine, which uses in-memory data in the 1D format along with CNTK data reshaping. The demonstration also includes a more complex CNTK Evaluate_MiniBatch method, as well as a somewhat simpler MLModel IClassifier object for production runs. (It is important to note that trained CNTK models are NOT thread-safe. However, the IClassifier object is thread-safe and will be described in detail in the next article in this series.)

```csharp
using System.Collections.Generic;
using System.Linq;
using CNTK;

// Form1, ValidationSummary_B and Support.RunCrosstabs are defined elsewhere in the demo project.
class CNTKTesting
{
    private static double _accuracy;

    public static void EvaluateModel_Batch(Function ffnn_model, bool validationFlag = false)
    {
        // Recover the input and output variables (and their sizes) from the trained model
        var features = (Variable)ffnn_model.Arguments[0];
        var labels = ffnn_model.Outputs[0];
        int inputDim = features.Shape.TotalSize;
        int numOutputClasses = labels.Shape.TotalSize;

        // Use the validation data or the training data, both held in memory in the 1D format
        Value xValues, yValues;
        if (validationFlag)
        {
            xValues = Value.CreateBatch<float>(new NDShape(1, inputDim),
                Form1.rdr.GetCNTK_Validate_1DFeatures, Form1.device);
            yValues = Value.CreateBatch<float>(new NDShape(1, numOutputClasses),
                Form1.rdr.GetCNTK_Validate_1DLabels, Form1.device);
        }
        else
        {
            xValues = Value.CreateBatch<float>(new NDShape(1, inputDim),
                Form1.rdr.GetCNTK_Train_1DFeatures, Form1.device);
            yValues = Value.CreateBatch<float>(new NDShape(1, numOutputClasses),
                Form1.rdr.GetCNTK_Train_1DLabels, Form1.device);
        }

        var inputDataMap = new Dictionary<Variable, Value>() { { features, xValues } };
        var expectedDataMap = new Dictionary<Variable, Value>() { { labels, yValues } };
        var outputDataMap = new Dictionary<Variable, Value>() { { labels, null } };

        // Expected class = position of the 1 in each one-hot label vector
        var expectedData = expectedDataMap[labels].GetDenseData<float>(labels);
        var expectedLabels = expectedData.Select(l => l.IndexOf(l.Max())).ToList();

        // Run the model; predicted class = position of the largest output value
        ffnn_model.Evaluate(inputDataMap, outputDataMap, Form1.device);
        var outputData = outputDataMap[labels].GetDenseData<float>(labels);
        var actualLabels = outputData.Select(l => l.IndexOf(l.Max())).ToList();

        if (validationFlag)
        {
            int NofMatches = actualLabels.Zip(expectedLabels,
                (a, b) => a.Equals(b) ? 1 : 0).Sum();
            int totalCount = expectedLabels.Count;  // number of samples in the batch
            _accuracy = (double)NofMatches / totalCount;
            ValidationSummary_B();
        }

        // Convert 0-based class indices to 1-based status codes for the crosstab display
        var predict = new List<int>();
        var status = new List<int>();
        for (int k = 0; k < actualLabels.Count; k++)
        {
            predict.Add(actualLabels[k] + 1);
            status.Add(expectedLabels[k] + 1);
        }

        Support.RunCrosstabs(predict, status);
    }
    // …
}
```

The crosstabs.cs module contains methods to display actual and predicted classification results in a “confusion” matrix format in the datagridview control in the WinForm, along with various precision, recall, and accuracy statistics. Readers interested in using that routine for their own purposes are referred to another CodeProject article entitled Crosstabs/Confusion Matrix for AI Classification Projects that I wrote some time ago.
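The statistics such a table reports can be computed directly from the 1-based predicted and actual status lists that EvaluateModel_Batch passes to Support.RunCrosstabs. This is a minimal sketch, not the crosstabs.cs implementation; `ConfusionStats` is a hypothetical class, rows are actual classes and columns are predicted classes (a layout choice that may differ from the demo's display), and an empty row or column yields 0.0 by convention.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch (not the author's crosstabs.cs): confusion matrix and summary statistics.
public static class ConfusionStats
{
    // m[actual - 1, predicted - 1] counts, for 1-based class codes 1..numClasses
    public static int[,] Build(IReadOnlyList<int> predicted, IReadOnlyList<int> actual, int numClasses)
    {
        if (predicted.Count != actual.Count) throw new ArgumentException("Length mismatch.");
        var m = new int[numClasses, numClasses];
        for (int k = 0; k < actual.Count; k++)
            m[actual[k] - 1, predicted[k] - 1]++;
        return m;
    }

    // Share of all cases on the diagonal (predicted == actual)
    public static double Accuracy(int[,] m)
    {
        int n = m.GetLength(0), correct = 0, total = 0;
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
            {
                total += m[i, j];
                if (i == j) correct += m[i, j];
            }
        return total == 0 ? 0.0 : (double)correct / total;
    }

    // Precision for class code c: correct predictions of c / all predictions of c (column sum)
    public static double Precision(int[,] m, int c)
    {
        int j = c - 1, colSum = 0;
        for (int i = 0; i < m.GetLength(0); i++) colSum += m[i, j];
        return colSum == 0 ? 0.0 : (double)m[j, j] / colSum;
    }

    // Recall for class code c: correct predictions of c / all actual cases of c (row sum)
    public static double Recall(int[,] m, int c)
    {
        int i = c - 1, rowSum = 0;
        for (int j = 0; j < m.GetLength(1); j++) rowSum += m[i, j];
        return rowSum == 0 ? 0.0 : (double)m[i, i] / rowSum;
    }
}
```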

In previous research using a much larger dataset (N > 14,000) and a 60%/40% training/validation split, these classification models have typically achieved stable validation accuracies above 0.98 on Bezier curves. Here is an example (using Python) of a (24:12:6:2) binary neural network compared to other classification models (logistic regression, linear discriminant analysis, K-nearest neighbors, a CART decision tree, and a support vector machine) applied to that dataset.

Conclusion

The principal conclusion drawn from the first demonstration in this series was that Bezier curves can be very useful models for noisy longitudinal data collected at varying time or X-axis points. A second inference was that if models are good, then inferences about such models may be good as well. Machine learning models that could classify performance trajectories in various ways would surely be a beneficial tool for data-driven decision-making.

Schools and school districts constantly gather data using commercial student information systems (SIS), but this “raw” information is seldom accessible. Once a trained model is in hand, dashboards and other visualization methods can access that data, analyze it, and present educators with decision-making tools for individual or aggregated cohort analyses in real time. These are perhaps topics for future articles in this series. If you are interested in some of the actual research done using the approaches described in this project series, you may be interested in this recent AERA presentation entitled Data Visualizations in Public Education.

Beyond that, this project presents a variety of useful techniques and methods for working with Bezier curves and for training machine learning models to recognize patterns and trends reflected by such curves that can be adapted for other similar projects.

History

- 3rd September, 2018: Version 1.0