In the previous articles, we developed models to work with time series data: a Bitcoin price forecaster and an anomaly detector. In this article, we're going to put the two together, which will let us detect anomalies in forecasted (future) data.

Introduction

This series of articles will guide you through the steps necessary to develop a fully functional time series forecaster and anomaly detector application with AI. Our forecaster/detector will work with cryptocurrency data, specifically Bitcoin. However, after following along with this series, you'll be able to apply the concepts and approaches you've learned to any data of a similar nature.

To fully benefit from this series, you should have some Python, machine learning, and Keras skills. The entire project is available in my GitHub repository. You can also check out the fully interactive notebooks here and here.

Gathering Bitcoin Data from the Poloniex API

What better way to get current data for predictions than an API? We're going to use the Poloniex API to get the most recent data: the last 24 hours of Bitcoin prices, which we'll then use to predict the price for the next hour.

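Let's start by getting the Unix timestamps (in seconds, UTC) for 24 hours ago and for the current moment. We use `datetime.timestamp()` rather than `strftime('%s')`, because `%s` isn't a portable directive: it isn't supported on every platform, and where it is, it ignores the time zone attached to the `datetime`:

```python
from datetime import datetime, timedelta, timezone

# Unix timestamps (seconds) for 24 hours ago and for now
now = datetime.now(tz=timezone.utc)
past = int((now - timedelta(days=1)).timestamp())
current = int(now.timestamp())
```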
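To check that these timestamps don't depend on the machine's time zone, here is a minimal sketch that computes a 24-hour window from a timezone-aware `datetime` with `datetime.timestamp()`. The `utc_window` helper is hypothetical (not part of the project), and the dates are arbitrary example values.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple


def utc_window(now: datetime, hours: int = 24) -> Tuple[int, int]:
    """Return (start, end) Unix timestamps covering the `hours` before `now`.

    `now` must be timezone-aware; datetime.timestamp() then yields the same
    epoch seconds no matter which time zone `now` is expressed in.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    end = int(now.timestamp())
    start = int((now - timedelta(hours=hours)).timestamp())
    return start, end


now_utc = datetime(2024, 1, 2, tzinfo=timezone.utc)
# The same instant expressed in UTC-03:00
now_local = now_utc.astimezone(timezone(timedelta(hours=-3)))

print(utc_window(now_utc))    # (1704067200, 1704153600)
print(utc_window(now_local))  # (1704067200, 1704153600)
```

Both calls return the same pair because an aware `datetime` refers to a single instant, whatever offset it is displayed in.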

Now, let's pass these timestamps to the Poloniex API and parse the JSON response so that we can load it into a pandas DataFrame:

```python
import requests

# 5-minute (period=300 seconds) USDT/BTC candles between the two timestamps
url = ('https://poloniex.com/public?command=returnChartData'
       f'&currencyPair=USDT_BTC&start={past}&end={current}&period=300')
result = requests.get(url)
result.raise_for_status()  # stop early on HTTP errors
result = result.json()
```
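Concatenating query parameters by hand is easy to get wrong. Here is a sketch that builds the same `returnChartData` URL with the standard library's `urllib.parse.urlencode`; `chart_data_url` is a hypothetical helper and the timestamps are arbitrary examples.

```python
from urllib.parse import urlencode

POLONIEX_PUBLIC = 'https://poloniex.com/public'


def chart_data_url(start: int, end: int, pair: str = 'USDT_BTC', period: int = 300) -> str:
    """Build a returnChartData URL; `period` is the candle length in seconds."""
    query = urlencode({
        'command': 'returnChartData',
        'currencyPair': pair,
        'start': start,
        'end': end,
        'period': period,
    })
    return f'{POLONIEX_PUBLIC}?{query}'


print(chart_data_url(1704067200, 1704153600))
# https://poloniex.com/public?command=returnChartData&currencyPair=USDT_BTC&start=1704067200&end=1704153600&period=300
```

`requests` can also do the encoding for you: `requests.get('https://poloniex.com/public', params={...})` accepts the same dictionary.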

To get the DataFrame with the actual data, run:

```python
import pandas as pd

last_data = pd.DataFrame(result)
```

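This data includes columns we don't need, and its `date` column holds Unix timestamps, so let's clean it up: keep only the date and the weighted average price, convert the dates to datetimes, average the 5-minute candles per hour, and keep the last 24 hours. We also keep an unscaled copy for plotting later:

```python
# Keep only the timestamp and the weighted average price
last_data = last_data[['date', 'weightedAverage']].copy()
# resample() needs datetimes; the API returns Unix seconds
last_data['date'] = pd.to_datetime(last_data['date'], unit='s')
# Aggregate the 5-minute candles into hourly means and keep the last 24 hours
last_data = last_data.resample('H', on='date')[['weightedAverage']].mean()
last_data = last_data[-24:]
# Unscaled copy, used later for plotting
unscaled = last_data.copy()
```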
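To see the cleaning steps on known data, here is a sketch that replaces the API response with 288 synthetic five-minute candles (24 hours). The timestamps and prices are made up; the point is that a Unix-second `date` column must be converted with `pd.to_datetime(..., unit='s')` before `resample` can use it. `'60min'` is the same hourly frequency as `'H'` (an alias that newer pandas versions deprecate in favor of `'h'`).

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the API response: 288 five-minute candles (24 h)
t0 = 1_699_999_200  # a Unix timestamp that falls exactly on an hour boundary
raw = pd.DataFrame({
    'date': t0 + 300 * np.arange(288),               # Unix seconds
    'weightedAverage': np.arange(288, dtype=float),  # made-up prices
})

# resample() needs datetimes, so convert the Unix seconds first
raw['date'] = pd.to_datetime(raw['date'], unit='s')
hourly = raw.resample('60min', on='date')[['weightedAverage']].mean()
hourly = hourly.iloc[-24:]

print(len(hourly))                        # 24
print(hourly['weightedAverage'].iloc[0])  # 5.5, the mean of prices 0..11
```

Each hourly row averages twelve 5-minute candles, which is why 24 hours of data collapse into exactly 24 rows.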

Before using this data to make predictions, let's apply the scaler we fitted earlier so that the values are compatible with our two models:

```python
# scale_samples() and scaler come from the previous articles
last_data_scaled = scale_samples(last_data, last_data.columns[0], scaler)
```
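The internals of `scale_samples` and `scaler` are defined in the previous articles and aren't shown here. Assuming the scaler is a min-max scaler (such as scikit-learn's `MinMaxScaler` with its default 0 to 1 range), this numpy-only sketch shows the key rule: new data must be transformed with the minimum and maximum learned from the training data, not refitted. `fit_min_max` and `transform` are hypothetical helpers and the prices are made up.

```python
import numpy as np


def fit_min_max(train: np.ndarray):
    """Return the (min, max) learned from training data, as a min-max scaler would."""
    return float(train.min()), float(train.max())


def transform(values: np.ndarray, params) -> np.ndarray:
    """Scale with the *training* min/max; never refit on the new window."""
    lo, hi = params
    return (values - lo) / (hi - lo)


train_prices = np.array([10_000.0, 15_000.0, 20_000.0])  # made-up history
params = fit_min_max(train_prices)

new_window = np.array([18_000.0, 25_000.0, 30_000.0])    # made-up recent prices
print(transform(new_window, params))  # [0.8 1.5 2. ]
```

Prices above the training maximum map to values above 1, a range the models never saw during training. That is worth keeping in mind when interpreting the forecast.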

Forecasting and Detecting Anomalies in the Poloniex Data

Now that we’ve polished the data obtained from Poloniex, let’s forecast and detect anomalies in it:

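```python
import pandas as pd

# Forecast the next hour from the last 24 scaled hourly prices
predictions = regressor.predict(last_data_scaled.values.reshape(1, 24, 1))

# Slide the window: drop the oldest hour and append the unscaled forecast
# (pd.concat replaces DataFrame.append, which was removed in pandas 2.0)
unscaled = unscaled.iloc[1:]
next_hour = unscaled.index[-1] + pd.Timedelta(hours=1)
forecast = pd.DataFrame(scaler.inverse_transform(predictions)[0],
                        index=[next_hour], columns=['weightedAverage'])
unscaled = pd.concat([unscaled, forecast])
```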
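The window-sliding step can be checked in isolation. This sketch uses a made-up 24-hour price window and a fixed stand-in for the forecast; `slide_window` is a hypothetical helper. It relies on `pd.concat`, which works in all recent pandas versions, whereas `DataFrame.append` was removed in pandas 2.0.

```python
import pandas as pd


def slide_window(window: pd.DataFrame, forecast: float) -> pd.DataFrame:
    """Drop the oldest row and append `forecast` one hour after the last row."""
    next_hour = window.index[-1] + pd.Timedelta(hours=1)
    new_row = pd.DataFrame({'weightedAverage': [forecast]}, index=[next_hour])
    return pd.concat([window.iloc[1:], new_row])


# Made-up 24-hour window of prices
idx = pd.date_range('2024-01-01', periods=24, freq='60min')
window = pd.DataFrame({'weightedAverage': [float(i) for i in range(24)]}, index=idx)

shifted = slide_window(window, forecast=99.0)
print(len(shifted))      # 24
print(shifted.index[0])  # 2024-01-01 01:00:00
print(shifted.index[-1]) # 2024-01-02 00:00:00
```

Keeping the window at exactly 24 rows matters because both models expect input shaped `(1, 24, 1)`.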

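```python
import numpy as np

# Rescale the shifted window (23 observed hours + 1 forecast) for the detector
future_scaled = scale_samples(unscaled.copy(), unscaled.columns[0], scaler)
future_scaled_pred = detector.predict(future_scaled.values.reshape(1, 24, 1))

# Mean absolute reconstruction error over the 24 time steps, shape (1, 1)
future_loss = np.mean(np.abs(future_scaled_pred - future_scaled.values.reshape(1, 24, 1)), axis=1)

# A single loss value for the whole window, so every row gets the same flag
unscaled['threshold'] = threshold
unscaled['loss'] = future_loss[0][0]
unscaled['anomaly'] = unscaled['loss'] > threshold
```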
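The loss computation relies on numpy's `axis` argument: with arrays shaped `(samples, time_steps, features)`, `axis=1` averages the absolute error over the 24 time steps, leaving one value per sample and feature. A minimal sketch with made-up arrays and a hypothetical threshold (the real one comes from the previous article):

```python
import numpy as np

# Made-up scaled window (1 sample, 24 time steps, 1 feature) and a reconstruction
actual = np.linspace(0.0, 1.0, 24).reshape(1, 24, 1)
reconstruction = actual + 0.05       # every step off by 0.05...
reconstruction[0, -1, 0] += 0.95     # ...except the last one, off by 1.0

# Mean absolute error over the time-step axis
loss = np.mean(np.abs(reconstruction - actual), axis=1)
print(loss.shape)  # (1, 1)

threshold = 0.07  # hypothetical value for illustration only
print(loss[0][0] > threshold)  # True
```

Because the result is a single number per window, every row of `unscaled` receives the same `loss` and `anomaly` values; the flag describes the window as a whole rather than an individual hour.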

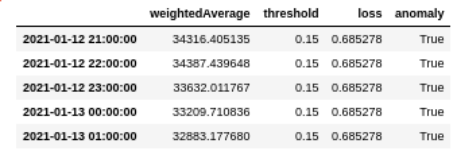

unscaled.head()

You should get a DataFrame that looks like this:

Finally, to plot the results:

```python
import plotly.graph_objects as go

anomalies = unscaled[unscaled['anomaly']]
fig = go.Figure()
fig.add_trace(go.Scatter(x=unscaled.index, y=unscaled['weightedAverage'], mode='lines', name='BTC Price'))
# x and y must both come from the anomalous rows so that they line up
fig.add_trace(go.Scatter(x=anomalies.index, y=anomalies['weightedAverage'], mode='markers', marker_symbol='x', marker_size=10, name='Anomaly'))
# Shade the last interval, which ends at the forecasted hour
fig.add_vrect(x0=unscaled.index[-2], x1=unscaled.index[-1], fillcolor="LightSalmon", opacity=1, layer="below", line_width=0)
fig.update_layout(showlegend=True, title="BTC price predictions and anomalies", xaxis_title="Time (UTC)", yaxis_title="Prices", font=dict(family="Courier New, monospace"))
fig.show()
```

As output, we should get something like this scatter plot:

Conclusions

As you can see, the forecasted value is very low compared with the previous ones. If you inspect the historical data, you won't find a sequence of values as high as the current ones: the current Bitcoin price is itself an anomaly with no precedent in the training data, so the models have never seen values in this range. Even though the anomaly detector flags these observations as anomalies, the forecaster doesn't perform accurately; it can only be expected to be accurate for values similar to those it was trained on. To mitigate this problem, you'll need to retrain the models on more recent data that includes the current price range.

To make predictions on data that streams in continuously, a good approach is to run the last chunks of code inside a loop that runs once an hour. That way, you'll always get the next hour's Bitcoin price forecast and detect any anomaly in the current pattern. If you want to go further, consider using a framework such as Streamlit for this task; you'll also get a nice user interface.
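A minimal sketch of such an hourly loop, using only the standard library. `run_hourly` and `seconds_until_next_hour` are hypothetical helpers, and `job` stands for a function you would write that wraps the fetching, forecasting, detection, and plotting steps above.

```python
import time
from datetime import datetime, timedelta, timezone


def seconds_until_next_hour(now: datetime) -> float:
    """Seconds from `now` until the next full hour (hh:00:00)."""
    next_hour = (now + timedelta(hours=1)).replace(minute=0, second=0, microsecond=0)
    return (next_hour - now).total_seconds()


def run_hourly(job, iterations=None):
    """Call `job()` right after each full hour; `iterations=None` loops forever."""
    done = 0
    while iterations is None or done < iterations:
        time.sleep(seconds_until_next_hour(datetime.now(tz=timezone.utc)))
        job()
        done += 1


print(seconds_until_next_hour(datetime(2024, 1, 1, 10, 15, tzinfo=timezone.utc)))  # 2700.0
```

For example, `run_hourly(update_forecast)` would call a hypothetical `update_forecast()` once per hour. Sleeping until the next full hour, rather than for a fixed 3600 seconds, keeps the schedule from drifting as each run takes some time.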

Happy forecasting and detection!

Sergio Virahonda grew up in Venezuela, where he obtained a bachelor's degree in Telecommunications Engineering. He moved abroad 4 years ago and has since been focused on building a meaningful data science career. He's currently living in Argentina, writing code as a freelance developer.