Wouldn't it be cool to finish up by detecting movements in our faces? Here we'll show you how to use the key facial points to check for when we open our mouths and blink our eyes to activate on-screen events.

Introduction

Apps like Snapchat offer an amazing variety of face filters and lenses that let you overlay interesting things on your photos and videos. If you’ve ever given yourself virtual dog ears or a party hat, you know how much fun it can be!

Have you wondered how you’d create these kinds of filters from scratch? Well, now’s your chance to learn, all within your web browser! In this series, we’re going to see how to create Snapchat-style filters in the browser, train an AI model to understand facial expressions, and do even more using TensorFlow.js and face tracking.

You are welcome to download the demo of this project. You may need to enable WebGL in your web browser for performance.

You can also download the code and files for this series.

If you are new to TensorFlow.js, we recommend that you first check out this guide: Getting Started with Deep Learning in Your Browser Using TensorFlow.js.

If you would like to see more of what is possible in the web browser with TensorFlow.js, check out these AI series: Computer Vision with TensorFlow.js and Chatbots using TensorFlow.js.

Welcome to the finale of this AI series on virtual fun with face tracking! Wouldn’t it be cool to finish up by detecting movements in our faces? Let us show you how to use the key facial points to check for when we open our mouths and blink our eyes to activate on-screen events.

Detecting Eye Blinks and Mouth Opens

We are going to use the key facial points from the face tracking code we developed in the first article of this series, Real-Time Face Tracking, to detect eye blinks and mouth opens.

The annotated face points give us enough information to determine when our eyes are closed and when our mouth is open. The trick is to scale the measured distances by the relative size of the full face, so the same thresholds hold whether the face is near the camera or far from it.

To do so, we can call on our handy eye-to-eye distance to approximate the relative scale in the trackFace function:

async function trackFace() {

...

faces.forEach( face => {

const eyeDist = Math.sqrt(

( face.annotations.leftEyeUpper1[ 3 ][ 0 ] - face.annotations.rightEyeUpper1[ 3 ][ 0 ] ) ** 2 +

( face.annotations.leftEyeUpper1[ 3 ][ 1 ] - face.annotations.rightEyeUpper1[ 3 ][ 1 ] ) ** 2 +

( face.annotations.leftEyeUpper1[ 3 ][ 2 ] - face.annotations.rightEyeUpper1[ 3 ][ 2 ] ) ** 2

);

const faceScale = eyeDist / 80;

});

requestAnimationFrame( trackFace );

}
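The same scale calculation can be sketched as a pair of small standalone functions. This is only an illustration: the names pointDistance, computeFaceScale, and REFERENCE_EYE_DIST are hypothetical helpers that are not part of the series code, and the sample points are made up rather than taken from a real face.

```javascript
// Euclidean distance between two [ x, y, z ] points
function pointDistance( a, b ) {
    return Math.hypot( a[ 0 ] - b[ 0 ], a[ 1 ] - b[ 1 ], a[ 2 ] - b[ 2 ] );
}

// Hypothetical name for the constant 80 that trackFace divides by
const REFERENCE_EYE_DIST = 80;

// Same eye-to-eye calculation as in trackFace, written as a function
function computeFaceScale( annotations ) {
    const eyeDist = pointDistance(
        annotations.leftEyeUpper1[ 3 ],
        annotations.rightEyeUpper1[ 3 ]
    );
    return eyeDist / REFERENCE_EYE_DIST;
}

// Made-up annotation objects: only index 3 is filled in, because that is
// the only point this calculation reads
function sampleEyes( leftPoint, rightPoint ) {
    const leftEyeUpper1 = [];
    const rightEyeUpper1 = [];
    leftEyeUpper1[ 3 ] = leftPoint;
    rightEyeUpper1[ 3 ] = rightPoint;
    return { leftEyeUpper1, rightEyeUpper1 };
}

const nearFace = sampleEyes( [ 260, 200, 0 ], [ 100, 200, 0 ] ); // eyes 160 apart
const farFace = sampleEyes( [ 140, 200, 0 ], [ 100, 200, 0 ] );  // eyes 40 apart

console.log( computeFaceScale( nearFace ) ); // 2
console.log( computeFaceScale( farFace ) );  // 0.5
```

A face that appears twice as large yields twice the scale, so dividing any other facial distance by this value makes it comparable across camera distances.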

Then, we can calculate the distance between the upper part of the eye and the lower part of the eye for both the left and right eyes, and use the faceScale value to approximate when a threshold has been crossed. We can use a similar calculation to detect mouth opens.

Take a look:

async function trackFace() {

...

let areEyesClosed = false, isMouthOpen = false;

faces.forEach( face => {

...

const leftEyesDist = Math.sqrt(

( face.annotations.leftEyeLower1[ 4 ][ 0 ] - face.annotations.leftEyeUpper1[ 4 ][ 0 ] ) ** 2 +

( face.annotations.leftEyeLower1[ 4 ][ 1 ] - face.annotations.leftEyeUpper1[ 4 ][ 1 ] ) ** 2 +

( face.annotations.leftEyeLower1[ 4 ][ 2 ] - face.annotations.leftEyeUpper1[ 4 ][ 2 ] ) ** 2

);

const rightEyesDist = Math.sqrt(

( face.annotations.rightEyeLower1[ 4 ][ 0 ] - face.annotations.rightEyeUpper1[ 4 ][ 0 ] ) ** 2 +

( face.annotations.rightEyeLower1[ 4 ][ 1 ] - face.annotations.rightEyeUpper1[ 4 ][ 1 ] ) ** 2 +

( face.annotations.rightEyeLower1[ 4 ][ 2 ] - face.annotations.rightEyeUpper1[ 4 ][ 2 ] ) ** 2

);

if( leftEyesDist / faceScale < 23.5 ) {

areEyesClosed = true;

}

if( rightEyesDist / faceScale < 23.5 ) {

areEyesClosed = true;

}

const lipsDist = Math.sqrt(

( face.annotations.lipsLowerInner[ 5 ][ 0 ] - face.annotations.lipsUpperInner[ 5 ][ 0 ] ) ** 2 +

( face.annotations.lipsLowerInner[ 5 ][ 1 ] - face.annotations.lipsUpperInner[ 5 ][ 1 ] ) ** 2 +

( face.annotations.lipsLowerInner[ 5 ][ 2 ] - face.annotations.lipsUpperInner[ 5 ][ 2 ] ) ** 2

);

if( lipsDist / faceScale > 20 ) {

isMouthOpen = true;

}

});

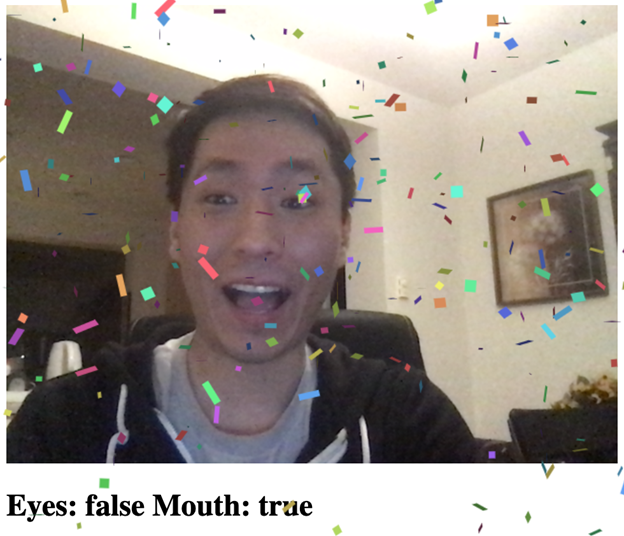

setText( `Eyes: ${areEyesClosed} Mouth: ${isMouthOpen}` );

requestAnimationFrame( trackFace );

}

Now we are set up to detect some face events.
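To try the thresholds without a webcam, the checks above can be pulled into a pure function and fed synthetic points. This is a rough sketch under assumptions: detectFaceEvents, pointDistance, and makeFace are hypothetical names, and the mock faces use made-up coordinates that do not reflect the real layout of the model's annotations. Only the indices and thresholds come from the code above.

```javascript
// Euclidean distance between two [ x, y, z ] points
function pointDistance( a, b ) {
    return Math.hypot( a[ 0 ] - b[ 0 ], a[ 1 ] - b[ 1 ], a[ 2 ] - b[ 2 ] );
}

// Thresholds copied from the trackFace code above
const EYE_CLOSED_THRESHOLD = 23.5;
const MOUTH_OPEN_THRESHOLD = 20;

// Hypothetical pure version of the per-face checks in trackFace
function detectFaceEvents( annotations ) {
    const faceScale = pointDistance(
        annotations.leftEyeUpper1[ 3 ], annotations.rightEyeUpper1[ 3 ] ) / 80;
    const leftEyeGap = pointDistance(
        annotations.leftEyeLower1[ 4 ], annotations.leftEyeUpper1[ 4 ] ) / faceScale;
    const rightEyeGap = pointDistance(
        annotations.rightEyeLower1[ 4 ], annotations.rightEyeUpper1[ 4 ] ) / faceScale;
    const lipsGap = pointDistance(
        annotations.lipsLowerInner[ 5 ], annotations.lipsUpperInner[ 5 ] ) / faceScale;
    return {
        // As in the article, either eye closing counts as "eyes closed"
        areEyesClosed: leftEyeGap < EYE_CLOSED_THRESHOLD || rightEyeGap < EYE_CLOSED_THRESHOLD,
        isMouthOpen: lipsGap > MOUTH_OPEN_THRESHOLD
    };
}

// Made-up face: every annotation array repeats one point six times so that
// indices 3, 4, and 5 all exist
function makeFace( eyeDist, eyeGap, lipsGap ) {
    const repeat = point => Array.from( { length: 6 }, () => point );
    return {
        leftEyeUpper1: repeat( [ eyeDist, 100, 0 ] ),
        leftEyeLower1: repeat( [ eyeDist, 100 + eyeGap, 0 ] ),
        rightEyeUpper1: repeat( [ 0, 100, 0 ] ),
        rightEyeLower1: repeat( [ 0, 100 + eyeGap, 0 ] ),
        lipsUpperInner: repeat( [ eyeDist / 2, 200, 0 ] ),
        lipsLowerInner: repeat( [ eyeDist / 2, 200 + lipsGap, 0 ] )
    };
}

const relaxed = detectFaceEvents( makeFace( 80, 30, 5 ) );     // scale 1
const surprised = detectFaceEvents( makeFace( 80, 10, 40 ) );  // scale 1
const closeUp = detectFaceEvents( makeFace( 160, 30, 30 ) );   // scale 2

console.log( relaxed );   // { areEyesClosed: false, isMouthOpen: false }
console.log( surprised ); // { areEyesClosed: true, isMouthOpen: true }
console.log( closeUp );   // { areEyesClosed: true, isMouthOpen: false }
```

The last case shows why the scaling matters: the same raw gap of 30 that reads as open eyes at scale 1 falls below the threshold once the face appears twice as large.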

Confetti Party Time

Every celebration needs confetti, right? We are going to connect virtual confetti to our eye blinks and mouth opens to make it a whole party.

For this, we’ll use an open-source JavaScript library called Party-JS. Include it at the top of your page like this:

<script src="https://cdn.jsdelivr.net/npm/party-js@1.0.0/party.min.js"></script>

Let’s keep a global state variable to track whether we have already launched the confetti.

let didParty = false;

Last but not least, we can activate the party animation whenever our eyes blink or our mouth opens.

async function trackFace() {

...

if( !didParty && ( areEyesClosed || isMouthOpen ) ) {

party.screen();

}

didParty = areEyesClosed || isMouthOpen;

requestAnimationFrame( trackFace );

}

And it’s party time! By harnessing the power of face tracking and confetti, you’ve got a party on your screen that starts right from your lips.
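The didParty flag makes the confetti fire once per blink or mouth open, rather than on every frame while the eyes stay closed. A minimal sketch of that rising-edge pattern follows. Here createRisingEdgeTrigger is a hypothetical helper, and a simple counter stands in for party.screen() so the snippet runs without the library.

```javascript
// Wraps a callback so it only fires when the input changes from false to true
function createRisingEdgeTrigger( onTrigger ) {
    let wasActive = false; // plays the role of didParty
    return function update( isActive ) {
        if( !wasActive && isActive ) {
            onTrigger();
        }
        wasActive = isActive;
    };
}

// Stand-in for party.screen(): count how many times confetti would launch
let partyCount = 0;
const updateParty = createRisingEdgeTrigger( () => { partyCount++; } );

// One value per frame, standing in for areEyesClosed || isMouthOpen
const frames = [ false, true, true, true, false, true ];
frames.forEach( isActive => updateParty( isActive ) );

console.log( partyCount ); // 2
```

The three consecutive true frames launch confetti only once, and the trigger re-arms after the false frame.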

Finish Line

This project wouldn’t be complete without the full code for you to look through, so here it is:

<html>

<head>

<title>Tracking Faces in the Browser with TensorFlow.js</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.4.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/face-landmarks-detection@0.0.1/dist/face-landmarks-detection.js"></script>

<script src="https://cdn.jsdelivr.net/npm/party-js@1.0.0/party.min.js"></script>

</head>

<body>

<canvas id="output"></canvas>

<video id="webcam" playsinline style="

visibility: hidden;

width: auto;

height: auto;

">

</video>

<h1 id="status">Loading...</h1>

<script>

function setText( text ) {

document.getElementById( "status" ).innerText = text;

}

async function setupWebcam() {

return new Promise( ( resolve, reject ) => {

const webcamElement = document.getElementById( "webcam" );

const navigatorAny = navigator;

navigator.getUserMedia = navigator.getUserMedia ||

navigatorAny.webkitGetUserMedia || navigatorAny.mozGetUserMedia ||

navigatorAny.msGetUserMedia;

if( navigator.getUserMedia ) {

navigator.getUserMedia( { video: true },

stream => {

webcamElement.srcObject = stream;

webcamElement.addEventListener( "loadeddata", resolve, false );

},

error => reject( error ) );

}

else {

reject( new Error( "getUserMedia is not supported in this browser" ) );

}

});

}

let output = null;

let model = null;

let didParty = false;

async function trackFace() {

const video = document.getElementById( "webcam" );

const faces = await model.estimateFaces( {

input: video,

returnTensors: false,

flipHorizontal: false,

});

output.drawImage(

video,

0, 0, video.width, video.height,

0, 0, video.width, video.height

);

let areEyesClosed = false, isMouthOpen = false;

faces.forEach( face => {

const eyeDist = Math.sqrt(

( face.annotations.leftEyeUpper1[ 3 ][ 0 ] - face.annotations.rightEyeUpper1[ 3 ][ 0 ] ) ** 2 +

( face.annotations.leftEyeUpper1[ 3 ][ 1 ] - face.annotations.rightEyeUpper1[ 3 ][ 1 ] ) ** 2 +

( face.annotations.leftEyeUpper1[ 3 ][ 2 ] - face.annotations.rightEyeUpper1[ 3 ][ 2 ] ) ** 2

);

const faceScale = eyeDist / 80;

const leftEyesDist = Math.sqrt(

( face.annotations.leftEyeLower1[ 4 ][ 0 ] - face.annotations.leftEyeUpper1[ 4 ][ 0 ] ) ** 2 +

( face.annotations.leftEyeLower1[ 4 ][ 1 ] - face.annotations.leftEyeUpper1[ 4 ][ 1 ] ) ** 2 +

( face.annotations.leftEyeLower1[ 4 ][ 2 ] - face.annotations.leftEyeUpper1[ 4 ][ 2 ] ) ** 2

);

const rightEyesDist = Math.sqrt(

( face.annotations.rightEyeLower1[ 4 ][ 0 ] - face.annotations.rightEyeUpper1[ 4 ][ 0 ] ) ** 2 +

( face.annotations.rightEyeLower1[ 4 ][ 1 ] - face.annotations.rightEyeUpper1[ 4 ][ 1 ] ) ** 2 +

( face.annotations.rightEyeLower1[ 4 ][ 2 ] - face.annotations.rightEyeUpper1[ 4 ][ 2 ] ) ** 2

);

if( leftEyesDist / faceScale < 23.5 ) {

areEyesClosed = true;

}

if( rightEyesDist / faceScale < 23.5 ) {

areEyesClosed = true;

}

const lipsDist = Math.sqrt(

( face.annotations.lipsLowerInner[ 5 ][ 0 ] - face.annotations.lipsUpperInner[ 5 ][ 0 ] ) ** 2 +

( face.annotations.lipsLowerInner[ 5 ][ 1 ] - face.annotations.lipsUpperInner[ 5 ][ 1 ] ) ** 2 +

( face.annotations.lipsLowerInner[ 5 ][ 2 ] - face.annotations.lipsUpperInner[ 5 ][ 2 ] ) ** 2

);

if( lipsDist / faceScale > 20 ) {

isMouthOpen = true;

}

});

if( !didParty && ( areEyesClosed || isMouthOpen ) ) {

party.screen();

}

didParty = areEyesClosed || isMouthOpen;

setText( `Eyes: ${areEyesClosed} Mouth: ${isMouthOpen}` );

requestAnimationFrame( trackFace );

}

(async () => {

await setupWebcam();

const video = document.getElementById( "webcam" );

video.play();

let videoWidth = video.videoWidth;

let videoHeight = video.videoHeight;

video.width = videoWidth;

video.height = videoHeight;

let canvas = document.getElementById( "output" );

canvas.width = video.width;

canvas.height = video.height;

output = canvas.getContext( "2d" );

output.translate( canvas.width, 0 );

output.scale( -1, 1 );

output.fillStyle = "#fdffb6";

output.strokeStyle = "#fdffb6";

output.lineWidth = 2;

model = await faceLandmarksDetection.load(

faceLandmarksDetection.SupportedPackages.mediapipeFacemesh

);

setText( "Loaded!" );

trackFace();

})();

</script>

</body>

</html>

What’s Next?

Actually, that’s all for now. In this series, we learned how to use AI to track faces in real time and to detect facial emotions as well as mouth and eye movements. We even built our own augmented reality fun with virtual glasses from scratch, all running within a web browser.

Although we've chosen to cover fun examples, there are plenty of business applications for this technology as well. Imagine an eyeglasses retailer who wants to let website visitors try on glasses while browsing the website. It's not hard to envision how you'd use what you've learned in this series to build that functionality. Hopefully, you now have some tools to build more useful solutions with AI and TensorFlow.js.

Try putting this confetti into the virtual glasses project, or see if you can use the emotion detection on a photo album. If you would like to learn how to do more with AI in your browser, check out the related series, Computer Vision with TensorFlow.js and Chatbots using TensorFlow.js.

And if this series inspires you to build more cool projects, please share them with us! We would love to hear about them.

Good luck and have fun coding!

Raphael Mun is a tech entrepreneur and educator who has been developing software professionally for over 20 years. He currently runs Lemmino, Inc and teaches and entertains through his Instafluff livestreams on Twitch building open source projects with his community.
